Introduction

Over the past decade, artificial intelligence (AI) technology has taken incredible steps forward. Writing in the New York Times, technology columnist Kevin Roose recently proclaimed a “golden age of progress” for AI.

This progress would not have been possible without advances in machine learning (ML), including deep learning and reinforcement learning. Hardware capable of running computationally demanding AI models has been another contributor. Some developments have been slow and steady, whereas others have been genuine breakthroughs.

DeepMind’s AlphaFold, which predicts the 3D structure of proteins, has been one of those breakthroughs in science. The company’s XLand environment may well repeat that success.


What is XLand?

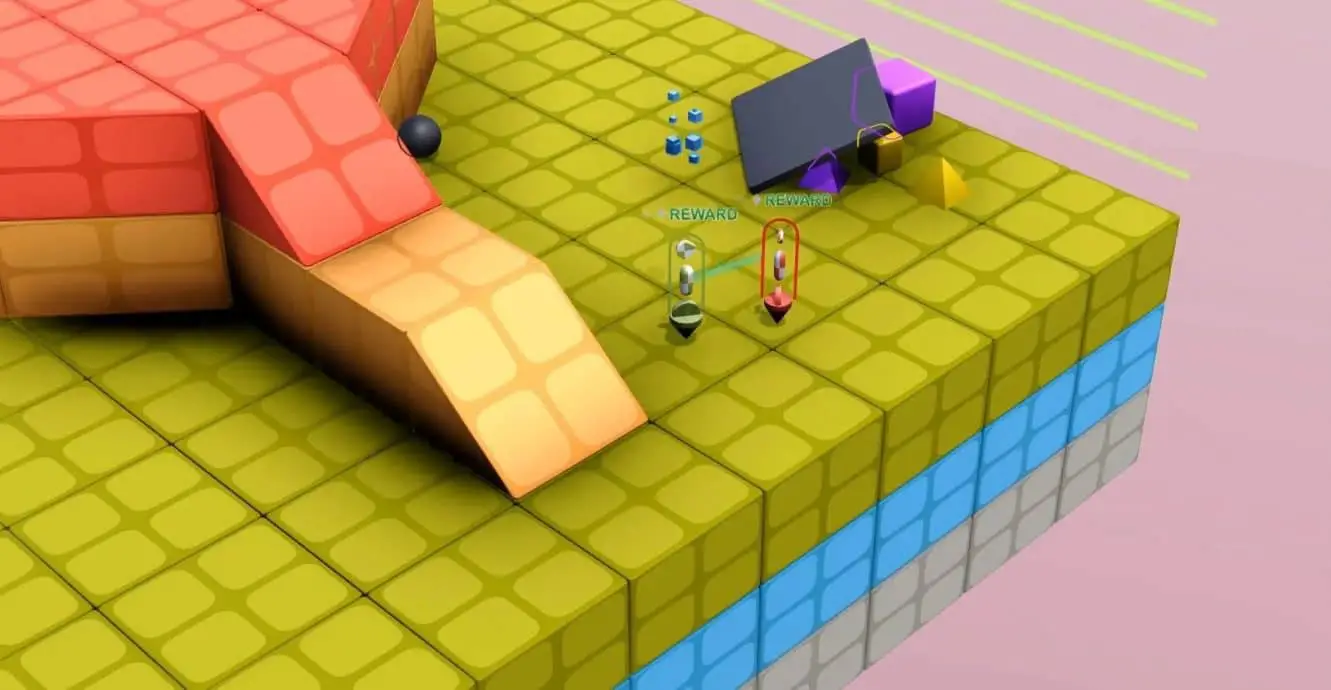

XLand is a digital 3D training environment for artificial intelligence agents. The environment resembles a colorful, video-game-like playground in which the players face billions of different tasks to solve.

In that respect, XLand is like other AI training tools. As we dive deeper into this new release, it will become clear just how much further this environment goes.

This tool is far more than an AI playground. Tasks are created by changing the layout of the environment, the rules of the game, and the number of players. A playground manager is in charge of adapting the rules and the layout of the environment. The players are AI agents that use XLand to tackle increasingly complex tasks.

Both playground managers and AI players use a technique called reinforcement learning. They learn by trial and error, being rewarded for solving a problem correctly and punished when they get things wrong.
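To make the trial-and-error idea concrete, here is a minimal sketch of tabular Q-learning, one classic reinforcement learning algorithm, on a made-up corridor task. It is not how XLand's agents are trained (DeepMind's agents rely on deep neural networks and far richer tasks); the corridor, the +1 reward, the small step penalty, and the learning parameters are all hypothetical choices for illustration.

```python
import random

# Hypothetical toy "playground": a 1-D corridor of cells 0..5.
# The agent starts at cell 0; reaching cell 5 earns +1, every other move costs -0.01.
N_CELLS = 6
GOAL = N_CELLS - 1
ACTIONS = (-1, +1)  # move left, move right


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    if next_state == GOAL:
        return next_state, 1.0, True      # reward for solving the task
    return next_state, -0.01, False       # small penalty for every other move


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # q[s][i] estimates the long-term reward of taking ACTIONS[i] in state s.
    q = [[0.0, 0.0] for _ in range(N_CELLS)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Trial and error: occasionally try a random action, otherwise the best known one.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Nudge the estimate toward the observed reward plus discounted future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best known action in every non-goal cell."""
    return [ACTIONS[max(range(2), key=lambda i: q[s][i])] for s in range(GOAL)]


if __name__ == "__main__":
    q_table = train()
    print(greedy_policy(q_table))  # [1, 1, 1, 1, 1]
```

With these settings the learned policy moves right from every cell: the step penalties make wandering costly, and the goal reward propagates backwards through the table.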


XLand Characteristics

Besides reinforcement learning, XLand relies on open-ended learning. In this respect, it resembles the way humans learn. Children at play, for example, learn without being given an explicit goal. They simply explore their surroundings with different toys to develop a better understanding of their world.

Here are some of the main characteristics of XLand:

- Learning progresses from simple to complex tasks

- Learning is open-ended and based on reinforcement

- Players learn by experimenting

In the XLand environment, AI players start out small and then progress to more complex tasks. This feature is another parallel to human learning. Infants tend to play with simple toys, solving easy tasks. As they get older, their toys become more complex, at some point including entire worlds.

AI players in XLand start with single-player games based on simple tasks, such as finding an object of a particular shape and color. Once they perform well in these simple games, XLand presents them with more complex challenges: tasks become harder, and more players are added to the game.
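The simple-to-complex progression described above resembles what ML researchers call curriculum learning. Below is a minimal sketch of one possible promotion rule; the stages, the 80% success threshold, and the 10-episode window are hypothetical and are not taken from XLand.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Stage:
    description: str
    difficulty: int   # 1 = easiest (hypothetical scale)
    num_players: int


# Hypothetical curriculum: single-player tasks first, multi-player tasks later.
CURRICULUM = [
    Stage("find an object of a given shape and color", 1, 1),
    Stage("place one object next to another", 2, 1),
    Stage("keep an object away from an opponent", 3, 2),
    Stage("cooperate with a teammate against two opponents", 4, 4),
]


def next_task_index(current: int, recent_successes: List[bool],
                    threshold: float = 0.8, window: int = 10) -> int:
    """Move to the next, harder stage once the recent success rate reaches `threshold`."""
    if len(recent_successes) < window:
        return current  # not enough evidence yet; keep practicing
    rate = sum(recent_successes[-window:]) / window
    if rate >= threshold and current + 1 < len(CURRICULUM):
        return current + 1
    return current  # either not good enough yet, or already at the hardest stage


if __name__ == "__main__":
    level = next_task_index(0, [True] * 9 + [False])
    print(CURRICULUM[level].description)  # place one object next to another
```

A fixed threshold is the simplest choice; a real system could just as well adjust difficulty continuously rather than in discrete stages.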

During training, XLand's agents played hundreds of thousands of different games across as many as 4,000 worlds, and some worked through more than three million unique tasks. Learning within the environment is open-ended, meaning that there is no single best thing to do in each situation.

This is a distinct departure from the way most existing reinforcement learning tools work. In XLand, AI players are free to experiment: they can try a solution just to see what happens rather than being limited to a single right-or-wrong answer. They can also use objects as tools, for example to reach another object or to hide behind something large enough. Again, the idea is not to restrict learning but to let players learn the way humans do.
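One common way to let an agent experiment instead of always picking its current favorite is Boltzmann (softmax) exploration: actions with higher estimated value are chosen more often, but every action keeps some chance of being tried. The sketch below assumes the agent already has value estimates for a handful of hypothetical actions; it illustrates the general idea and is not XLand's actual mechanism.

```python
import math
import random
from typing import List, Optional, Sequence


def softmax_policy(values: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Convert action-value estimates into selection probabilities (Boltzmann exploration)."""
    m = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp((v - m) / temperature) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def choose_action(actions: Sequence[str], values: Sequence[float],
                  temperature: float = 1.0, rng: Optional[random.Random] = None) -> str:
    """Sample an action: better-looking actions are favored, but every action can still be tried."""
    rng = rng or random.Random()
    probs = softmax_policy(values, temperature)
    return rng.choices(actions, weights=probs, k=1)[0]


if __name__ == "__main__":
    # Hypothetical actions and value estimates for an XLand-like scene.
    actions = ["push the box", "climb the ramp", "hide behind the wall"]
    values = [0.2, 1.5, -0.3]
    print(softmax_policy(values))
    print(choose_action(actions, values, rng=random.Random(42)))
```

Lowering `temperature` makes the agent behave almost greedily, while raising it pushes the choice toward uniform experimentation.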

Children experiment with their toys as well as their food, discovering the world around them naturally and iteratively. The principle of experimentation persists into adulthood; scientists, for example, rely on it. Although they are generally guided by a hypothesis rather than an entirely open-ended question, scientists remain open to unexpected discoveries during their experiments.

Theories of Intelligence

The concept of artificial intelligence has been around for roughly 70 years. While some sources credit codebreaker Alan Turing with laying the foundations of today’s AI, it was John McCarthy, then at Dartmouth College, who coined the term in a 1955 proposal for the 1956 Dartmouth workshop.

AI allows machines to complete tasks by simulating human intelligence. This technology does not replace the type of intelligence displayed by humans or animals but instead augments and imitates it. Those fundamentals have not changed since the early days of AI. What has changed, however, is that the technology has entered every aspect of our lives. From suggested viewing on streaming services to home assistants like Amazon’s Alexa, we are surrounded by AI applications.

To better understand modern AI, it helps to divide it into two categories: narrow AI and general (sometimes called broad) AI. The examples above are all narrow AI, which is designed to perform a specific task. Even chatbots are narrow AI applications.

General AI is a much wider application of AI technology, aiming to get closer to the flexibility and adaptability of the human brain. At this point, true general AI remains a concept rather than a reality. However, tools like XLand may be starting to change that.

Differences and Challenges Between Simulations and the Real World

Simulations are essential for training many AI systems, particularly reinforcement learning agents. They let machines compress the lifetime of accumulated experience that humans benefit from into a much shorter time. Without simulations, machines would likely take years to pick up human-like skills.

However, as powerful as simulations can be, they cannot perfectly depict reality. Experts refer to this “discrepancy between simulated and real environments” and the difficulty of transferring experiences from one to the other as the reality gap. While simulations can be made more realistic, doing so takes considerable effort and makes them more expensive to run.

Moreover, most simulators have flaws, and powerful machine learning algorithms can find and exploit them, effectively cheating the simulation. The problem is that these exploits would not work in the real world.

Technology is improving, and the gap between simulation and reality is closing. For the moment, though, a combination of simulation and real-world training remains the best way to train reinforcement learning applications.

The Challenges of Deep Reinforcement Learning

Before considering the challenges of deep reinforcement learning, it is worth clarifying some terminology. Reinforcement learning is a branch of machine learning. Machine learning refers to computers learning patterns from data rather than being explicitly programmed for each task.

Deep learning takes this approach one step further. It uses artificial neural networks with many layers, which make it possible for the machine to analyze and process huge amounts of data, including unstructured data such as images, audio files, and text. This allows computers to process far more data than humans could and to perform tasks generally associated with human intelligence, such as learning, problem-solving, observation, and, of course, data analysis.

Reinforcement learning (RL) involves a process of trial and error. Deep reinforcement learning applies the same principle but uses deep neural networks, which lets it handle much larger amounts of data, such as raw images of a game screen. Typically, RL involves AI players playing round after round of a game and having to repeat the process from scratch whenever they need to learn another game.

This limitation of RL is one of the biggest challenges developers have to overcome when they are using these principles. Learning one game at a time is a relatively slow process compared to the human ability to adapt already learned skills to a new scenario.

Are We One Step Closer to General AI with XLand?

It would be fair to call general AI the holy grail of artificial intelligence. To date, general AI remains only a concept, and opinions differ as to when the world will reach this stage. Some scientists predict that general AI will be a reality in less than 20 years. Others feel that because of our limited understanding of the human brain, true general AI may be centuries away.

So, what role does XLand play in the process? XLand is breaking the mold of reinforcement learning as we know it. Rather than repeating the same RL process over and over, XLand presents AI agents with new tasks and trains them in a way that encourages them to apply already learned behaviors.
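A simple way to keep presenting agents with tasks that are new but still learnable, sketched here under stated assumptions, is to estimate the agent's win rate on each candidate task and keep only those it neither always wins nor always loses. The `Task` fields, the 10% to 90% band, the 20-episode estimate, and the toy agent are all hypothetical; this illustrates the idea and is not DeepMind's implementation.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Task:
    # Hypothetical task description: which world, how many players, what goal.
    world_id: int
    num_players: int
    goal: str


def estimate_win_rate(play: Callable[[Task], bool], task: Task, episodes: int = 20) -> float:
    """Estimate how often the current agent solves `task` by letting it play several episodes."""
    wins = sum(1 for _ in range(episodes) if play(task))
    return wins / episodes


def filter_tasks(candidates: List[Task], play: Callable[[Task], bool],
                 low: float = 0.1, high: float = 0.9, episodes: int = 20) -> List[Task]:
    """Keep tasks that are neither already mastered (too easy) nor currently hopeless (too hard)."""
    return [t for t in candidates if low <= estimate_win_rate(play, t, episodes) <= high]


def make_toy_agent() -> Callable[[Task], bool]:
    """Hypothetical stand-in agent: always wins solo games, wins every other 2-player game,
    and never wins games with more players."""
    games_played = 0

    def play(task: Task) -> bool:
        nonlocal games_played
        games_played += 1
        if task.num_players == 1:
            return True
        if task.num_players == 2:
            return games_played % 2 == 0
        return False

    return play


if __name__ == "__main__":
    candidates = [Task(0, 1, "find a purple cube"), Task(1, 2, "hide the ball"),
                  Task(2, 4, "hold the pyramid")]
    print(filter_tasks(candidates, make_toy_agent()))
    # [Task(world_id=1, num_players=2, goal='hide the ball')]
```

Filtering by win rate keeps training effort on tasks where the agent can still improve, which is one plausible reading of training agents "in a way that encourages them to apply already learned behaviors" to new problems.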

So far, the results are promising. DeepMind, which developed XLand, has found that this training results in “more generally capable agents.” The researchers have observed general, heuristic behaviors emerging rather than the highly specific behaviors that AI agents normally display for individual tasks. The DeepMind team has also seen agents experiment when they are unsure of the exact solution to a given situation.

At this point, developers still have some way to go before artificial intelligence becomes truly general. However, tools like XLand are bringing us several steps closer to that goal. By changing the way reinforcement learning trains AI players and building more human-like training environments, XLand has the potential to transform AI training and produce far more capable agents.