Introduction: Artificial Intelligence and Image Editing

Artificial intelligence (AI) has revolutionized the field of image editing by introducing advanced features that simplify and automate complex processes, thereby expanding creative possibilities. AI algorithms are capable of understanding image content, recognizing patterns, and making intelligent decisions based on the data they have been trained on. This has resulted in sophisticated tools that can perform tasks ranging from object removal to style transfer, and from image enhancement to the generation of realistic images from scratch.

Object Recognition and Removal

One of the key capabilities of AI in image editing is object recognition. When trained on large datasets of labeled images, AI models can identify and distinguish the various elements within an image. This enables features such as object removal. The AI detects the selected object and erases it. It then fills in the background (a process known as inpainting) so that the result looks as though the object was never there.
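The fill step can be illustrated with a deliberately simple stand-in. The sketch below is not a learned model. It is classical diffusion-based inpainting written in NumPy. It assumes a grayscale float image and a boolean `mask` that marks the object to remove, and `diffusion_inpaint` is a hypothetical helper name. AI tools replace the averaging rule with a trained network that can synthesize texture and structure. The contract stays the same: pixels outside the mask are kept fixed, and pixels inside it are reconstructed from their surroundings.

```python
import numpy as np

def diffusion_inpaint(image: np.ndarray, mask: np.ndarray, iterations: int = 500) -> np.ndarray:
    """Hypothetical helper: fill pixels where mask is True by repeatedly
    averaging their four neighbours (classical diffusion, not a neural network).

    image: 2-D float array (grayscale); mask: same shape, True = pixels to remove.
    """
    result = image.astype(float).copy()
    # Initial guess for the hole: mean of the known (unmasked) pixels.
    result[mask] = result[~mask].mean()
    for _ in range(iterations):
        # Pad with edge values so border pixels also have four neighbours.
        padded = np.pad(result, 1, mode="edge")
        neighbour_avg = (padded[:-2, 1:-1] + padded[2:, 1:-1] +
                         padded[1:-1, :-2] + padded[1:-1, 2:]) / 4.0
        # Only masked pixels are updated; known pixels stay exactly as they were.
        result[mask] = neighbour_avg[mask]
    return result
```

Averaging converges to a smooth fill. That works for smooth regions such as a sky gradient but blurs textures, and learned inpainting models are designed to address exactly that limitation.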

Image Enhancement

AI has also proven effective at automating image enhancement. It can adjust parameters such as brightness, contrast, saturation, and sharpness based on the image's content and lighting conditions. AI can even analyze a photo's composition and suggest cropping or framing adjustments that improve its overall aesthetics. Enhancements of this kind would typically require a trained eye and manual adjustment. AI can now apply them automatically in seconds.
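As a rough, rule-based illustration of content-dependent adjustment, the sketch below derives its contrast and brightness corrections from the image's own statistics rather than from fixed values. It assumes a grayscale float image with values in [0, 1]. The helper name `auto_enhance` and its percentile and target-mean defaults are illustrative choices. A learned enhancer would predict such corrections, and many more, from the image content instead.

```python
import numpy as np

def auto_enhance(image: np.ndarray, low_pct: float = 1.0, high_pct: float = 99.0,
                 target_mean: float = 0.5) -> np.ndarray:
    """Hypothetical helper: percentile contrast stretch followed by a gamma
    correction whose strength depends on the image's own mean brightness."""
    img = image.astype(float)
    lo, hi = np.percentile(img, [low_pct, high_pct])
    if hi > lo:
        # Contrast: map the chosen percentiles to 0 and 1; outliers are clipped below.
        img = (img - lo) / (hi - lo)
    img = np.clip(img, 0.0, 1.0)
    mean = float(img.mean())
    if 0.0 < mean < 1.0:
        # Brightness: gamma chosen so that a pixel at the current mean maps to target_mean.
        gamma = np.log(target_mean) / np.log(mean)
        img = img ** gamma
    return img
```

Because both corrections are computed from the input, a dark, low-contrast photo and a bright, washed-out one receive different adjustments from the same call.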

Style Transfer

Style transfer is another fascinating application of AI in image editing. AI can learn the artistic style of one image (for example, a painting) and apply it to another image (such as a photograph), which produces unique renditions. Doing this by hand would be extremely time-consuming, but AI can accomplish it in seconds.
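Style transfer in the sense popularized by Gatys et al. represents "style" as correlations between CNN feature channels, collected in Gram matrices. These keep which textures co-occur and discard where they appear. The sketch below shows only that style term. In a full pipeline, the features would come from a pretrained network such as VGG. Here, random arrays stand in for them. The names `gram_matrix` and `style_loss`, as well as the normalization, are simplified, illustrative choices.

```python
import numpy as np

def gram_matrix(features: np.ndarray) -> np.ndarray:
    """features: (channels, height, width) activations from one CNN layer."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    # Channel-by-channel correlations; the spatial arrangement is summed away.
    return flat @ flat.T / (h * w)

def style_loss(generated: np.ndarray, style: np.ndarray) -> float:
    """Mean squared difference between the two Gram matrices (simplified scaling)."""
    diff = gram_matrix(generated) - gram_matrix(style)
    return float(np.mean(diff ** 2))
```

Because the Gram matrix ignores spatial layout, shuffling the pixel positions of a feature map leaves its style loss at zero. When an optimizer minimizes this loss together with a content loss, the style term pulls in the painting's textures and colors while the content term preserves the photograph's layout.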

Image Generation

AI has reached a point where it can generate realistic images from textual descriptions or from a combination of existing images. This capability has been demonstrated by tools such as OpenAI’s DALL-E, which can create images of hypothetical creatures or objects that do not exist in reality, based solely on textual prompts.

GANPaint Studio tool

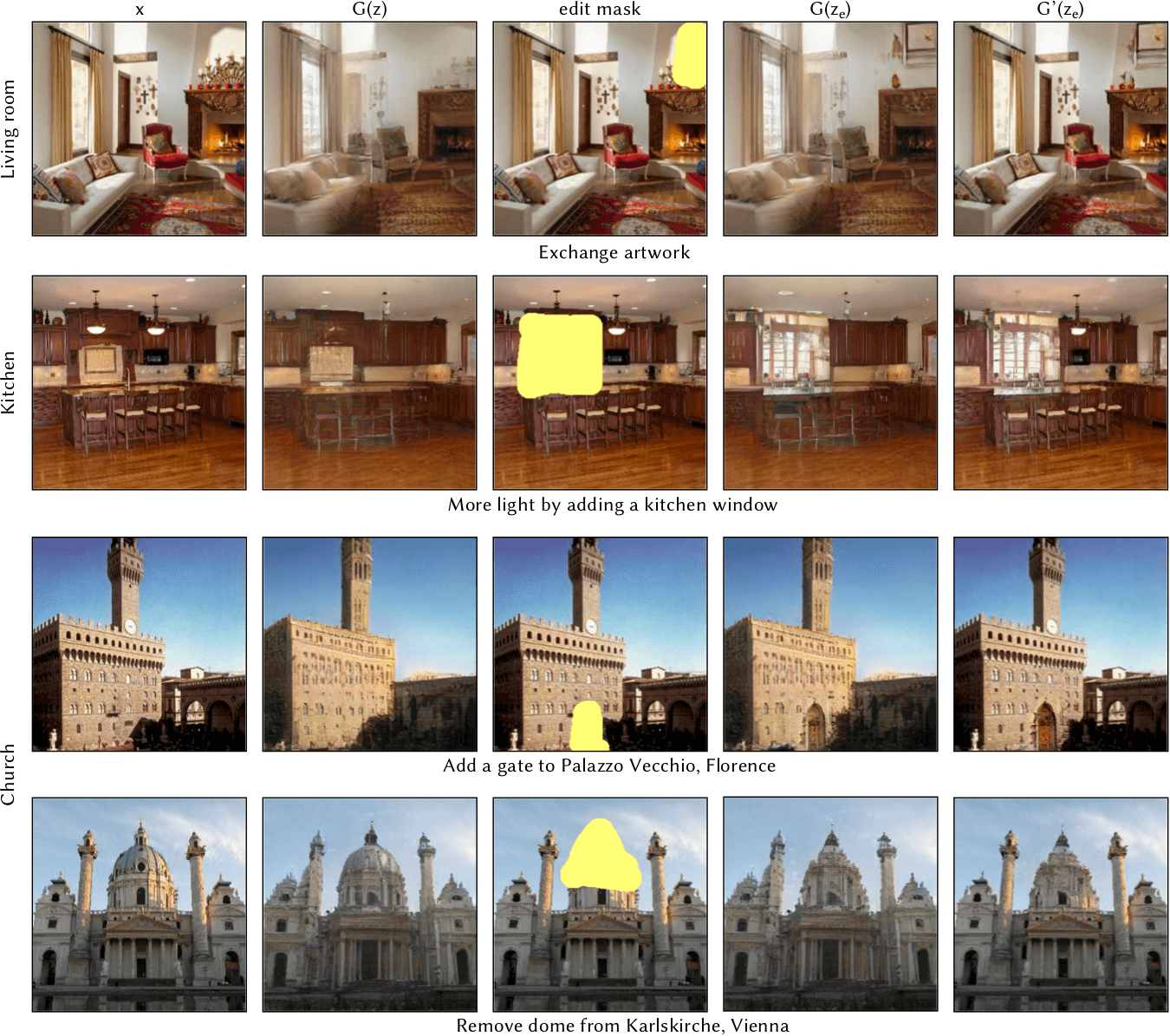

GANPaint Studio shows how far AI-based image editing has come. The tool takes a natural image of a specific category, e.g. churches or kitchens, and lets you modify it with brushes that do more than draw simple strokes. Instead, they draw semantically meaningful units, such as trees, brick texture, or domes. It is a joint project by researchers from MIT CSAIL, IBM Research, and the MIT-IBM Watson AI Lab.

At the core of GANPaint Studio is a generative adversarial network (GAN) that can produce its own images of a certain category, e.g. kitchen images. In previous work (the GANDissect project), the researchers analyzed which internal parts of the network are responsible for producing which features. This allowed them to modify images produced by the network by "drawing" with neurons.
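The dissection idea can be sketched by scoring each unit on how well its strongly activated region overlaps a segmentation of a concept. The function below is a simplified, single-image version that rests on several assumptions. The activations are taken to be already upsampled to the mask's resolution. A unit counts as "on" above a per-unit quantile threshold, and the 0.99 default is an illustrative choice rather than GANDissect's exact procedure. `unit_concept_iou` is a hypothetical name.

```python
import numpy as np

def unit_concept_iou(activations: np.ndarray, concept_mask: np.ndarray,
                     quantile: float = 0.99) -> np.ndarray:
    """Hypothetical helper: intersection-over-union between each unit's
    thresholded activation map and one concept's segmentation mask.

    activations: (units, H, W) feature maps, assumed upsampled to (H, W).
    concept_mask: (H, W) boolean mask of one concept, e.g. "tree".
    Returns one IoU score per unit; high scores mark units tied to the concept.
    """
    scores = np.zeros(activations.shape[0])
    for u, act in enumerate(activations):
        threshold = np.quantile(act, quantile)   # assumed per-unit threshold rule
        active = act > threshold                 # where this unit is "on"
        inter = np.logical_and(active, concept_mask).sum()
        union = np.logical_or(active, concept_mask).sum()
        scores[u] = inter / union if union else 0.0
    return scores
```

Ranking units by this score over many generated images and segmentations yields a map from concepts to the units that produce them.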

What GANPaint Studio adds is that a natural (real) image of the category can now be ingested and modified with semantic brushes that add or remove units such as trees, brick texture, or domes. The demo currently runs at low resolution and is not perfect, but it shows that this kind of editing is possible.
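Once the units associated with a concept are known, a semantic brush can be approximated by editing those units' activations inside the brushed region. The sketch below assumes a hypothetical intermediate feature map of shape (units, H, W). In "remove" mode it zeroes the chosen units. In "add" mode it sets them to a constant, which defaults to each unit's maximum activation as a stand-in. `paint_units` is a hypothetical name. In the real tool, the edited features would then pass through the remaining generator layers, and that step is not shown here.

```python
from typing import Optional, Sequence

import numpy as np

def paint_units(features: np.ndarray, units: Sequence[int], region: np.ndarray,
                mode: str, strength: Optional[float] = None) -> np.ndarray:
    """Hypothetical semantic brush on a (units, H, W) feature map.

    units: indices of units tied to one concept (e.g. found by dissection).
    region: (H, W) boolean brush mask.
    """
    edited = features.copy()  # never modify the caller's activations
    for u in units:
        if mode == "remove":
            edited[u][region] = 0.0  # ablate the concept's units under the brush
        elif mode == "add":
            # Assumed default strength: the unit's strongest activation in this map.
            value = features[u].max() if strength is None else strength
            edited[u][region] = value  # switch the units on under the brush
        else:
            raise ValueError(f"unknown mode: {mode!r}")
    return edited
```

Editing features rather than pixels is what lets a single brush stroke become a coherent tree or dome. The generator's later layers render the requested concept in a way that is consistent with the rest of the scene.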

Try the demo – GANPaint Studio (SIGGRAPH)

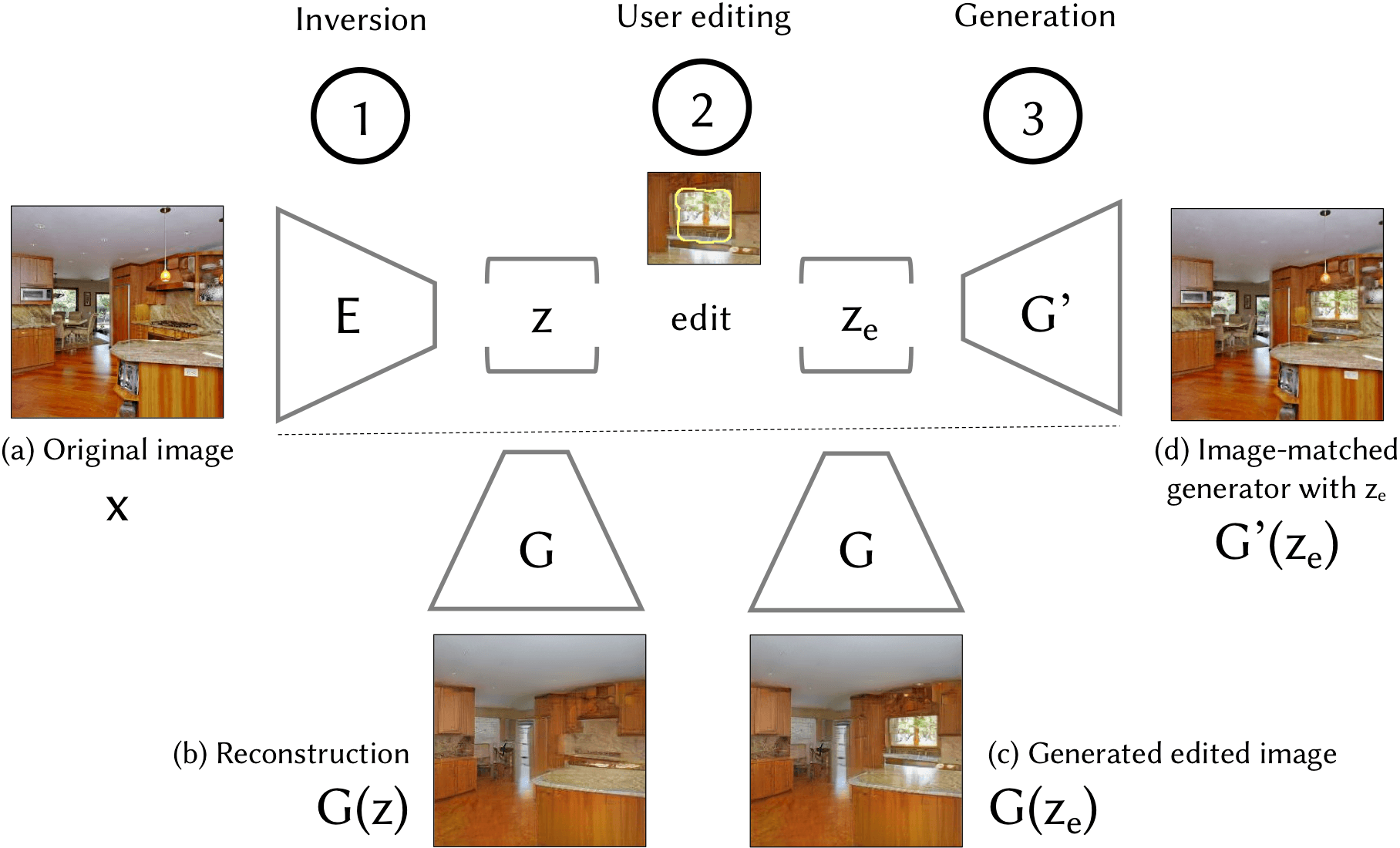

To perform a semantic edit on an image $x$, the method takes three steps. (1) Compute a latent vector $z = E(x)$ representing $x$. (2) Apply a semantic vector-space operation $z_e = \mathrm{edit}(z)$ in the latent space, which can add, remove, or alter a semantic concept in the image. (3) Regenerate the image from the modified $z_e$. Unfortunately, as (b) shows, the generator $G$ usually cannot reproduce the input image $x$ precisely. As a result, (c) using $G$ to create the edited image $G(z_e)$ loses many attributes and details of the original image (a). The authors therefore propose a new final step for generating the image: (d) learn an image-specific generator $G'$ that produces $x'_e = G'(z_e)$, which is faithful to the original image $x$ in the unedited regions. Photo from the LSUN dataset.

(to appear at SIGGRAPH 2019)

David Bau, Hendrik Strobelt, William Peebles, Jonas Wulff, Bolei Zhou, Jun-Yan Zhu, Antonio Torralba
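The steps of the caption, including the image-specific generator of step (d), can be mirrored in a toy setting where everything is linear. This is only an analogy, and it rests on explicit assumptions. `G` is a random linear map from an 8-dimensional latent to a 64-pixel "image", and `E` is a least-squares encoder. The edit direction is random rather than a learned concept. Step (d) is simplified to a per-image additive correction, whereas the paper adapts the generator's layers. All names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
D, K = 64, 8                 # pixels per toy "image", latent dimensions (K < D)
W = rng.normal(size=(D, K))  # weights of the toy linear generator

def G(z: np.ndarray) -> np.ndarray:
    """Toy generator: maps a latent vector to an image."""
    return W @ z

def E(x: np.ndarray) -> np.ndarray:
    """Step 1, toy encoder: least-squares latent whose generated image is closest to x."""
    return np.linalg.lstsq(W, x, rcond=None)[0]

def edit(z: np.ndarray, direction: np.ndarray, alpha: float) -> np.ndarray:
    """Step 2: move along a (hypothetical) concept direction in latent space."""
    return z + alpha * direction

def make_image_specific_generator(x: np.ndarray, z: np.ndarray):
    """Step (d), simplified: add a per-image correction r so that G'(z) == x.
    The paper fine-tunes generator layers instead of using this closed form."""
    r = x - G(z)
    return lambda zz: G(zz) + r

x = rng.normal(size=D)          # a "photo" that G cannot reproduce exactly
z = E(x)
direction = rng.normal(size=K)  # hypothetical concept direction
z_e = edit(z, direction, alpha=0.5)

naive = G(z_e)                  # step 3 with the original G: loses detail of x
G_prime = make_image_specific_generator(x, z)
faithful = G_prime(z_e)         # x'_e = G'(z_e)

print(np.linalg.norm(G(z) - x))                        # > 0: G alone cannot rebuild x
print(np.allclose(faithful - x, 0.5 * W @ direction))  # True: x changes only by the edit
```

Because $G$ has fewer latent dimensions than pixels, $G(z_e)$ drops the part of $x$ that lies outside the generator's range. $G'$ restores that part, so $x'_e$ differs from $x$ only by the intended edit.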

Conclusion

The applications of AI in image editing are vast and continually growing. As AI algorithms become more sophisticated, they will provide more accurate and efficient editing tools. However, it’s important to also recognize the ethical considerations around some of these technologies, especially regarding deepfakes and privacy issues. Looking forward, the goal is to use AI responsibly to enhance our creative capabilities, streamline workflows, and explore new frontiers in image editing.

Citation

```bibtex
@article{Bau:Ganpaint:2019,
  author  = {David Bau and Hendrik Strobelt and William Peebles and
             Jonas Wulff and Bolei Zhou and Jun{-}Yan Zhu and
             Antonio Torralba},
  title   = {Semantic Photo Manipulation with a Generative Image Prior},
  journal = {ACM Transactions on Graphics (Proceedings of ACM SIGGRAPH)},
  volume  = {38},
  number  = {4},
  year    = {2019},
}
```

https://ganpaint.io/