Introduction

DALL·E 2 is an AI art generator created by OpenAI that can generate high-quality images from text descriptions. It is the successor to the original DALL·E model, which OpenAI introduced in January 2021. DALL·E 2 was announced in April 2022.

AI-generated art is a relatively new field that is stretching the boundaries of creativity and disrupting how art is made. Artists can now use advanced machine learning models to generate new visual works, and the results are called AI-generated images. This process can produce unique artwork that has never existed before. Computer-generated art is already appearing in art galleries and even on music album covers.

So what is AI art? AI art is art made using artificial intelligence (AI) image generation software. Artificial intelligence is a field of computer science that focuses on building machines that mimic human intelligence, or even simulate aspects of the human brain, through algorithms. There are many different AI art generators, for example Google DeepDream, WOMBO Dream, NVIDIA GauGAN2, and DALL·E 2. In this article, we will explore DALL·E 2 in more detail.

What is DALL·E 2 AI art generator?

DALL·E 2 is a relatively new AI image generator that can create realistic images from a description typed in by the user. For example, if you wanted it to draw a tree, you could type "a tree as digital art" or a more descriptive prompt. During its early preview DALL·E 2 was free to use, but users are now given a limited number of credits every month for generating art. DALL·E 2 can also edit existing images, again guided by text descriptions written by the user. The results are commonly called DALL·E images.

DALL·E 2 is trained on a massive dataset of text and image pairs, allowing it to generate images that correspond to specific textual prompts. For example, if you give it a prompt like “an armchair in the shape of an avocado,” it will generate an image of an armchair that resembles an avocado.

What sets DALL·E 2 apart from previous AI art generators is its ability to generate complex and detailed images with multiple objects and textures, as well as its ability to manipulate existing images to fit new prompts. This opens up a wide range of possibilities for applications in fields such as design, advertising, and gaming.

This includes the ability to remove and add elements. It can even do this while taking into account textures, reflections, and shadows! It can also create different variations of an existing image. The prompt controls style as well: going back to our tree example, instead of typing "a tree as digital art" we could type "a tree as a cave painting" and get an image of a tree in a different style. With this program almost anyone can create art; the only limit is our imagination. Next, let's look more closely at how DALL·E 2 actually works.

How does DALL·E 2 work?

DALL·E 2 seems groundbreaking, but how does it actually work? Let's look at image generation from a high level, since that is essentially what it does. First, a text encoder takes the user's text prompt and maps it into a representation space; the encoder is trained to perform this mapping. Next, a model called the prior maps that text encoding to a corresponding image encoding, one that captures the semantics of the prompt. Finally, an image decoder generates an image that is the visual manifestation of this semantic information. There is a bit more to it, but to understand the rest we first have to look at another OpenAI model known as CLIP.
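The three-stage flow described above can be sketched as a chain of functions. Everything in this sketch is hypothetical: `text_encoder`, `prior`, `decoder`, and `EMBED_DIM` are toy stand-ins (a word-hashing encoder, a fixed coordinate shift, and a grid expansion). They only show how data moves from prompt to text embedding to image embedding to pixels. In DALL·E 2 each stage is a large trained neural network.

```python
import hashlib
import math

EMBED_DIM = 8  # toy embedding size (assumption); real models use far larger vectors


def text_encoder(prompt: str) -> list[float]:
    """Toy stand-in for the text encoder: map a prompt to a unit-length vector."""
    vec = [0.0] * EMBED_DIM
    for word in prompt.lower().split():
        # Hash each word to a stable bucket so the same prompt always gives the same vector.
        bucket = int(hashlib.sha256(word.encode()).hexdigest(), 16) % EMBED_DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0  # avoid dividing by zero on empty prompts
    return [v / norm for v in vec]


def prior(text_embedding: list[float]) -> list[float]:
    """Toy stand-in for the prior: turn a text embedding into an 'image embedding'.
    Here it just shifts the coordinates; in DALL·E 2 it is a learned model."""
    return text_embedding[1:] + text_embedding[:1]


def decoder(image_embedding: list[float], size: int = 4) -> list[list[float]]:
    """Toy stand-in for the image decoder: expand the embedding into a size x size grid."""
    return [[image_embedding[(r * size + c) % EMBED_DIM] for c in range(size)]
            for r in range(size)]


def generate(prompt: str) -> list[list[float]]:
    # prompt -> text embedding -> image embedding -> "pixels"
    return decoder(prior(text_encoder(prompt)))


image = generate("a tree as digital art")
for row in image:
    print(" ".join(f"{v:.2f}" for v in row))
```

The point of the separation is that each stage can be trained and swapped independently: the prior only has to learn how to move between the two embedding spaces, and the decoder only has to learn how to turn an image embedding into pixels.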

Conditional Image Generation with CLIP Latents

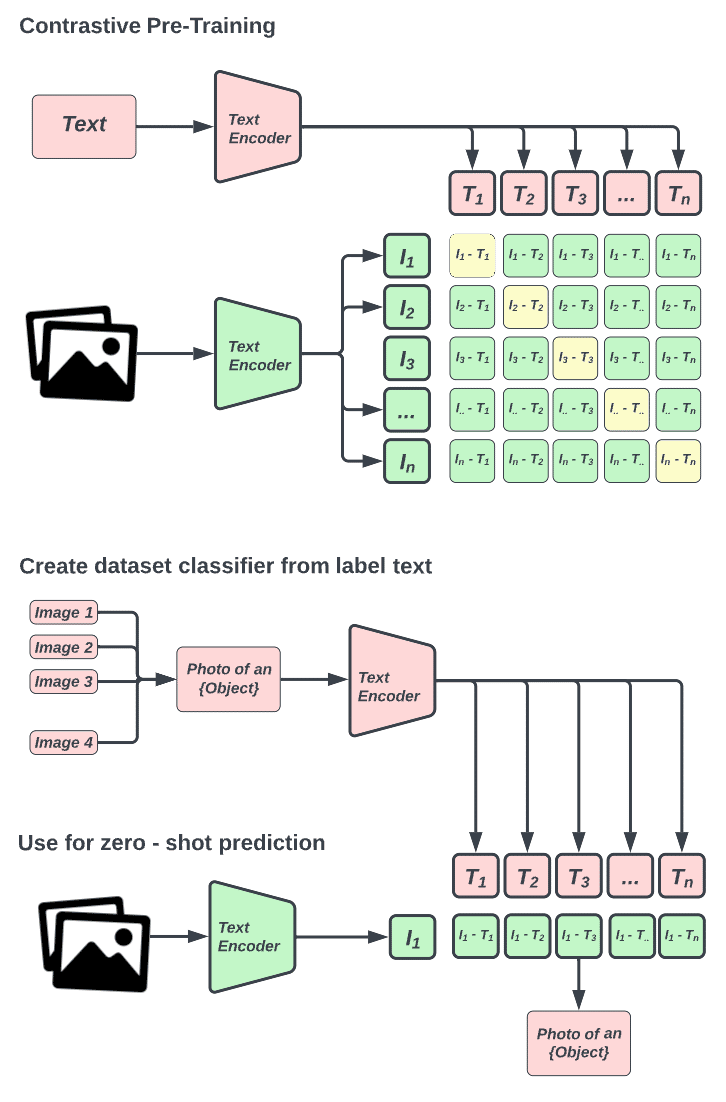

The entire DALL·E 2 model hinges on an OpenAI model called CLIP (Contrastive Language-Image Pre-training). CLIP learns visual concepts from natural language, so let's take a look at how it is trained at a high level. First, a batch of N images and their N captions are passed through an image encoder and a text encoder, and every image and caption is mapped into a shared m-dimensional space. Next, the cosine similarity of every image-caption pair in the batch is computed. Finally, the training objective is to maximize the cosine similarity of the N correct pairs while minimizing the cosine similarity of the N² − N incorrect pairs. CLIP is important to DALL·E 2 because it determines how semantically related a piece of natural language is to a visual concept, which is critical for text-conditional image generation. Keep in mind that CLIP is only one component of DALL·E 2; the prior and the image decoder do the actual image generation. Now that we have an idea of how DALL·E 2 generates images, let's compare it to the original DALL·E and see why it is better.
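To make the training objective concrete, here is a minimal sketch of a CLIP-style symmetric contrastive loss in plain Python. It is modeled on the pseudocode in the CLIP paper, not on OpenAI's training code. The N × N matrix holds the cosine similarity of every image with every caption. A softmax cross-entropy over each row and each column rewards the N matching pairs on the diagonal and penalizes the N² − N mismatched pairs. The function names and the toy 3-dimensional embeddings are hypothetical. The temperature of 0.07 is an assumed starting value; CLIP learns this parameter during training.

```python
import math


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def cosine_matrix(image_embs, text_embs):
    """N x N matrix of cosine similarities: entry [i][j] compares image i with caption j."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    return [[sum(a * b for a, b in zip(i, t)) for t in txts] for i in imgs]


def cross_entropy(logits_row, target):
    """Softmax cross-entropy for one row; `target` is the index of the correct pair."""
    m = max(logits_row)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits_row))
    return log_sum - logits_row[target]


def clip_loss(image_embs, text_embs, temperature=0.07):
    sims = cosine_matrix(image_embs, text_embs)
    n = len(sims)
    logits = [[s / temperature for s in row] for row in sims]
    # Image -> text: each image (row) should pick out its own caption on the diagonal.
    loss_i = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text -> image: the same idea applied to the columns.
    cols = [[logits[r][c] for r in range(n)] for c in range(n)]
    loss_t = sum(cross_entropy(cols[j], j) for j in range(n)) / n
    return (loss_i + loss_t) / 2


images = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
captions_matched = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]
captions_shuffled = [captions_matched[1], captions_matched[2], captions_matched[0]]
print(clip_loss(images, captions_matched) < clip_loss(images, captions_shuffled))  # True
```

Correctly paired embeddings produce a lower loss than shuffled ones. Lowering this loss during training therefore pulls matching images and captions together in the shared space and pushes mismatched ones apart.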

Why DALL·E 2 over DALL·E 1?

OpenAI introduced DALL·E 1 in 2021, and just one year later, in 2022, it released DALL·E 2. Both generate images from natural language descriptions, but what makes DALL·E 2 better? Let's look at their differences, starting with how well the images match the text. DALL·E 1 generated art from a prompt by producing many candidate images and then using CLIP to select the most appropriate ones.
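The original DALL·E announcement describes reranking generated samples with CLIP. The following sketch assumes the candidate images have already been embedded into the same space as the prompt, and it keeps the candidates with the highest cosine similarity. The random vectors are hypothetical stand-ins for real image embeddings. The function names and the candidate count are illustrative only.

```python
import math
import random


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def rerank(prompt_embedding, candidate_embeddings, top_k=1):
    """Return the indices of the top_k candidates most similar to the prompt."""
    ranked = sorted(range(len(candidate_embeddings)),
                    key=lambda i: cosine(prompt_embedding, candidate_embeddings[i]),
                    reverse=True)
    return ranked[:top_k]


rng = random.Random(0)
prompt_emb = [1.0, 0.5, 0.0, 0.2]
# Hypothetical stand-in for "sample many images and embed each one with CLIP".
candidates = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(512)]
best = rerank(prompt_emb, candidates, top_k=4)
print(best)
```

Every kept image first has to be generated and scored, and most candidates are thrown away.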

Generating many candidates and picking the best ones this way was slow. DALL·E 2, on the other hand, learns the link between visuals and the text that describes them, which makes its images more coherent. It uses a process known as diffusion, which starts from random noise and gradually refines it into a picture. In practice, DALL·E 2 can return several variations within seconds. Another reason to prefer DALL·E 2 over DALL·E 1 is image quality. DALL·E 1 tended to render a subject in one particular way, usually on a simple background, and its images were relatively low resolution (256 × 256 pixels). DALL·E 2 produces more photorealistic and detailed images at a higher resolution (1024 × 1024 pixels). Finally, DALL·E 2 can produce multiple variations of the same image, a feature DALL·E 1 does not have. Now that we know how impressive DALL·E 2's output can be, let's look at how to prevent it from being misused for malicious purposes.
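Diffusion sampling can be sketched as a loop that starts from random noise and removes a little of it at each step. The code below is a generic DDPM-style sampler, the formulation from Ho et al. (2020). It is not DALL·E 2's actual decoder. To keep it self-contained, the trained denoising network is replaced by a hypothetical "oracle" that already knows the clean target and returns the exact noise. The linear noise schedule and the 50 steps are assumed toy values.

```python
import math
import random

T = 50  # number of diffusion steps (toy value)
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]  # linear noise schedule
alphas = [1.0 - b for b in betas]
alpha_bars = []  # cumulative products of the alphas
prod = 1.0
for a in alphas:
    prod *= a
    alpha_bars.append(prod)


def oracle_noise_predictor(x_t, t, target):
    """Hypothetical stand-in for the trained denoising network.
    It 'cheats' by knowing the clean target, so it returns the exact noise in x_t."""
    ab = alpha_bars[t]
    return [(xt - math.sqrt(ab) * x0) / math.sqrt(1.0 - ab) for xt, x0 in zip(x_t, target)]


def sample(target, seed=0):
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure noise
    for t in reversed(range(T)):
        eps = oracle_noise_predictor(x, t, target)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        # Remove the predicted noise to get the mean of the slightly-less-noisy image.
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            sigma = math.sqrt(betas[t])  # a simple choice of per-step noise
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # no noise is added on the final step
    return x


target_pixels = [0.2, -0.5, 0.9, 0.0]  # a toy 4-pixel "image"
print([round(v, 3) for v in sample(target_pixels)])
```

Because the oracle is perfect, the loop recovers the target exactly. With a real model, the noise predictor is a neural network conditioned on the step and, in DALL·E 2's case, on the image embedding. The output is then a new image rather than a copy of a known one.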

Preventing Harmful AI Art Generations and Curbing Misuse

The biggest problem at the moment with AI-generated content is that anyone can use these tools, which can lead to harm. Although DALL·E 2 has been embraced by AI artists, many traditional artists are not happy with it. Some AI art generators, particularly openly released models such as Stable Diffusion that users can run on their own hardware, can be used to create violent images, adult images, and imitations of copyrighted images, because their safety filters can be removed. Once someone has such a model running and begins producing AI-generated content, there are few technical constraints on what it can be used for. Since the technology is still new, the consequences of machine-generated content are still unclear.

Ultimately, though, it is up to people whether they use it morally. It is easy to imagine DALL·E 2 and other art generators being used to generate fake propaganda, spam, and explicit content involving public figures. How, then, do we prevent harm from deepfakes like these? At the moment, the most a company offering an AI art generator can do is filter the model so that it does not produce harmful images, and publish content policies on what can and cannot be created.

Companies can also set up human monitoring to review what users are creating. However, one thing AI art generators do not filter out is imitation of existing art. They can currently mimic the style and aesthetics of other artists, which many artists regard as a breach of copyright and ethics. If artists object to the misuse of art generators, companies seem unlikely to offer solutions, since they can simply shift the blame onto the users of their products.

Conclusion

In spite of its possible misuses, machine-generated content is a big step forward for artists looking to make AI-created images. It could also get more people interested in art, since they will be able to create it easily. DALL·E 2 also showcases the power of diffusion models in deep learning. It gives people a lot of power, but how they use that power is up to them. Thank you for reading this article.
