What is Moravec’s Paradox?

In the modern world, technology amazes us with its ability to complete increasingly high-level tasks. We have built into machines a logical and mathematical rigor that seems to surpass our own limitations. Despite this, modern AI still struggles with tasks that infants manage without trouble. This is the basis for Moravec’s paradox. Moravec’s own statement of it, from his 1988 book Mind Children, is below:

“Encoded in the large, highly evolved sensory and motor portions of the human brain is a billion years of experience about the nature of the world and how to survive in it. The deliberate process we call reasoning is, I believe, the thinnest veneer of human thought, effective only because it is supported by this much older and much more powerful, though usually unconscious, sensorimotor knowledge. We are all prodigious olympians in perceptual and motor areas, so good that we make the difficult look easy. Abstract thought, though, is a new trick, perhaps less than 100 thousand years old. We have not yet mastered it. It is not all that intrinsically difficult; it just seems so when we do it.”

Why are Simple Tasks Hard for AI?

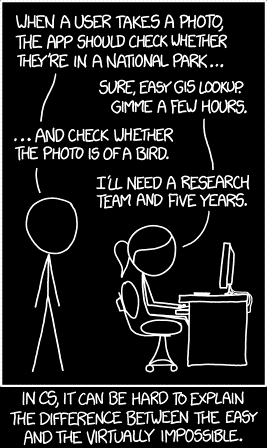

The short answer is that the human brain is the product of millions of years of evolution, whereas AI as a field is only a few decades old. Tasks that we find difficult, like calculating the trajectory of a rocket ship or decoding cryptic messages, are difficult only because they are relatively new in the grand scheme of evolution. The skills we already have, like consciousness, perception, visual acuity, and emotion, were acquired through evolution and come to us so naturally that we do not have to think about them. The challenge is this: how do we teach a machine the things that we do not even think about?

For example, even a simple task such as playing catch involves many considerations. A robot would need sensors, transmitters, and effectors. It would need to estimate the distance between itself and its companion and account for sun glare, wind speed, and nearby distractions. It would need to decide how firmly to grip the ball and when to squeeze the mitt during a catch. It would also need to consider several what-if scenarios: What if the ball goes over my head? What if it hits the neighbor’s window? The amount of code to write and execute would be enormous.
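To make the contrast concrete, consider the one part of playing catch that looks like "hard" math to a person: working out where the ball will come down. The sketch below is a minimal illustration, not a robotics implementation. It assumes an idealized ball with no air resistance or wind, thrown and caught at the same height, and the function names `landing_distance` and `flight_time` are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2 (assumed constant)


def landing_distance(speed_mps: float, angle_deg: float) -> float:
    """Horizontal distance covered by an idealized ball.

    Assumes no drag, no wind, and that the ball is thrown and
    caught at the same height.
    """
    angle_rad = math.radians(angle_deg)
    return speed_mps ** 2 * math.sin(2 * angle_rad) / G


def flight_time(speed_mps: float, angle_deg: float) -> float:
    """Time the idealized ball spends in the air, in seconds."""
    angle_rad = math.radians(angle_deg)
    return 2 * speed_mps * math.sin(angle_rad) / G


if __name__ == "__main__":
    distance = landing_distance(15.0, 45.0)
    seconds = flight_time(15.0, 45.0)
    print(f"Ball lands about {distance:.1f} m away after {seconds:.2f} s")
```

The physics fits in a few lines. Everything the code leaves out, such as spotting the ball against a bright sky, judging its speed from a camera image, and closing the mitt at the right moment, is where the real difficulty lies.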

AI still has a long way to go to match human ingenuity. Moravec’s claim still holds sway today. AI can accomplish much, but the effort required to teach an AI may be considerably more than we instinctively anticipate.

The Hard Problems That Are Easy

Moravec’s Paradox, named after AI and robotics researcher Hans Moravec, offers a fascinating insight into the nature of intelligence and the complexities associated with replicating human skills in machines. This paradox highlights that tasks we deem simple, predominantly sensorimotor skills such as recognizing a face in a crowd (facial recognition) or catching a ball, are notoriously difficult for machines to master. Conversely, tasks that we consider complex, such as high-level mental reasoning or computation, are often relatively straightforward for machine intelligence.

Artificial intelligence has seen remarkable progress in areas like natural language processing and high-level mental reasoning tasks, where significant computational resources can be applied to solve problems or make predictions. AI can process and analyze vast amounts of data faster and more accurately than a human can. However, when it comes to replicating intuitive, physical tasks requiring adaptability, machines struggle. These sensorimotor skills, fine-tuned by millions of years of evolution and learning, pose a formidable challenge for AI, which has yet to master the adaptive learning and nuanced interpretation necessary for these tasks.

Examples of skills that highlight the paradox include activities that require a combination of fine motor control, spatial awareness, and real-time decision-making, such as driving a car in heavy traffic or cleaning a cluttered room. While AI can perform computationally demanding tasks like playing chess, translating languages, or predicting weather patterns, it grapples with the seemingly simple task of understanding and navigating the physical world as humans do. Moravec’s Paradox thus draws attention to the complexities and intricacies inherent in what we consider ‘simple’ human tasks, reminding us that the path to creating truly ‘intelligent’ machines demands a comprehensive understanding of both the physical and cognitive aspects of human intelligence.

The Easy Problems That Are Hard

At first glance, one might assume that replicating human intelligence is a matter of emulating the high-level cognitive functions that we associate with intelligent behavior. However, Moravec’s Paradox posits a captivating twist: while machines excel at complex tasks such as mathematical computations or playing chess, they falter at what natural selection has made elementary for humans, such as basic perception and sensory skills. These seemingly simple abilities are the result of millions of years of evolution, and they are deeply ingrained in our neural architecture. For machines, these tasks represent an entirely different kind of complexity that is not easily solved with traditional algorithmic approaches.

Deep learning and neural networks, subfields of machine learning, have emerged as promising techniques to address some of the challenges outlined by Moravec’s Paradox. By mimicking the structure and function of the human brain to a certain extent, neural networks can learn from experience and improve their performance on tasks such as object recognition. This approach is more aligned with how humans develop perception skills, but it still requires an enormous amount of data and computational power to come close to human performance in certain respects. Despite the progress, current AI systems still struggle with tasks that require a high degree of adaptability and generalization, which are innate to humans.
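The idea of learning from experience can be illustrated with the simplest possible case. The sketch below trains a single artificial neuron (a classic perceptron, far simpler than the deep networks described above) on the logical AND function. The function names, the learning rate, and the number of training passes are illustrative assumptions. The neuron starts with no knowledge and adjusts its weights only when it makes a mistake.

```python
# Training examples: two binary inputs and the desired output (logical AND).
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, inputs):
    """Return 1 if the weighted sum of the inputs is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=1.0):
    """Perceptron learning rule: nudge the weights after every wrong answer."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            # When error is 0 the prediction was right and nothing changes.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


if __name__ == "__main__":
    weights, bias = train(EXAMPLES)
    for inputs, target in EXAMPLES:
        print(inputs, "->", predict(weights, bias, inputs), "(target:", target, ")")
```

With four examples and two inputs, a handful of passes is enough. Recognizing objects in photographs involves millions of inputs and examples, which is why the data and computing requirements grow so steeply.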

Moravec’s Paradox makes clear that the path to creating intelligent machines is not just about outperforming humans in complex cognitive tasks but also entails mastering the seemingly simple tasks that are second nature to humans due to evolution. The ability of AI to seamlessly integrate perception and sensory skills with high-level reasoning is vital for the development of truly intelligent machines. This realization continues to shape the research in AI, driving a multidisciplinary approach that draws from neuroscience, cognitive science, and computer science to achieve a more holistic understanding of intelligence and to create machines that can genuinely mirror human capabilities.

Narrow And General Artificial Intelligence

Moravec’s Paradox highlights an intriguing dichotomy between narrow and general artificial intelligence. Narrow AI, or weak AI, excels in specific tasks, often completing them far faster than any human could. These technological advancements have yielded AI-powered tools capable of performing complex operations at exceptional speed and scale, from advanced statistics to image recognition. For example, an AI can scan thousands of images and identify specific objects with a level of accuracy and speed that no human could achieve. Abstract thinking tasks, such as logical reasoning or high-level statistical analysis, are also areas where AI shines, as these tasks can be performed much more efficiently by machines than by the human mind.

However, general AI, or strong AI, which is expected to understand, learn, adapt, and implement knowledge across a broad range of tasks, remains largely elusive. Despite advanced technology, AI still struggles with many simple things humans take for granted. Understanding facial expressions, for instance, is something that humans do intuitively, yet it’s an incredibly complex task for an AI. Similarly, self-driving cars, while showcasing tremendous progress, are not fully autonomous, primarily because of their inability to replicate the wide range of simple processes that a human driver performs instinctively, like predicting the behavior of pedestrians or responding to unexpected road conditions.

Moravec’s Paradox underlines an essential point in the discussion of artificial intelligence, robots, job losses, and the future of work. It’s easier for AI to automate tasks involving high-level reasoning or analysis than it is to automate tasks involving basic sensory or motor skills. While AI continues to advance at a rapid pace, it’s important to remember that certain aspects of human intelligence remain uniquely human, at least for now. Moravec’s Paradox serves as a humbling reminder that the path to achieving general AI is not just about high-level reasoning or computation but also involves mastering the simple, intuitive tasks that we humans often take for granted.

Are We Close to Breaking Through Moravec’s Paradox?

What AI cannot do right now is go beyond the parameters of what it has learned. Humans, on the other hand, can use their imagination to dream up new possibilities. Creative tasks such as telling a joke or writing an original story have long been considered out of AI’s reach. But are they really that far off?

In 2016, AlphaGo, a program developed by Google-owned DeepMind Technologies, defeated world Go champion Lee Sedol. This reinforcement learning AI devised new strategies for the centuries-old game that earned the respect of many masters. In 2020, OpenAI released GPT-3, a revolutionary NLP model with a greater grasp of the nuances and context of language. Building on it, OpenAI introduced DALL-E in 2021, which generates never-before-seen art from any supplied text.

Though these feats are impressive, they do not necessarily mean that sentient AI is around the corner. Humans’ innate skills are valuable assets that are not easily transferable, and humans will always remain relevant. However, we may be closer to a breakthrough than Moravec could have ever guessed.

Conclusion

In our exploration of Moravec’s Paradox, we’ve delved into the curious contradiction observed by AI and robotics researchers – that machines find the “easy” things hard, and the “hard” things easy. As articulated by Hans Moravec and his contemporaries, this paradox reveals an important insight into the evolution of both human intelligence and artificial intelligence. It illustrates how we, as humans, often take for granted the complexity of our motor skills and perception, the very tasks AI finds challenging to master, while cognitive tasks we consider complex are relatively easy for AI.

The implications of Moravec’s Paradox in the field of AI are profound. It underlines the current limitations of AI and robotics in replicating human-like perception and motor skills, and thus guides researchers towards a more holistic approach in developing intelligent systems. It reminds us that to achieve human-level AI, we must not only focus on high-level cognitive tasks but also on what seem to be simple, mundane tasks, such as recognizing a face in a crowd or picking up a delicate object.

However, it’s important to remember that this doesn’t make AI any less valuable or impactful. Even as AI struggles with tasks we find easy, it’s revolutionizing industries, making groundbreaking discoveries, and improving lives in ways we couldn’t have imagined a few decades ago.

Moravec’s Paradox provides an intriguing perspective on the development of AI. It pushes us to rethink our assumptions about intelligence and challenges us to broaden our approach to creating machines that can genuinely replicate the full spectrum of human abilities. As we venture further into the future of AI, this paradox serves as a compass, guiding us towards a more nuanced and comprehensive understanding of both human cognition and artificial intelligence.

References

Frana, Philip L., and Michael J. Klein. Encyclopedia of Artificial Intelligence: The Past, Present, and Future of AI. ABC-CLIO, 2021.

Kirchschlaeger, Peter G. Digital Transformation and Ethics: Ethical Considerations on the Robotization and Automation of Society and the Economy and the Use of Artificial Intelligence. Nomos Verlag, 2021.

Moravec, Hans. Mind Children: The Future of Robot and Human Intelligence. Harvard University Press, 1988.