Introduction

Machine learning has made inroads into many industries, such as finance, healthcare, retail, autonomous driving, and transportation. Machine learning gives computers the ability to learn without being explicitly programmed, which allows them to make accurate predictions based on patterns in data. In the machine learning process, data is fed to a model (an algorithm), which identifies patterns in the data and makes predictions. During training, the model makes predictions on training data, and its parameters are adjusted until it reaches the desired accuracy. New data is then fed into the model to check whether it achieves that accuracy on examples it has not seen, and the model is retrained until it produces the desired outcome.

An adversarial machine learning attack is a technique that attempts to fool deep learning models with false or deceptive data, with the goal of causing the model to make inaccurate predictions. The adversary's objective is to make the model malfunction.

Table of contents

- Introduction

- Adversarial Attacks in Machine Learning and How to Defend Against Them

- Types of Adversarial Attacks

- How are Adversarial Examples Generated?

- Adversarial Perturbation

- Black Box VS White Box Attacks

- Examples of Black Box Attacks

- Physical Attacks

- Out of Distribution (OOD) Attack

- How Can We Trust Machine Learning?

- How do we defend against adversarial attacks

- Denoising Ensembles

- Verification Ensemble

- Diversity

- Conduct Adversarial Training

- Assess Risk

- Verify Data

- Conclusion

- References

Adversarial Attacks in Machine Learning and How to Defend Against Them

The success of machine learning is largely attributed to big datasets being fed to classifiers (models) that learn to make predictions. You train a classifier by minimizing a loss function that measures the errors it makes on this data: you adjust the classifier's parameters so that the error of its predictions on the training data goes down.

Adversarial attacks exploit the same underlying learning mechanism, but instead of minimizing the error, they aim to maximize it on specific inputs. Such attacks become possible when inaccurate or misrepresentative data is used during training, or when maliciously crafted data is fed to an already trained model.
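A minimal sketch of this symmetry, assuming a toy logistic-regression classifier written in plain Python (the weights, the single example, and the step size of 0.1 are arbitrary illustrative values): training and attacking use the same gradient computation, but training moves the parameters to lower the loss, while the attack freezes the parameters and moves the input to raise it.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def loss(w, b, x, y):
    """Binary cross-entropy of one example with label y in {0, 1}."""
    p = predict(w, b, x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def gradients(w, b, x, y):
    """Gradients of the loss w.r.t. the weights, the bias, and the input."""
    err = predict(w, b, x) - y
    return [err * xi for xi in x], err, [err * wi for wi in w]

x, y = [1.0, 2.0], 1                 # one training example
w, b, lr = [0.5, -0.25], 0.0, 0.1    # hypothetical current parameters and step size

grad_w, grad_b, grad_x = gradients(w, b, x, y)

# Training: move the PARAMETERS against the gradient to lower the loss.
w_trained = [wi - lr * g for wi, g in zip(w, grad_w)]
b_trained = b - lr * grad_b

# Attack: freeze the parameters and move the INPUT along the gradient to raise the loss.
x_adv = [xi + lr * g for xi, g in zip(x, grad_x)]

print(loss(w, b, x, y), loss(w_trained, b_trained, x, y), loss(w, b, x_adv, y))
```

The three printed losses go down after the training step and up after the attack step, even though both steps came from one call to `gradients`.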

To get an idea of how quickly adversarial attacks have gained prominence: in 2014 there were almost no papers on adversarial attacks on the preprint server arXiv.org, whereas today there are more than 1,000 research papers on adversarial attacks and adversarial examples. In 2013, researchers from Google and NYU published a paper titled “Intriguing properties of neural networks,” which demonstrated the essence of adversarial attacks on neural networks.

Adversarial attacks, and techniques for defending against them, have become common themes at conferences including Black Hat, DEF CON, and ICLR.


Types of Adversarial Attacks

Adversarial attack vectors can take several forms.

Evasion – As the name suggests, evasion attacks are designed to avoid detection and are carried out against models that are already trained. The adversary introduces data intended to deceive the trained model into making errors. This is one of the most prevalent types of attack.

Poisoning – These attacks are carried out during the training phase. The adversary supplies contaminated (misrepresentative or inaccurate) training data, causing the model to learn to make wrong predictions.

Model extraction – Here the adversary interacts with a model deployed in production and tries to reconstruct a local copy of it: a substitute model that is 99.9% in agreement with the production model. This means the copy is essentially identical to the original for most practical tasks. This type of attack is also called model stealing.
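A minimal sketch of model extraction, assuming the production model is a hidden linear classifier exposed through a hypothetical label-only API `target_predict`: the attacker sends 500 chosen queries, fits a logistic-regression substitute on the returned labels, and measures how often the copy agrees with the target on fresh inputs. Real extraction attacks against deep networks need far more queries and a more expressive substitute.

```python
import math
import random

random.seed(0)

# Hypothetical "production" model: the attacker never sees these values.
_SECRET_W, _SECRET_B = [2.0, -1.0], 0.3

def target_predict(x):
    """Black-box API: returns only a class label (0 or 1)."""
    return int(_SECRET_W[0] * x[0] + _SECRET_W[1] * x[1] + _SECRET_B > 0)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# 1. Query the API with attacker-chosen inputs and record the labels it returns.
queries = [[random.uniform(-1, 1), random.uniform(-1, 1)] for _ in range(500)]
labels = [target_predict(x) for x in queries]

# 2. Fit a local substitute (logistic regression, full-batch gradient descent).
w, b, lr, n = [0.0, 0.0], 0.0, 1.0, len(queries)
for _ in range(300):
    gw, gb = [0.0, 0.0], 0.0
    for x, y in zip(queries, labels):
        err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y
        gw[0] += err * x[0]
        gw[1] += err * x[1]
        gb += err
    w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
    b -= lr * gb / n

def substitute_predict(x):
    return int(w[0] * x[0] + w[1] * x[1] + b > 0)

# 3. Measure agreement between the copy and the target on inputs it was not fitted on.
fresh = [[random.uniform(-1, 1), random.uniform(-1, 1)] for _ in range(1000)]
agreement = sum(target_predict(x) == substitute_predict(x) for x in fresh) / len(fresh)
print(f"agreement: {agreement:.3f}")
```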

How are Adversarial Examples Generated?

Machine learning uses two main types of techniques: supervised learning, which trains a model on known input and output data so that it can predict future outputs, and unsupervised learning, which finds hidden patterns or intrinsic structures in input data. At the core of training is a loss function, which measures how well the model predicts the expected outcome or value.

Loss is the penalty for a bad prediction. That is, loss is a number indicating how bad the model’s prediction was on a single example. If the model’s prediction is perfect, the loss is zero; otherwise, the loss is greater. The goal of training a model is to find a set of weights and biases that have low loss, on average.
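To make this concrete, the sketch below uses cross-entropy, one common loss for classification (the article does not name a specific loss), on three made-up predictions: the loss is zero for a perfect prediction, grows as the probability assigned to the true class shrinks, and training tries to minimize the average.

```python
import math

def cross_entropy(probs, true_class):
    """Loss for one example: the negative log-probability of the true class."""
    return math.log(1.0 / probs[true_class])

# (predicted class probabilities, true class) for three illustrative examples
examples = [
    ([1.0, 0.0, 0.0], 0),  # perfect prediction: loss is zero
    ([0.7, 0.2, 0.1], 0),  # mostly right: small loss
    ([0.1, 0.8, 0.1], 0),  # confidently wrong: large loss
]
losses = [cross_entropy(p, y) for p, y in examples]
average_loss = sum(losses) / len(losses)  # the quantity training drives down
print([round(v, 3) for v in losses], round(average_loss, 3))
```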

In adversarial attacks, the adversary tricks the system either by contaminating the input data or by pushing the prediction away from the original, expected one. Attacks can be classified as targeted or untargeted. In a targeted attack, noise is intentionally introduced into the input to make the model output a specific incorrect prediction chosen by the adversary. In an untargeted attack, the adversary simply tries to find any input that makes the model produce a wrong prediction, regardless of which one.
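The difference between the two goals shows up in which loss the attacker follows. This sketch assumes a hypothetical three-class linear classifier and a step size of 0.5: the untargeted attack takes one step that increases the loss of the true class, while the targeted attack repeatedly steps to decrease the loss of a chosen target class until the model outputs that class.

```python
import math

# Hypothetical 3-class linear classifier: logits = W x (no bias).
W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]

def logits(x):
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in W]

def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def predict(x):
    z = logits(x)
    return z.index(max(z))

def input_grad(x, label):
    """Gradient of the cross-entropy loss for `label` with respect to the input x."""
    p = softmax(logits(x))
    coeffs = [pk - (1.0 if k == label else 0.0) for k, pk in enumerate(p)]
    return [sum(c * W[k][j] for k, c in enumerate(coeffs)) for j in range(len(x))]

def sign(v):
    return [(g > 0) - (g < 0) for g in v]

x, true_label, eps = [1.0, 0.5], 0, 0.5

# Untargeted: one step that INCREASES the loss of the true class; any wrong label counts.
x_untargeted = [xi + eps * s for xi, s in zip(x, sign(input_grad(x, true_label)))]

# Targeted: repeated steps that DECREASE the loss of the chosen target class.
target, x_targeted = 2, list(x)
for _ in range(10):
    if predict(x_targeted) == target:
        break
    x_targeted = [xi - eps * s for xi, s in zip(x_targeted, sign(input_grad(x_targeted, target)))]

print(predict(x), predict(x_untargeted), predict(x_targeted))  # original, untargeted, targeted
```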

Here are some examples:

- Adding just a few pieces of tape can trick a self-driving car into misclassifying a stop sign as a speed limit sign. The first image on the left is the original image, which is converted into an adversarial sample by applying tape.

- Researchers at Harvard were able to fool a medical imaging system into classifying a benign mole as malignant with 100% confidence.

- In a speech-to-text transcription neural network, adding a small perturbation to the original waveform caused it to be transcribed as any phrase the adversary chose.

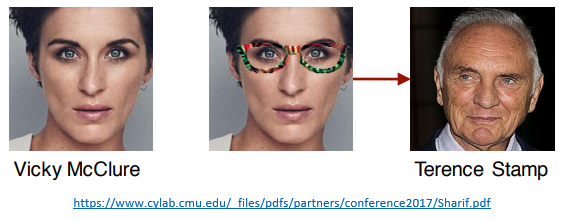

- Deep neural networks for face recognition have been attacked with carefully fabricated eyeglass frames.

The existence of these adversarial examples means that systems incorporating deep neural network models carry a significant security risk.


Adversarial Perturbation

An adversarial perturbation is any modification to a clean image that preserves the semantics of the original input but fools a machine learning model into misclassifying it. It typically works as follows: the adversary computes the gradient (derivative) of the classifier's loss with respect to the input image, uses it to craft a small amount of noise that increases the loss, adds that noise to the input image, and feeds the result back to the classifier to trick it. In the example below, an imperceptible perturbation is added to the original input image to create an adversarial image.
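Stated formally, in standard notation that the article itself does not use (f is the classifier, x the clean input with true label y, δ the perturbation, and ε a small budget measured in some ℓp norm), an adversarial perturbation is a δ that changes the prediction while staying small:

```latex
\text{find } \delta \quad \text{such that} \quad f(x + \delta) \neq y
\quad \text{and} \quad \lVert \delta \rVert_p \le \epsilon
```

A small ε is what keeps the change imperceptible. Gradient-based attacks search for such a δ using ∇ₓL(θ, x, y), the gradient of the training loss L with respect to the input x rather than the parameters θ.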

Popular Adversarial Attack methods include the following:

Limited-memory BFGS (L-BFGS) – The L-BFGS method is a non-linear, gradient-based numerical optimization algorithm, used here to minimize the size of the perturbation added to an image while still causing misclassification. While it is effective at generating adversarial samples, it is computationally intensive.

Fast Gradient Sign Method (FGSM) – A simple and fast gradient-based method for generating adversarial examples. It takes a single step in the direction of the sign of the loss gradient, which bounds the amount of perturbation added to any pixel of the image while still causing misclassification.
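FGSM, as introduced by Goodfellow et al., computes x_adv = x + ε · sign(∇ₓJ(θ, x, y)). The sketch below applies that single step to a hypothetical logistic-regression "image" of four pixels in [0, 1]; the weights, the input, and ε = 0.2 are made-up values, and a real attack would obtain the gradient from a neural network through automatic differentiation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def score(x, w, b):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fgsm(x, y, w, b, eps):
    """One FGSM step against logistic regression p(y=1 | x) = sigmoid(w.x + b)."""
    p = sigmoid(score(x, w, b))
    grad_x = [(p - y) * wi for wi in w]                  # d(cross-entropy)/dx
    step = [eps * ((g > 0) - (g < 0)) for g in grad_x]   # eps * sign(gradient)
    # Each pixel moves by at most eps, then is clipped to the valid range [0, 1].
    return [min(1.0, max(0.0, xi + s)) for xi, s in zip(x, step)]

w, b = [1.5, -2.0, 0.5, 1.0], -0.2   # hypothetical trained weights
x, y = [0.6, 0.3, 0.5, 0.4], 1       # a tiny "image" the model labels as class 1
x_adv = fgsm(x, y, w, b, eps=0.2)

print(score(x, w, b) > 0, score(x_adv, w, b) > 0)
print(max(abs(a - c) for a, c in zip(x_adv, x)))  # largest per-pixel change
```

Because the step uses only the sign of the gradient, every pixel changes by exactly ε (unless clipped), which is why FGSM is usually described as an L∞-bounded attack.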

Jacobian-based Saliency Map Attack (JSMA) – Unlike FGSM, this method uses feature selection to minimize the number of features modified while causing misclassification. Flat perturbations are added to features iteratively, in decreasing order of saliency value. It is more computationally intensive than FGSM, but its advantage is that very few features are perturbed.

DeepFool Attack – This untargeted adversarial sample generation technique aims to minimize the Euclidean distance between perturbed samples and original samples. It estimates the decision boundaries between classes and adds perturbations iteratively. It is effective at producing adversarial samples with smaller perturbations, but it is more computationally intensive than FGSM and JSMA.
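For a binary linear classifier f(x) = w·x + b, the step DeepFool takes has a closed form: the smallest L2 perturbation that reaches the decision boundary is r = -f(x) w / ‖w‖². For nonlinear networks, DeepFool linearizes the classifier around the current point and repeats this step until the label changes. The sketch below covers only the linear binary case; the weights and the 0.02 overshoot are illustrative values.

```python
import math

def deepfool_linear(x, w, b, overshoot=0.02):
    """DeepFool for a binary linear classifier f(x) = w.x + b (closed-form case)."""
    f = sum(wi * xi for wi, xi in zip(w, x)) + b
    norm_sq = sum(wi * wi for wi in w)
    # Smallest L2 step that lands exactly on the boundary f = 0: r = -f(x) * w / ||w||^2
    r = [-f * wi / norm_sq for wi in w]
    # A small overshoot pushes the point just across the boundary.
    x_adv = [xi + (1 + overshoot) * ri for xi, ri in zip(x, r)]
    return x_adv, r

w, b = [3.0, 4.0], -1.0   # hypothetical classifier
x = [1.0, 1.0]            # f(x) = 6 > 0
x_adv, r = deepfool_linear(x, w, b)
f_adv = sum(wi * xi for wi, xi in zip(w, x_adv)) + b
print(f_adv, math.hypot(*r))   # f_adv < 0; ||r|| = |f(x)| / ||w|| = 6 / 5
```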

Carlini & Wagner Attack (C&W) – This technique is based on the L-BFGS attack, but it works without box constraints and uses different objective functions. The adversarial examples it generated were able to defeat state-of-the-art defenses such as defensive distillation and adversarial training, which makes it highly effective at generating adversarial examples that get past adversarial defenses.
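For reference, the L2 variant of the C&W attack is commonly written as follows, in notation taken from the C&W formulation rather than from this article: x is the original image, t the target class, Z(·) the model's logits, c > 0 a trade-off constant found by search, and κ ≥ 0 a confidence margin. The change of variables x' = ½(tanh w + 1) keeps every pixel in [0, 1] automatically, which is how the attack avoids an explicit box constraint.

```latex
\min_{w}\; \left\lVert \tfrac{1}{2}(\tanh w + 1) - x \right\rVert_2^2
  + c \cdot g\!\left(\tfrac{1}{2}(\tanh w + 1)\right),
\qquad
g(x') = \max\!\left( \max_{i \neq t} Z(x')_i - Z(x')_t,\; -\kappa \right)
```

The first term keeps the adversarial image close to the original, and the second term becomes negative (down to -κ) once the target class t wins by a margin of at least κ.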

Generative Adversarial Networks (GAN) – GANs, in which two neural networks compete with each other, have also been used to generate adversarial attacks. One network acts as the generator and the other as the discriminator. The two networks play a zero-sum game: the generator tries to produce samples that the discriminator will misclassify, while the discriminator tries to distinguish real samples from those created by the generator. Training a Generative Adversarial Network is computationally very intensive and can be highly unstable.
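The zero-sum game described above is the standard GAN objective from Goodfellow et al. (2014), where G maps random noise z to samples and D(x) is the discriminator's estimated probability that x is a real sample:

```latex
\min_{G} \max_{D} \;
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```

How GAN-based attacks adapt this objective to produce adversarial examples (for instance, with an extra term that pushes a target classifier toward errors) varies between methods and is not shown here.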

Black Box VS White Box Attacks

An adversary may or may not have knowledge of the target model and can perform the following two types of attack:

Black box attack – The adversary has no knowledge of the model (for example, how deep or wide the neural network is) or its parameters, and has no access to the training dataset. The adversary can only observe the model's outputs. This makes black box attacks the hardest to carry out, but they can be very effective when they succeed. In this case, an adversary may create an adversarial example using their own model built from scratch, or without any model at all.
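A black box attacker can still approximate gradients purely from outputs. The sketch below assumes a hypothetical `query_model` API that returns only the probability of class 1; it estimates the gradient with symmetric finite differences (two queries per feature, a basic zeroth-order approach) and then takes an FGSM-style step. The weights inside `query_model` stand in for a model the attacker cannot inspect.

```python
import math

# Hypothetical black box: returns only a probability, never weights or gradients.
_HIDDEN_W, _HIDDEN_B = [1.5, -2.0, 0.5, 1.0], -0.2

def query_model(x):
    z = sum(wi * xi for wi, xi in zip(_HIDDEN_W, x)) + _HIDDEN_B
    return 1.0 / (1.0 + math.exp(-z))

def estimate_gradient(f, x, h=1e-4):
    """Symmetric finite differences: two queries per input feature."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad

x, eps = [0.6, 0.3, 0.5, 0.4], 0.2
g = estimate_gradient(query_model, x)
# Push the class-1 probability DOWN using only the estimated gradient.
x_adv = [xi - eps * ((gi > 0) - (gi < 0)) for xi, gi in zip(x, g)]
print(round(query_model(x), 3), round(query_model(x_adv), 3), 2 * len(x), "queries")
```

The query cost grows linearly with the number of input features, which is why practical black box attacks on images rely on more query-efficient estimates or on transferring examples from a substitute model.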

White box attack – The adversary has full knowledge of the deployed model: its architecture, its input and output parameters, and the training dataset. The adversary can therefore craft adversarial samples directly against the target model. White box attacks are typically gradient-based and iterative, and they are often adaptive, meaning the adversary modifies the attack based on feedback from the model. The adversary generates an initial set of inputs and observes the model's response to them. Based on this response, the adversary modifies the inputs to make them more effective at evading the model's defenses, and repeats this process until they find inputs that reliably fool the model. Adaptive attacks are particularly challenging to defend against because the adversary can change strategy in real time to overcome whatever defenses the model has in place. In addition, because the adversary has access to the model's architecture and parameters, they can use this information to tailor attacks specifically to the model.

Currently, defense approaches that are effective against black box attacks are often vulnerable to adaptive white box attacks, and it is challenging to develop defenses that completely protect a model from an adaptive attack.

| Description | Black box | White box |
| --- | --- | --- |
| Adversary knowledge | Restricted: the adversary can only observe the network's output on some probed inputs. | Detailed knowledge of the network architecture and of the parameters resulting from training. |
| Strategy | Based on a greedy local search that builds an implicit approximation of the gradient by observing how the output changes as the input is varied. | Based on the gradient of the network's loss function with respect to the input. |

Source: http://leonardtang.me/posts/AA-Survey

Examples of Black Box Attacks

The various ways in which practical black box attacks can manifest are described below:

Physical Attacks

A physical attack involves physically adding something to the input to trick the model, and it is usually easier to carry out. For example, CMU researchers showed that an adversary could trick facial recognition models simply by wearing colorful eyeglass frames. The image below illustrates this: the first image is the original image, and the second image is an adversarial sample.

Out of Distribution (OOD) Attack

Black box attacks can also be carried out via out-of-distribution (OOD) attacks. An out-of-distribution attack is a type of adversarial attack where the attacker uses data points that are outside of the distribution of the training data used to build the model.

Machine learning models are trained on a specific dataset that represents the distribution of the problem space that they are intended to solve. An adversary can attempt to trick a model by providing input data that falls outside of this distribution. This can cause the model to produce incorrect outputs, leading to serious consequences in real-world applications such as self-driving cars, medical diagnosis systems, and fraud detection systems.
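The sketch below shows why out-of-distribution inputs are dangerous, using a hypothetical logistic-regression model assumed to have been trained on inputs in [-1, 1]. The model has no notion of "I have never seen anything like this," and for a linear model the confidence only grows as an input moves farther from the decision boundary, even far outside the training data.

```python
import math

def confidence(x, w=(2.0, -1.0), b=0.3):
    """Probability of the predicted class for a hypothetical logistic-regression model."""
    p = 1.0 / (1.0 + math.exp(-(w[0] * x[0] + w[1] * x[1] + b)))
    return max(p, 1.0 - p)

in_distribution = [0.2, 0.1]           # similar to the training inputs in [-1, 1]
out_of_distribution = [40.0, -25.0]    # far outside anything seen during training

print(round(confidence(in_distribution), 3), round(confidence(out_of_distribution), 3))
```

The unfamiliar input receives a more confident prediction than the familiar one, which is exactly the behavior an OOD attacker exploits.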

How Can We Trust Machine Learning?

As machine learning makes more decisions for us and becomes more complex, how can we trust it?

The core principles of trust revolve around the following questions:

- Can I trust what I am building?

- Can you trust what I built?

- Can we trust what we are all building?

To answer these questions, we need to consider three important qualities:

- Clarity

- Competency

- Alignment

Clarity is the quality of communicating well and being easily understood. It is about understanding why we are making a particular decision and whether we are making it for the right reasons. Clarity can help humans make more informed decisions. We need to be clear about which metric is the right one to consider.

Competency is the quality of having sufficient knowledge, judgment, skill, or strength for a particular task. In machine learning, competency is all about evaluation: we need to test trained models more systematically. The training we do offline gives us little insight into how the system might behave in the real world, so benchmark and test datasets are at best a weak proxy for what can happen in the real world.

Alignment is the most complex of the three. It is a state of agreement or cooperation among persons, groups, nations, etc. with a common cause or viewpoint. It means agreeing on the balance between concerns and trying to answer the question, “Does my system have the same cause or viewpoint that I hope to have?” Every choice you make when you create a system affects people, so those choices have to be aligned. The choice of data is one of the most important decisions that define the behavior of a machine learning model, and the diversity and coverage of the data are important for avoiding bias and the perpetuation of stereotypes.

How do we defend against adversarial attacks

While it may not be possible to prevent adversarial attacks entirely, a combination of several defense methods, both defensive and offensive, can be used to defend against them. Keep in mind that defense approaches shown to be effective against black box attacks are often vulnerable to white box attacks.

In the defensive approach, the machine learning system detects adversarial attacks and acts on them using denoising and verification ensembles.

Denoising Ensembles

A denoising algorithm removes noise from signals or images. Denoising ensembles are a machine learning technique for improving the accuracy of denoising algorithms.

Denoising ensembles involve training multiple denoising algorithms on the same input data, but with different initializations, architectures, or hyperparameters. The idea is that each algorithm will have its own strengths and weaknesses, and by combining their outputs in a smart way, the final denoised output will be more accurate.

Denoising ensembles have been successfully applied to various tasks, such as image denoising, speech denoising, and signal denoising.
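A minimal sketch of the combining step, assuming three hand-written 1-D filters as stand-ins for trained denoisers. The article describes trained models with different initializations, architectures, or hyperparameters; here the members differ in architecture (mean vs. median) and window size. The element-wise median is one possible combination rule, chosen because it ignores a single outlying member.

```python
import statistics

def mean_filter(signal, k=1):
    """Moving average over a window of up to 2k+1 samples."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - k): i + k + 1]
        out.append(sum(window) / len(window))
    return out

def median_filter(signal, k=1):
    """Moving median over a window of up to 2k+1 samples."""
    return [statistics.median(signal[max(0, i - k): i + k + 1]) for i in range(len(signal))]

def denoising_ensemble(signal, denoisers):
    """Run every denoiser, then take the element-wise median of their outputs."""
    outputs = [denoise(signal) for denoise in denoisers]
    return [statistics.median(values) for values in zip(*outputs)]

def max_error(a, b):
    return max(abs(u - v) for u, v in zip(a, b))

clean = [1.0] * 9
noisy = clean[:4] + [5.0] + clean[5:]   # a single spike of injected noise

members = [
    mean_filter,                       # smooths, but smears the spike into its neighbors
    median_filter,                     # narrow median
    lambda s: median_filter(s, k=2),   # wider median
]
denoised = denoising_ensemble(noisy, members)
print(max_error(mean_filter(noisy), clean), max_error(denoised, clean))
```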

Verification Ensemble

Verification ensemble is a technique used in machine learning to improve the performance of verification models, which are used to determine whether two inputs belong to the same class. For example, in a face recognition system, a verification model may be used to determine whether two face images belong to the same person.

A verification ensemble can combine its individual verifiers in different ways, such as averaging their outputs or using a voting mechanism to choose the output with the most agreement among them. Verification ensembles have been shown to improve performance on verification tasks such as face recognition, speaker verification, and signature verification.
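The two combination rules mentioned above can be sketched as follows, assuming each verifier outputs a similarity score in [0, 1] for a pair of inputs and that 0.5 is the decision threshold (both are assumptions; real systems tune thresholds per verifier). The scores are made up, and using an odd number of verifiers avoids ties in the vote.

```python
from collections import Counter

def vote(decisions):
    """Majority vote over boolean 'same class?' decisions from individual verifiers."""
    return Counter(decisions).most_common(1)[0][0]

def average(scores, threshold=0.5):
    """Average the verifiers' similarity scores, then threshold the mean."""
    return sum(scores) / len(scores) >= threshold

scores = [0.91, 0.35, 0.78]   # hypothetical similarity scores from three face verifiers
decisions = [s >= 0.5 for s in scores]
print(vote(decisions), average(scores))
```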

A Bit-Plane classifier is a technique used to analyze the distribution of image data across different bit planes, in order to extract useful information and identify patterns or features in the image. It is commonly used in image processing tasks such as image compression or feature extraction. Bit-Plane classifiers can help identify the specific areas or features of an image that are most vulnerable to adversarial attacks. Robust Bit-Plane classifiers can be trained to focus on specific bit planes or image features that are less susceptible to adversarial attacks, while ignoring other, more vulnerable features.
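Bit-plane defenses differ in their details, so the following is only a hypothetical illustration of the underlying idea: split 8-bit pixels into bit planes and keep only the most significant ones, where small perturbations usually leave no trace. The tiny images and the choice of four high planes are made-up values.

```python
def bit_planes(image):
    """Split an 8-bit grayscale image (list of rows) into 8 binary planes, LSB first."""
    return [[[(pixel >> bit) & 1 for pixel in row] for row in image] for bit in range(8)]

def keep_high_planes(image, n_high=4):
    """Rebuild the image from only its n_high most significant bit planes."""
    mask = (0xFF << (8 - n_high)) & 0xFF
    return [[pixel & mask for pixel in row] for row in image]

clean = [[200, 201], [57, 58]]
perturbed = [[203, 198], [55, 60]]   # changes of at most 3 intensity levels

print(bit_planes(clean)[0], bit_planes(perturbed)[0])          # least significant planes differ
print(keep_high_planes(clean), keep_high_planes(perturbed))    # high planes match
```

The protection is not guaranteed: a small change that crosses a power-of-two boundary, such as 127 to 128, alters the high bit planes as well.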

Diversity

As adversaries become more sophisticated and better at attacks, having a diverse set of denoisers and verifiers improves the chances of thwarting an attack. A diverse group of denoisers and verifiers acts as multiple gatekeepers, making it harder for the adversary to execute a successful attack.

Different denoisers may be better suited to different types or different levels of noise. For example, some denoisers may be better at removing high-frequency noise, while others may be better at removing low-frequency noise. By using a diverse set of denoisers, the model can be more effective at removing a wide range of noise types.

Different verifiers may be better suited to different types of data or different types of errors. For example, some verifiers may be better at detecting semantic errors, while others may be better at detecting syntactic errors. By using a diverse set of verifiers, the model can be more effective at detecting a wide range of errors and ensuring the validity of its output.

Conduct Adversarial Training

Adversarial training is a machine learning technique for improving a model's robustness against adversarial attacks. The idea is to augment the training data with adversarial examples: inputs perturbed in a way that causes the model to make mistakes. The model is then trained on both the original and the adversarial examples, which forces it to learn to make more robust decisions.

The process involves the following steps:

- Generate adversarial examples: Adversarial examples can be generated using a variety of techniques, such as the Fast Gradient Sign Method (FGSM), the Projected Gradient Descent (PGD) method, or the Carlini & Wagner (C&W) attack. These methods involve perturbing the input data in a way that maximizes the model’s loss function.

- Augment the training data: The adversarial examples are added to the training set, along with their corresponding labels.

- Train the model: The model is trained on the augmented dataset, using standard optimization techniques such as stochastic gradient descent (SGD).

- Evaluate the model: The performance of the model is evaluated on a test set that contains both clean and adversarial examples.

- Repeat the process: The steps above are repeated for multiple epochs, regenerating adversarial examples against the updated model each time, with the goal of gradually improving the model's ability to resist adversarial attacks.
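The steps can be sketched end to end on a toy problem. This assumes a two-feature logistic-regression model, synthetic Gaussian data, FGSM with ε = 0.5 as the attack, and plain SGD; all of these are illustrative choices rather than a recommended configuration.

```python
import math
import random

random.seed(1)

def sigmoid(z):
    z = max(-60.0, min(60.0, z))  # avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))

def make_blobs(n):
    """Two Gaussian clusters: label 1 around (1.5, 1.5), label 0 around (-1.5, -1.5)."""
    data = []
    for _ in range(n):
        y = random.randint(0, 1)
        c = 1.5 if y else -1.5
        data.append(([random.gauss(c, 0.5), random.gauss(c, 0.5)], y))
    return data

def fgsm(x, y, w, b, eps):
    err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y
    return [xi + eps * ((err * wi > 0) - (err * wi < 0)) for xi, wi in zip(x, w)]

def accuracy(data, w, b):
    return sum((w[0] * x[0] + w[1] * x[1] + b > 0) == bool(y) for x, y in data) / len(data)

train, test = make_blobs(400), make_blobs(200)
w, b, lr, eps = [0.0, 0.0], 0.0, 0.1, 0.5

for epoch in range(20):  # Step 5: repeat for several epochs
    # Step 1: craft adversarial examples against the CURRENT model.
    adversarial = [(fgsm(x, y, w, b, eps), y) for x, y in train]
    # Step 2: augment the clean training data with them (labels unchanged).
    augmented = train + adversarial
    random.shuffle(augmented)
    # Step 3: one epoch of plain SGD on the augmented set.
    for x, y in augmented:
        err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y
        w = [w[0] - lr * err * x[0], w[1] - lr * err * x[1]]
        b -= lr * err

# Step 4: evaluate on clean and on FGSM-perturbed test data.
clean_acc = accuracy(test, w, b)
adv_acc = accuracy([(fgsm(x, y, w, b, eps), y) for x, y in test], w, b)
print(f"clean accuracy {clean_acc:.2f}, FGSM accuracy {adv_acc:.2f}")
```

Regenerating the adversarial set inside the loop matters: examples crafted against an earlier version of the model quickly stop being adversarial for the updated one.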

While adversarial training cannot guarantee complete robustness, it is one of the most effective strategies for improving the robustness of machine learning models against adversarial attacks.

A surrogate classifier can be used as a tool to generate adversarial perturbations that can then be used to attack the original model. This can be especially useful when the original model is complex or difficult to attack directly, as the surrogate model can provide a simpler target for the attack. One approach is to train a separate neural network that is similar in architecture and behavior to the original model. This surrogate model is then used to generate adversarial perturbations which can be applied to the original model to test its robustness against attacks.
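The transfer idea can be sketched with two hypothetical logistic-regression models: the perturbation is computed with FGSM using only the surrogate's weights, and then checked against the target's decision. The weights are invented; transfer works here because the surrogate's gradient signs match the target's, and in practice only a fraction of adversarial examples transfer.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical models: gradients are computed only for the surrogate.
TARGET_W, TARGET_B = [1.5, -2.0, 0.5, 1.0], -0.2       # the model under test
SURROGATE_W, SURROGATE_B = [1.2, -1.7, 0.8, 0.9], 0.0  # a separately trained look-alike

def score(x, w, b):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

x, y, eps = [0.6, 0.3, 0.5, 0.4], 1, 0.2
p = sigmoid(score(x, SURROGATE_W, SURROGATE_B))
grad = [(p - y) * wi for wi in SURROGATE_W]   # gradient of the SURROGATE's loss only
x_adv = [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

# Does the perturbation crafted on the surrogate also flip the target's decision?
print(score(x, TARGET_W, TARGET_B) > 0, score(x_adv, TARGET_W, TARGET_B) > 0)
```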

Assess Risk

Risk assessment involves identifying and evaluating potential risks associated with the model’s deployment, such as the likelihood and impact of an adversarial attack.

Identify potential adversarial attack scenarios that could occur in the model’s deployment environment. This could include attacks such as evasion attacks, poisoning attacks, or model extraction attacks.

Assess the likelihood and impact of each identified attack scenario. The likelihood of an attack could be based on factors such as the attacker’s access to the machine learning model, their knowledge of the model’s architecture, and the difficulty of the attack. The impact of an attack could be based on factors such as the cost of incorrect predictions or the damage to the model’s reputation.

Develop mitigation strategies to reduce the likelihood and impact of identified attack scenarios. This could include strategies such as using multiple machine learning models to make predictions, limiting access to the model, or incorporating input preprocessing techniques to detect potential adversarial inputs.

Monitor the model’s performance and behavior to detect potential adversarial attacks. This could include monitoring the distribution of input data or analyzing the model’s decision-making process to identify potential signs of an attack.

It is important to regularly review and update these strategies as new attack scenarios emerge or the deployment environment changes.
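As one concrete form of the monitoring step, the sketch below compares the mean of an incoming batch of a single input feature with statistics recorded at training time and raises an alert when the shift exceeds three standard errors. The feature values, the batch sizes, and the threshold of 3 are hypothetical; production monitors usually track many features and use richer statistical tests.

```python
import statistics

def drift_alert(train_values, batch, threshold=3.0):
    """Flag a batch whose mean is more than `threshold` standard errors from the training mean."""
    mu = statistics.mean(train_values)
    sigma = statistics.stdev(train_values)
    standard_error = sigma / len(batch) ** 0.5
    z = (statistics.mean(batch) - mu) / standard_error
    return abs(z) > threshold, z

# One input feature as observed during training (hypothetical values).
train_feature = [0.9, 1.0, 1.1, 1.0, 0.95, 1.05, 1.0, 0.98, 1.02, 1.0]

normal_alert, _ = drift_alert(train_feature, [1.01, 0.99, 1.0, 1.02])
shifted_alert, z = drift_alert(train_feature, [1.3, 1.25, 1.35, 1.28])
print(normal_alert, shifted_alert, round(z, 1))
```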

Verify Data

Data verification involves thoroughly checking and validating the data used to train the model. This process can include data preprocessing, cleaning, augmentation, and quality checks. Preprocessing steps should include normalization and compression. Input normalization, in particular, preprocesses the input data so that it falls within a certain range or distribution, which can reduce the effectiveness of certain types of adversarial attacks, such as those that add small perturbations to the input data. Training data can also be made more robust by ensuring that it is encrypted and sanitized, and it is advisable to train your model on data in an offline setting.

By constantly reviewing the training data for contamination, you make the model less vulnerable to adversarial attacks, particularly poisoning.
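A minimal sketch of the normalization and compression steps, assuming 8-bit pixel inputs whose training range is 0 to 255: `normalize` rescales and clips inputs into [0, 1], and `reduce_bit_depth` quantizes them to 8 levels, in the spirit of the bit-depth-reduction "feature squeezing" defense. Both function names and the 3-bit setting are illustrative choices.

```python
def normalize(x, lo, hi):
    """Scale raw features into [0, 1] using the training range, clipping anything outside it."""
    return [min(1.0, max(0.0, (v - lo) / (hi - lo))) for v in x]

def reduce_bit_depth(x, bits=3):
    """Quantize [0, 1] features to 2**bits levels, erasing very small perturbations."""
    levels = 2 ** bits - 1
    return [round(v * levels) / levels for v in x]

clean_pixels = [51, 140, 230]
perturbed_pixels = [53, 138, 232]   # each nudged by 2 intensity levels

clean_in = reduce_bit_depth(normalize(clean_pixels, 0, 255))
perturbed_in = reduce_bit_depth(normalize(perturbed_pixels, 0, 255))
print(clean_in == perturbed_in)        # the nudges are squeezed out
print(normalize([-10, 300], 0, 255))   # out-of-range values are clipped
```

Quantization only erases perturbations smaller than half a quantization step, and a value sitting near a rounding boundary can still flip, so this reduces rather than eliminates the effect of small perturbations.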

Conclusion

Machine learning models are here to stay and will continue to become more advanced. Adversarial attacks will become increasingly sophisticated, and defending against them will require a multi-faceted approach. While training deep learning models on adversarial examples can increase robustness to some degree, current applications of adversarial training have not fully solved the problem, and scaling them remains a challenge.

It is important to note that defending against adversarial attacks has to be an ongoing process, and machine learning practitioners must remain vigilant, subject their machine learning systems to attack simulations and adapt their defense strategies as new attack scenarios emerge.


References

Peter, Hansen. “How Can I Trust My ML Predictions?” phData, 7 Jul. 2022, https://www.phdata.io/blog/how-can-i-trust-my-ml-model-output. Accessed 20 Mar. 2023.

Daniel, Geng, and Rishi, Veerapaneni. “Tricking Neural Networks: Create Your Own Adversarial Examples.” medium.com, 10 Jan. 2018, https://medium.com/@ml.at.berkeley/tricking-neural-networks-create-your-own-adversarial-examples-a61eb7620fd8. Accessed 22 Mar. 2023.

Alexey, Kurakin, Ian J., Goodfellow, and Samy, Bengio. “Adversarial Examples in the Physical World.” Technical Report, Google, Inc., https://static.googleusercontent.com/media/research.google.com/en//pubs/archive/45471.pdf. Accessed 22 Mar. 2023.

“Attacking Machine Learning with Adversarial Examples.” openai.com, 24 Feb. 2017, https://openai.com/research/attacking-machine-learning-with-adversarial-examples. Accessed 25 Mar. 2023.

“Adversarial Machine Learning: What? So What? Now What?” https://www.youtube.com/watch?v=JsklJW01bjc. Accessed 24 Mar. 2023.

Carlos Guestrin. “Stanford Seminar: How Can You Trust Machine Learning?” https://youtu.be/xPLLbueK4NY. Accessed 24 Mar. 2023.

Gaudenz Boesch. “What Is Adversarial Machine Learning? Attack Methods in 2023.” viso.ai, https://viso.ai/deep-learning/adversarial-machine-learning/. Accessed 25 Mar. 2023.