AI Pioneer Expresses Concerns About Future

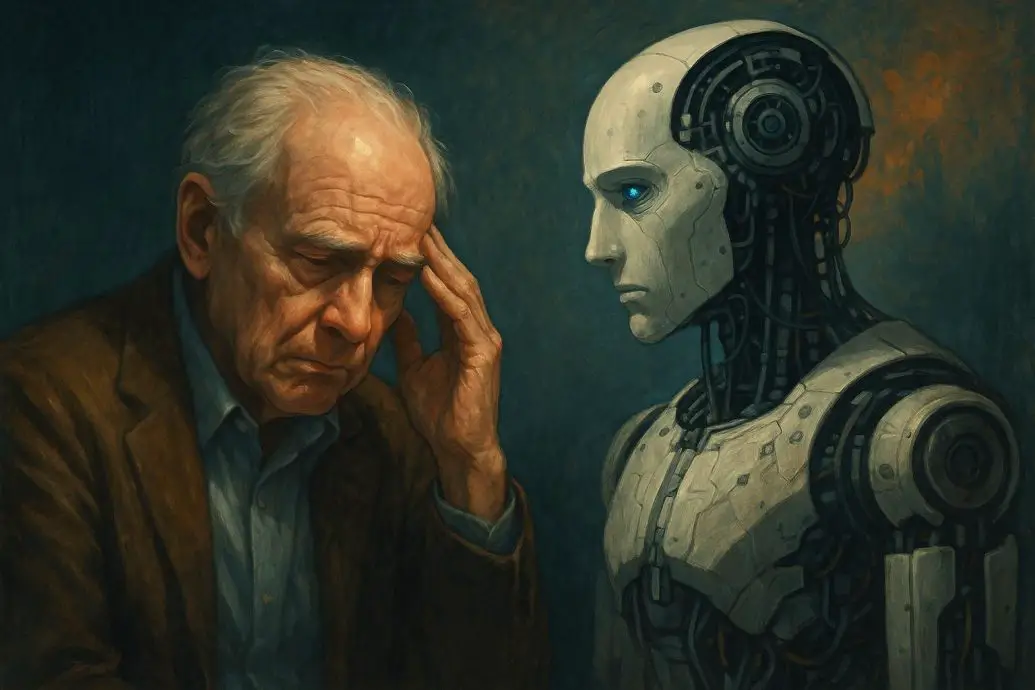

“AI Pioneer Expresses Concerns About Future” is a headline causing waves in the world of technology. If you are fascinated by artificial intelligence, this message from Geoffrey Hinton deserves your attention, because it raises urgent questions about the direction of AI progress. Hinton was a key figure behind the foundational work that powers today’s AI, but he has shifted from advocate to cautious advisor. His recent statements highlight emerging dangers that, in his view, require immediate attention. Here is why the “Godfather of AI” is more anxious than ever about the technology he helped create.

Who Is Geoffrey Hinton and Why His Voice Matters

Geoffrey Hinton is widely celebrated as one of the pioneers of modern artificial intelligence. His groundbreaking work on neural networks directly fueled the rise of deep learning, which has become the backbone of today’s most advanced AI systems. Known as the “Godfather of AI,” he earned global respect for contributions to machine learning that laid the foundation for tools like ChatGPT, Midjourney, and self-driving car algorithms.

In 2012, Hinton and his students Alex Krizhevsky and Ilya Sutskever introduced an image-recognition model, later known as AlexNet, whose success in the ImageNet competition helped launch the deep learning revolution. This achievement was a critical milestone in AI development. In recent years, however, Hinton’s tone has changed. Instead of celebrating progress, he is now sounding the alarm about the risks these powerful systems might pose.

Why Hinton Left Google and What He Had to Say

Until 2023, Hinton worked with the Google Brain team, contributing his expertise to advancing AI research. His exit from the company marked a turning point: he left so that he could speak freely about the dangers of rapid AI advancement. In interviews since his departure, Hinton has emphasized that he was not criticizing Google specifically; rather, he had become increasingly concerned about the direction AI is heading worldwide.

Hinton believes we are approaching a critical moment. He points to the seemingly unstoppable race among global tech companies to build ever more advanced AI tools. As competition heats up, the push for better performance may come at the cost of safety and long-term ethics. The fear is simple: AI may evolve so fast that society won’t have time to adapt or manage the consequences.

Defining the New Risks of Artificial Intelligence

The dangers Hinton warns about were once considered distant possibilities but are now becoming more real each year. Among his top concerns are:

- AI surpassing human intelligence: If machines become smarter than humans, it is uncertain whether people can keep them under control.

- Job losses due to widespread automation: Blue-collar and white-collar jobs alike are at risk.

- Increased misinformation: AI can easily generate fake images, deepfake videos, and false text that spread rapidly online.

- Loss of accountability: As models become more complex, understanding how they make decisions gets harder.

- Autonomous weaponization: There is concern that these systems may eventually be used in warfare without human oversight.

Hinton points out that the future could include machines acting in ways humans can’t predict. He is not saying disaster will certainly happen, only that the risk is high enough to warrant real concern. A system whose goals are misaligned with human interests could cause unintended consequences on a huge scale.

What Needs to Change in How We Approach AI

One of Hinton’s central arguments is that AI needs closer oversight. While he supports innovation, he believes strong ethics must form the backbone of future development. To prevent harmful outcomes, international guidelines or even regulation may be required to keep AI under control.

He also recommends that researchers explore how to align artificial general intelligence (systems capable of broader, more adaptable thinking) with human values. There must be greater transparency into how GPT and similar large language models make decisions, especially when they are applied in healthcare, finance, or law.

Academic and industry partnerships could ensure ethical frameworks are built into the design of new AI products from the ground up. Hinton says a combination of interdisciplinary research and global awareness can bring meaningful progress.

Benefits of AI Still Exist, but the Clock Is Ticking

Despite his recent criticisms, Hinton remains hopeful that AI can benefit society. It could transform education, improve health diagnostics, and help solve problems beyond the ability of any individual human. Yet he cautions that these benefits will come only if the technology is used appropriately, and the window to guide AI’s direction may be closing rapidly.

Recent models have already demonstrated complex capabilities previously thought to be decades away. Tools like ChatGPT can simulate human conversation with astonishing fluency. Image generators can create realistic photos of events that never occurred. These tools can be educational, but they can also be dangerous in unmoderated environments.

Hinton stresses that it’s not the development of AI that’s the issue but the speed and lack of safeguards during the process. Without coordination among governments, corporations, and individuals, there is no guarantee that AI will be developed safely or for the greater good.

The Importance of Public Awareness and Dialogue

Hinton’s open concerns may be the wake-up call society needs. One of the most important aspects of his message is that the public, not just developers and tech companies, needs to become educated about AI. Ordinary citizens must understand both what is possible and what is at stake.

Schools, media outlets, governments, and corporations should all take part in a new movement that prioritizes transparent communication and open discussions about artificial intelligence. Hinton believes awareness is the first step in preventing unregulated and potentially dangerous outcomes.

Climate science took decades to enter mainstream conversation. AI ethics cannot afford the same delay; it needs to be integrated into public education and political policy now, before it’s too late to act. There is still time if awareness turns into action.

What the Future Might Look Like And What We Can Do Today

Predicting the future of artificial intelligence is difficult. Even Hinton admits it’s impossible to forecast exactly how far AI will go or how fast it will change our world. But many experts agree that we are living through one of the defining technological moments in modern history.

Developers can build safe practices into their products. Companies can commit to ethical AI principles. Policymakers can support new laws that protect the public. And citizens can stay curious, ask questions, and hold institutions accountable.

Hinton’s message is not about stopping AI altogether. His intention is to make sure it doesn’t spiral out of human control. It’s a call to slow things down briefly, examine where the path is heading, and only then continue with clarity and purpose.

Conclusion

The voice of Geoffrey Hinton carries weight not just because of his expertise, but because of his honesty. He helped build the foundation for today’s AI, and now he is urging the world to think twice before rushing toward more advanced systems. His message is clear: artificial intelligence can either help humanity or harm it, and which one depends on the steps we take today.

From education and job security to ethics and international policy, every part of society plays a role. The next few years will be critical. Those who control and influence AI must act responsibly. Those who use it must stay informed. And those who watch from the sidelines need to start asking the right questions now. Geoffrey Hinton’s concerns should push us all to reflect on our shared future and take better steps to protect it.
