Introduction

Logistic regression is one of the most commonly used statistical methods for solving classification problems. Its aim is to estimate the probability of an event occurring based on the independent variables. Binary logistic regression analyses the relationship between a binary dependent variable, i.e. one with only two categories, and one or more independent variables. However, there may be situations where the dependent variable has three or more categories. In such situations, multinomial logistic regression is used. The main difference between logistic regression and multinomial logistic regression is the number of categories in the dependent variable. Like multiple linear regression, multinomial logistic regression is a form of predictive analysis.


What is Multinomial Logistic Regression?

Multinomial logistic regression is a statistical model used when the dependent variable is categorical and has more than two outcomes. It extends binary logistic regression, which applies to dichotomous outcomes, to multiple classes. It provides a probability for each outcome category and quantifies the impact of predictor variables on these probabilities. This makes it useful for understanding and predicting categorical outcomes in complex data sets.

Multinomial logistic regression is a classification algorithm used to model and analyze the relationship between a categorical dependent variable with more than two categories (i.e., a multinomial variable) and one or more independent, or explanatory, variables. With K categories, K(K-1)/2 pairs of categories can be formed, but the model only needs the K-1 comparisons against a single reference category, because every other pairwise contrast follows from these. In this type of regression, the dependent variable is categorical and may be nominal or ordinal. A nominal variable's categories have no natural order, whereas an ordinal variable's categories can be ordered or ranked. Multinomial logistic regression ignores that ordering, so outcomes with ordered categories are often analyzed with ordinal logistic regression instead. The independent variables can be continuous, categorical, or a combination of both.

When conducting a multinomial logistic regression, one category is chosen as the reference or baseline category, against which the other categories are compared. The reference category is typically selected based on theoretical or practical considerations, and the interpretation of the model’s coefficients is based on the comparisons with this reference category. The response categories represent the different possible outcomes or groups that the observations can be classified into. For example, if we are studying the factors influencing a person’s choice of transportation mode (car, bus, or bicycle), the response variable would have three categories: car, bus, and bicycle.

Two or more independent variables can also be multiplied together to create additional independent variables called interaction terms. They capture the combined effect of the interacting variables on the dependent variable, allowing you to examine whether the relationship between one independent variable and the outcome categories differs across levels of another. For example, suppose you have a multinomial logistic regression model predicting the likelihood of different car types (categories: sedan, SUV, and hatchback) based on variables such as age and income. If you include an interaction term between age and income, you can assess whether the effect of income on car type varies across age groups.

It’s important to note that interpreting odds ratios in multinomial regression can be more complex than in binary logistic regression, because several outcome categories are being compared. Additionally, the term “adjusted odds ratios”, which in binary logistic regression refers to odds ratios that account for the influence of other variables, is not as commonly used in multinomial regression. The estimated coefficients represent the log-odds ratios of each category compared with the reference category. For example, if “car” is chosen as the reference category in the transportation example, the model estimates the log-odds of choosing the bus, and of choosing the bicycle, relative to choosing the car. These coefficients indicate the direction and magnitude of the association between the explanatory variables and the log-odds of belonging to a particular category relative to the reference category. Standard deviation is not directly used to describe the outcome in multinomial logistic regression, since it applies to continuous variables rather than categorical outcomes.

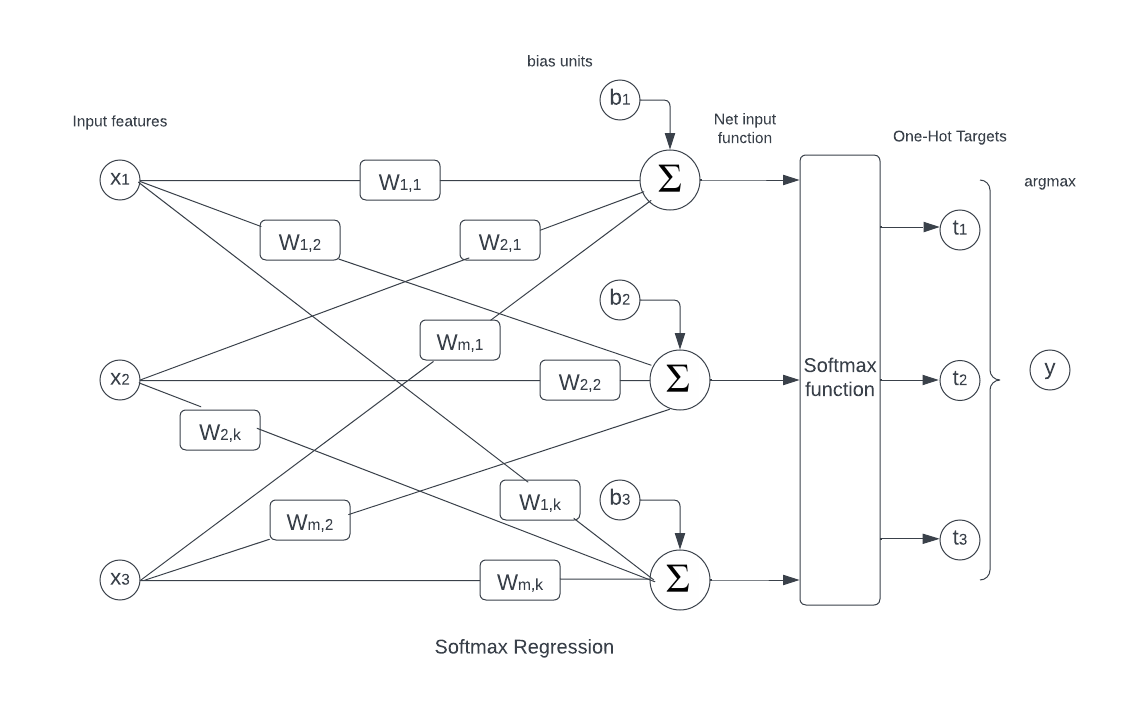

Multinomial logistic regression is closely related to the concept of a probability distribution. In this regression model, the dependent variable follows a multinomial distribution, which assigns a probability to each of the categories. The probabilities are estimated using the softmax function, a generalization of the logistic function, which maps the linear combinations of predictor variables to the probability space. A normalization factor, the sum in the softmax denominator, ensures that the predicted probabilities for all categories sum to 1. It is required because the model estimates the log-odds of each category relative to a reference category, and exponentiating those log-odds alone does not yield probabilities that sum to 1. The model involves estimating K-1 regression equations, where K is the number of categories in the dependent variable, because one category serves as the reference against which the others are compared.

The K-1 regression equations capture the relationship between the predictor variables and the log-odds of each category relative to the reference category. The reference category is usually selected arbitrarily or based on prior knowledge, and its coefficients are typically set to zero. Observed features or predictor variables are used to predict the probabilities of different categories in the dependent variable. These observed features are typically numerical or categorical variables that are believed to be related to the outcome. By estimating K-1 separate regression equations, the model takes into account the unique relationships between the predictor variables and each category’s log-odds compared to the reference category. This allows for the modeling of the distinct probabilities associated with each category, while still maintaining the necessary constraint that the probabilities sum up to 1 across all categories.


Multinomial logistic regression is also known as softmax regression, maximum entropy (MaxEnt) classifier, or multinomial logit model. These terms are often used interchangeably to refer to the same statistical method.

Some examples where a multinomial logit model can be applied:

- A college student's choice of major, based on their grades, socioeconomic status, and likes and dislikes.

- School students' choice between a general program, a vocational program, or an academic program, based on their writing scores and preferences.

To conduct a multinomial logistic regression analysis, the following steps are typically followed:

Data Preparation

Prepare your dataset, ensuring that the outcome variable and explanatory variables are correctly coded and formatted.

We have a series of N data points. Each data point i, from 1 to N, consists of a set of M explanatory variables and an associated categorical outcome Y_i (also known as the dependent variable or response variable), which can take on one of K possible values representing separate categories. The explanatory variables and the outcome are observed properties of the data points, and are often thought of as arising from N separate observations or experiments.

Model Specification

Determine the appropriate model specification based on your research question and the nature of the data. This includes selecting the outcome variable and identifying the relevant explanatory variables.

Model Estimation

Estimate the parameters (coefficients) of the multinomial logistic regression model. This is typically done using maximum likelihood estimation, which finds the set of coefficients that maximizes the log-likelihood of the observed data. Because the likelihood equations have no closed-form solution, model estimation is an iterative process.
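The estimation step can be sketched as follows. This is a hypothetical, minimal pure-Python example: it maximizes the log-likelihood with plain batch gradient ascent (a deliberately simple stand-in; statistical packages usually use Newton-Raphson-type methods), uses an invented one-predictor dataset with three classes, and fixes class 0 as the reference. The function names are illustrative and do not come from any library.

```python
import math


def softmax(scores):
    """Map a list of real-valued scores to probabilities that sum to 1."""
    top = max(scores)  # shift for numerical stability; cancels in the ratio
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def log_likelihood(beta, X, y):
    """Multinomial log-likelihood: sum of ln P(Y_i = y_i | x_i)."""
    total = 0.0
    for x, label in zip(X, y):
        row = [1.0] + list(x)  # prepend the intercept term
        probs = softmax([sum(b * v for b, v in zip(beta_k, row)) for beta_k in beta])
        total += math.log(probs[label])
    return total


def fit_multinomial_logit(X, y, n_classes, lr=0.1, n_iter=2000):
    """Maximize the log-likelihood by batch gradient ascent.

    Class 0 is the reference category: its coefficient vector stays at zero.
    """
    rows = [[1.0] + list(x) for x in X]
    n, d = len(rows), len(rows[0])
    beta = [[0.0] * d for _ in range(n_classes)]
    for _ in range(n_iter):
        grad = [[0.0] * d for _ in range(n_classes)]
        for row, label in zip(rows, y):
            probs = softmax([sum(b * v for b, v in zip(beta[k], row)) for k in range(n_classes)])
            for k in range(1, n_classes):  # skip the reference class
                # Gradient of the log-likelihood: (indicator - probability) * features
                err = (1.0 if label == k else 0.0) - probs[k]
                for j in range(d):
                    grad[k][j] += err * row[j]
        for k in range(1, n_classes):
            for j in range(d):
                beta[k][j] += lr * grad[k][j] / n
    return beta


# Hypothetical toy data: one predictor, three overlapping classes
X = [[v] for v in [-2.0, -1.5, -1.0, -0.5, -0.2, 0.0, 0.3, 0.6, 1.0, 1.4, 1.8, 2.2]]
y = [0, 0, 1, 0, 1, 1, 0, 2, 1, 2, 2, 2]

beta = fit_multinomial_logit(X, y, n_classes=3)
zero = [[0.0, 0.0] for _ in range(3)]
print("log-likelihood at zero:", round(log_likelihood(zero, X, y), 3))
print("log-likelihood after fitting:", round(log_likelihood(beta, X, y), 3))
```

Gradient ascent converges more slowly than Newton's method, but it makes the structure of the likelihood gradient, (indicator minus predicted probability) times the features, easy to see.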

Model Evaluation Procedure

The model evaluation procedure assesses how well the model captures the relationship between the variables. Common tools include likelihood ratio tests, AIC (Akaike Information Criterion), BIC (Bayesian Information Criterion), and pseudo R-squared measures.
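AIC, BIC and the likelihood ratio statistic are simple functions of the maximized log-likelihood. The sketch below assumes hypothetical log-likelihood values and parameter counts (the counts follow from K-1 equations, each with an intercept and one coefficient per predictor). Turning the likelihood ratio statistic into a p-value would additionally need a chi-square distribution function, for example from SciPy, which is left out here.

```python
import math


def aic(log_lik, n_params):
    """Akaike Information Criterion: 2k - 2 ln L (lower is better)."""
    return 2 * n_params - 2 * log_lik


def bic(log_lik, n_params, n_obs):
    """Bayesian Information Criterion: k ln(n) - 2 ln L (lower is better)."""
    return n_params * math.log(n_obs) - 2 * log_lik


def likelihood_ratio_statistic(log_lik_restricted, log_lik_full):
    """-2 (ln L_restricted - ln L_full); approximately chi-square distributed with
    df equal to the difference in the number of parameters."""
    return -2 * (log_lik_restricted - log_lik_full)


# Hypothetical fit: n = 200, K = 3 categories, 2 predictors.
# Full model: (K - 1) * (1 intercept + 2 slopes) = 6 parameters; intercept-only model: 2.
ll_null, ll_full, n = -210.4, -185.9, 200
print("AIC:", round(aic(ll_full, 6), 2))
print("BIC:", round(bic(ll_full, 6, n), 2))
print("LR statistic:", round(likelihood_ratio_statistic(ll_null, ll_full), 2), "on", 6 - 2, "df")
```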

Interpretation of Coefficients

The model coefficients represent the relationship between the explanatory variables and the log-odds of each outcome category compared with a chosen reference category. They can be used to understand the direction and magnitude of the effects of the explanatory variables on the outcome categories.
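To make the interpretation concrete, the sketch below uses hypothetical coefficients for the transportation example, with “car” as the reference category, and checks numerically that a one-unit increase in the predictor multiplies the bus-versus-car odds by e raised to the bus slope. The predictor (distance to work) and all coefficient values are invented for illustration.

```python
import math


def category_probs(x, coefs):
    """Softmax probabilities for one predictor; coefs maps category -> (intercept, slope)."""
    scores = {c: a + b * x for c, (a, b) in coefs.items()}
    total = sum(math.exp(s) for s in scores.values())
    return {c: math.exp(s) / total for c, s in scores.items()}


# Hypothetical coefficients; "car" is the reference, so its coefficients are zero
coefs = {"car": (0.0, 0.0), "bus": (0.4, -0.3), "bicycle": (1.2, -0.8)}

before = category_probs(2.0, coefs)  # e.g. 2 km from work
after = category_probs(3.0, coefs)   # one unit more
odds_ratio = (after["bus"] / after["car"]) / (before["bus"] / before["car"])
print(round(odds_ratio, 6), round(math.exp(-0.3), 6))  # the two values agree
```

Some software reports these exponentiated coefficients directly; Stata, for instance, calls them relative-risk ratios.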

Predictions and Inference

Use the estimated model to predict the outcome category for new observations, or to infer the probabilities of the outcome categories given specific values of the explanatory variables. Additionally, conduct hypothesis tests, such as Wald tests or likelihood ratio tests, to assess the significance of the coefficients and to compare different groups or levels within the explanatory variables. If the dependent variable has no natural ordering, the ordinary least squares estimator should not be used; a multinomial logit model or a multinomial probit model is appropriate instead. Both can be derived from latent utilities: the multinomial probit model assumes that the error terms of these latent variables follow a multivariate normal distribution, while the multinomial logit model assumes independent, identically distributed extreme-value (Gumbel) errors. This leads to different functional forms for the models.

Model Validation

Validate the model by checking assumptions, such as the independence of observations, linearity of the logit, and absence of multicollinearity. Assess the robustness of the results through sensitivity analyses or by cross-validating the model on different subsets of the data.

Reporting and Interpretation

Summarize and report the findings of the multinomial logistic regression analysis, including the estimated coefficients, their significance, and the interpretation of the results. Provide context and discuss the implications of the findings in relation to the research question.

The parameters of the multinomial logistic regression model are estimated using maximum likelihood. The model's probability equation can be expressed as follows:

$$P(Y = k \mid X) = \frac{e^{\beta_k \cdot X}}{\sum_{j=1}^{K} e^{\beta_j \cdot X}}$$

Where:

P(Y = k | X) – probability of the dependent variable Y being in category k given the vector of independent variables X.

K – total number of categories for the dependent variable.

βk – vector of coefficients for the k-th category of the dependent variable.

X – vector of independent variables.

The denominator is the normalization term of the softmax function; it ensures that the probabilities for all categories (j = 1 to K) add up to 1. Because adding the same vector to every βk leaves these probabilities unchanged, one category is chosen as the reference and its coefficient vector is fixed at zero, so each remaining βk describes the effect of the independent variables on the log-odds of category k relative to the reference. The coefficients are estimated using maximum likelihood estimation or other optimization techniques. Once they are estimated, the probability of each category can be calculated from the probability equation, and the category with the highest probability can be taken as the predicted category for a given set of independent variable values. It's important to note that multinomial regression assumes the independence of observations and the independence of irrelevant alternatives (IIA). Unlike ordinal logistic regression, it does not make the proportional odds assumption: each non-reference category has its own coefficient vector, so the relationship between the independent variables and the log-odds is allowed to differ across categories.
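The prediction rule described above can be sketched in a few lines of pure Python. The coefficient vectors are hypothetical, with the first category as the reference (all zeros), and X includes a leading 1.0 so that the first element of each coefficient vector acts as an intercept.

```python
import math


def predict_proba(x, betas):
    """P(Y = k | X) = exp(beta_k . x) / sum_j exp(beta_j . x) for each category k."""
    scores = [sum(b * v for b, v in zip(beta_k, x)) for beta_k in betas]
    top = max(scores)  # numerical-stability shift; cancels between numerator and denominator
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_class(x, betas):
    """Index of the category with the highest predicted probability."""
    probs = predict_proba(x, betas)
    return max(range(len(probs)), key=probs.__getitem__)


# Hypothetical coefficient vectors (intercept, x1, x2) for K = 3 categories;
# the first category is the reference, so its vector is all zeros.
betas = [[0.0, 0.0, 0.0], [-1.0, 0.8, 0.1], [-2.0, 0.2, 1.1]]
x = [1.0, 2.0, 0.5]  # the leading 1.0 multiplies the intercept

print([round(p, 3) for p in predict_proba(x, betas)])
print(predict_class(x, betas))  # 1
```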


Multinomial logistic regression can be formulated as a log-linear model, in which the logarithm of the probability of each category in the dependent variable is modeled as a linear combination of the predictor variables, minus a normalization term shared by all categories. The model estimates regression coefficients that quantify the relationships between the predictor variables and the log-odds of each category relative to a reference category. Exponentiating the linear predictors and normalizing them so that they sum to 1 gives the predicted probabilities. This log-linear formulation allows for the analysis of the multiplicative effects of the predictor variables on the outcome odds, providing insights into the associations between the predictors and the categorical outcome.

Multinomial logistic regression can also be formulated as a latent-variable model, in which the observed categorical outcome is driven by unobserved continuous latent variables. For each category k, a latent variable, often interpreted as a utility, is defined as Y*k = βk·X + εk, and the observed outcome is the category whose latent variable is largest. The latent variables capture the unobserved information that links the predictor variables to the categorical outcome, while the error variables εk account for inherent variability and measurement error. When the εk are independent and each follows a standard type-1 extreme value (Gumbel) distribution, this formulation yields exactly the multinomial logit probabilities. Each category has its own regression coefficient vector βk, and interaction or transformed terms can be added to the predictors to capture more complex relationships.
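The latent-variable view can be checked by simulation. This sketch, under assumed utility values, draws standard Gumbel errors, picks the category with the largest latent value, and compares the resulting choice frequencies with the softmax probabilities; with enough draws the two should agree up to Monte Carlo noise. The utility values and the fixed random seed are arbitrary choices.

```python
import math
import random


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def standard_gumbel(rng):
    """Draw from the standard type-1 extreme value (Gumbel) distribution."""
    u = rng.random()
    while u == 0.0:  # random() can return 0.0, and log(0) is undefined
        u = rng.random()
    return -math.log(-math.log(u))  # inverse of the CDF exp(-exp(-x))


def simulate_choices(utilities, n_draws, seed=0):
    """Choose the category with the largest latent value v_k + eps_k, n_draws times."""
    rng = random.Random(seed)
    counts = [0] * len(utilities)
    for _ in range(n_draws):
        latent = [v + standard_gumbel(rng) for v in utilities]
        counts[latent.index(max(latent))] += 1
    return [c / n_draws for c in counts]


utilities = [0.0, 0.5, -1.0]  # hypothetical values of beta_k . x
simulated = simulate_choices(utilities, 100_000)
print("simulated:", [round(p, 3) for p in simulated])
print("softmax:  ", [round(p, 3) for p in softmax(utilities)])
```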

In the context of the multinomial logit model, latent-variable formulations can be used to account for unobserved heterogeneity or latent constructs that might influence the relationship between the predictor variables and the multinomial outcome variable. This can provide a more comprehensive and nuanced understanding of the underlying structure and dynamics of the data. This is a common approach in discrete choice models, and it can be extended to more complex models, such as nested logit or mixed logit models, which provide increased flexibility and can capture more intricate relationships between the independent variables and the outcome categories.

The independence of irrelevant alternatives (IIA) assumption is a critical assumption in choice models, including multinomial logit models. It states that the relative odds of choosing between two options do not depend on the presence or absence of other alternatives. In other words, the relative probabilities of choosing between two alternatives should not change when additional alternatives are introduced.

Maximum likelihood estimation (MLE) is used to estimate the regression coefficients of the multinomial logit model, choosing the values that maximize the likelihood of observing the actual outcome categories given the predictor variables. The method assumes that the observations are independent and that each outcome, given its predictors, follows a multinomial (categorical) distribution.

Dependent Variable

In multinomial logistic regression, the reference category is the category of the dependent variable that is used as a baseline for comparison with the other categories. It is the category that is omitted from the set of equations used to estimate the probabilities of the other categories.

Suppose we want to predict the level of educational attainment (high school, college, or graduate degree) based on a set of demographic and socioeconomic factors such as age, gender, income, and parental education.

The dependent variable will be the level of education:

- high school

- college

- graduate degree

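We could choose high school as the reference category. The model would then estimate two equations: one for the log-odds of attaining a college degree rather than high school, and one for the log-odds of attaining a graduate degree rather than high school. High school has no equation of its own, because its coefficients are fixed at zero.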

The independent variables will be the demographic and socioeconomic factors:

- age

- gender

- income

- parental education

Assumptions

To apply a multinomial logistic regression model, the data should satisfy the following assumptions:

Independence of observations

The observations in the dataset should be independent of each other. This means that the outcome of one observation should not be influenced by, or correlated with, the outcome of any other observation, as can happen with repeated measurements on the same subject or with clustered data.

Linearity of the logit

The relationship between continuous independent variables and the log-odds of each outcome category (relative to the reference category) should be linear. This means that the log-odds should change at a constant rate for each unit change in the independent variable. Within the framework of the multinomial logit model, the log-odds for the various outcome categories are expressed as a linear combination of the predictor variables.

Absence of multicollinearity

The independent variables should not be too strongly related to each other. This means that there should be no high correlation, or multicollinearity, between the independent variables.
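As a rough first check for multicollinearity, the sketch below computes the Pearson correlation between two predictors and the corresponding variance inflation factor (VIF), which for exactly two predictors reduces to 1 / (1 - r²). The age and income values are invented, and the often-quoted VIF cut-offs of 5 or 10 are rules of thumb rather than strict limits.

```python
import math


def pearson_r(a, b):
    """Pearson correlation coefficient between two equally long lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def vif_two_predictors(a, b):
    """Variance inflation factor when the model has exactly two predictors: 1 / (1 - r^2)."""
    r = pearson_r(a, b)
    return 1.0 / (1.0 - r * r)


# Hypothetical predictor values
age = [23, 35, 31, 52, 46, 29, 61, 40]
income = [28, 45, 39, 70, 58, 33, 80, 50]  # in thousands
print("r =", round(pearson_r(age, income), 3))
print("VIF =", round(vif_two_predictors(age, income), 2))
```

With more than two predictors, each VIF comes from regressing one predictor on all the others, which needs a full regression routine.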

No outliers

The dataset should not have any outliers that can have a significant impact on the regression coefficients.

Large sample size

Multinomial logistic regression requires a relatively large sample size to ensure that the estimates of the coefficients and the predicted probabilities are accurate and reliable.

Standard errors are used to estimate the precision or uncertainty associated with the coefficient estimates. These standard errors help assess the statistical significance of the estimated coefficients and provide information about the variability of the model’s predictions. Violations of the assumptions above may lead to biased estimates of the coefficients, inaccurate predictions, and decreased statistical power. Therefore, it is important to check these assumptions before performing multinomial logistic regression and to take appropriate measures if any of them are violated.
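The significance of a single coefficient is commonly assessed with a Wald test, which divides the estimate by its standard error. A minimal sketch, assuming a large-sample standard normal reference distribution and a made-up coefficient and standard error; some packages, including SPSS, report the squared statistic z² as a chi-square value with 1 degree of freedom instead.

```python
import math


def wald_test(coef, std_err):
    """Wald z statistic and two-sided p-value using the standard normal distribution."""
    z = coef / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical estimate and standard error for one coefficient
z, p = wald_test(0.84, 0.31)
print(f"z = {z:.3f}, Wald chi-square = {z ** 2:.3f}, p = {p:.4f}")
```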

To illustrate the IIA assumption, consider a scenario where people can commute to work by car or by bus. Under IIA, adding a bicycle as an additional option does not affect the relative probabilities of choosing the car or the bus. This is what allows the choice among K alternatives to be represented as K-1 separate binary choices, in which one alternative acts as the “pivot” against which the remaining K-1 alternatives are assessed and compared. The assumption can fail, however. Take a specific example where the choices are a car and a blue bus, with odds of 1:1. If we introduce a red bus, individuals may be indifferent between the red and the blue bus, so the odds for car:blue bus:red bus could become 1:0.5:0.5. This keeps a 1:1 ratio of car to any bus but changes the car:blue bus ratio to 1:0.5. The red bus option is therefore not irrelevant, since a red bus is a perfect substitute for a blue bus, and a multinomial logit model, which must keep the car:blue bus ratio at 1:1, would mispredict these choices.
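The red bus example can be reproduced numerically. In this sketch the utilities are hypothetical and set equal so that car and blue bus start at odds of 1:1. Because a multinomial logit model computes each probability from its own exponentiated utility, adding a red bus with the same utility as the blue bus leaves the car:blue bus ratio at 1:1 and gives each option a probability of 1/3, rather than the more realistic 0.5, 0.25 and 0.25.

```python
import math


def mnl_probs(utilities):
    """Multinomial logit choice probabilities from a dict of utilities."""
    total = sum(math.exp(v) for v in utilities.values())
    return {k: math.exp(v) / total for k, v in utilities.items()}


# Hypothetical equal utilities, so car and blue bus start at odds of 1:1
before = mnl_probs({"car": 0.0, "blue bus": 0.0})
after = mnl_probs({"car": 0.0, "blue bus": 0.0, "red bus": 0.0})

print(before)                            # {'car': 0.5, 'blue bus': 0.5}
print(after)                             # each option gets 1/3
print(after["car"] / after["blue bus"])  # 1.0: the car:blue bus ratio is unchanged
```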

Setup in SPSS Statistics

SPSS Statistics is a statistical software suite developed by IBM for data management, advanced analytics, multivariate analysis, business intelligence, and criminal investigation.

Suppose we want to predict the type of car purchased (economy, midsize, or luxury) based on three independent variables: income, age, and gender.

In SPSS Statistics, we create four variables:

1. Independent variable – income

2. Independent variable – age

3. Independent variable – gender

4. Dependent variable – car purchased – which has three categories: economy, midsize, and luxury

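The independent variables income, age and gender will be added to the Covariate(s) list. This assumes gender is coded as a numeric 0/1 variable; a categorical predictor with more levels would normally be placed in the Factor(s) box instead.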

Test Procedure in SPSS Statistics

Step 1 – Click Analyze > Regression > Multinomial Logistic on the main menu. This will open the Multinomial Logistic Regression dialogue box.

Step 2 – Transfer the dependent variable, car purchased, into the Dependent: box and the covariates, income, age and gender, into the Covariate(s): box.

Step 3 – Click on the Statistics button. You will be presented with the Multinomial Logistic Regression: Statistics dialogue box.

Step 4 – Click the Cell probabilities, Classification table and Goodness-of-fit checkboxes.

Step 5 – Click on the Continue button and you will be returned to the Multinomial Logistic Regression dialogue box.

Step 6 – Click on the OK button. This will generate the results.

The results generate the following tables:

Goodness-of-Fit table

It provides two measures, Pearson and Deviance, that can be used to assess how well the model fits the data. Pearson presents the Pearson chi-square statistic, and Deviance presents the deviance (likelihood ratio) chi-square statistic. For both, large chi-square values with statistically significant p-values indicate a poor fit. These two measures of goodness-of-fit might not always give the same result.
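Both statistics compare observed counts with the counts expected under the fitted model. A minimal sketch over a flat list of cells; in practice the cells are combinations of covariate pattern and outcome category, and the observed and expected counts here are invented. The deviance is computed as G² = 2 Σ O ln(O/E), with empty cells contributing zero.

```python
import math


def pearson_chi_square(observed, expected):
    """Pearson X^2 = sum over cells of (O - E)^2 / E."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


def deviance(observed, expected):
    """Deviance G^2 = 2 * sum over cells of O * ln(O / E); empty cells contribute 0."""
    return 2 * sum(o * math.log(o / e) for o, e in zip(observed, expected) if o > 0)


# Hypothetical observed and model-expected counts for a handful of cells
observed = [30, 45, 25]
expected = [35.2, 40.1, 24.7]
print("Pearson:", round(pearson_chi_square(observed, expected), 3))
print("Deviance:", round(deviance(observed, expected), 3))
```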

Model Fitting Information Table

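It provides an overall test of your model. The “Final” row reports a likelihood ratio chi-square test comparing the fitted model with an intercept-only model, i.e., it tests whether all of the predictors' coefficients are zero. A statistically significant result indicates that the model with predictors fits significantly better than the intercept-only model.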

Pseudo R-Square Table

It provides the Cox and Snell, Nagelkerke and McFadden pseudo R² measures.
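All three pseudo R² measures are functions of the log-likelihoods of the intercept-only model (LL0) and the fitted model (LL1). The sketch uses hypothetical log-likelihoods for 200 observations: McFadden's measure is 1 - LL1/LL0, Cox and Snell's is 1 - exp(2(LL0 - LL1)/n), and Nagelkerke's rescales Cox and Snell's by its maximum possible value, 1 - exp(2·LL0/n), so that it can reach 1.

```python
import math


def pseudo_r2(ll_null, ll_model, n):
    """Pseudo R-squared measures from the null (intercept-only) and fitted log-likelihoods."""
    mcfadden = 1 - ll_model / ll_null
    cox_snell = 1 - math.exp(2 * (ll_null - ll_model) / n)
    max_cox_snell = 1 - math.exp(2 * ll_null / n)  # upper bound of Cox and Snell's measure
    nagelkerke = cox_snell / max_cox_snell
    return {"Cox and Snell": cox_snell, "Nagelkerke": nagelkerke, "McFadden": mcfadden}


# Hypothetical log-likelihoods for n = 200 observations
for name, value in pseudo_r2(ll_null=-210.4, ll_model=-185.9, n=200).items():
    print(f"{name}: {value:.3f}")
```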

Likelihood Ratio Tests Table

It shows which of your independent variables are statistically significant. This table is mostly useful for nominal independent variables because it is the only table that considers the overall effect of a nominal variable.

Parameter Estimates Table

It presents the parameter estimates, also known as the coefficients of the model.

Solution Approaches

There are two solution approaches: K-1 binary models for K classes, and simultaneous models.

K-1 binary models for K classes

In Multinomial Logistic Regression, we use K-1 logistic regression models to predict K classes. Let’s assume that we have K classes labeled 1, 2, …, K. We fit K-1 binary logistic regression models, where each model compares the probability of being in class i with the probability of being in class K for i=1,2,…,K-1.

For example, suppose we have three classes labeled 1, 2, and 3. We would fit two logistic regression models: one to compare the probability of being in class 1 to the probability of being in class 3, and another to compare the probability of being in class 2 to the probability of being in class 3.

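Then, for a new observation, each of the K-1 models gives a linear predictor: the log-odds of class i versus class K. Because the probabilities of all K classes must sum to 1, the probability of class K is 1 / (1 + the sum of e raised to each log-odds, over i = 1, 2, …, K-1), and the probability of each class i is e raised to its log-odds, multiplied by the probability of class K. Equivalently, the probability of belonging to class K is 1 minus the sum of the predicted probabilities for classes 1, 2, …, K-1.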
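The combination step can be sketched as follows, assuming the K-1 linear predictors (log-odds against class K) have already been computed from the fitted models; the two values used here are hypothetical.

```python
import math


def probs_from_log_odds(log_odds):
    """Convert K-1 log-odds against the pivot class K into K probabilities.

    log_odds[i] is ln(P(Y = i+1 | X) / P(Y = K | X)); the pivot class comes last.
    """
    exps = [math.exp(v) for v in log_odds]
    p_pivot = 1.0 / (1.0 + sum(exps))
    return [e * p_pivot for e in exps] + [p_pivot]


# Hypothetical linear predictors from the class-1-vs-3 and class-2-vs-3 models
probs = probs_from_log_odds([0.2, -0.7])
print([round(p, 3) for p in probs], round(sum(probs), 10))
```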

Simultaneous Models

Simultaneous models in multinomial logistic regression fit all K classes in one model, estimating every class's coefficients jointly from a single likelihood. In contrast to fitting K-1 separate binary logistic regression models, simultaneous models estimate all K class probabilities at once using a softmax function, which maps a K-dimensional vector of real numbers to a K-dimensional vector of probabilities that sum to one. Specifically, for an observation with predictor variables X, the K class probabilities are computed as follows:

$$P(Y=i \mid X) = \frac{e^{\beta_{i0} + \beta_{i1}X_1 + \beta_{i2}X_2 + \cdots + \beta_{ip}X_p}}{\sum_{m=1}^{K} e^{\beta_{m0} + \beta_{m1}X_1 + \beta_{m2}X_2 + \cdots + \beta_{mp}X_p}}$$

where

βij – jth coefficient estimate for the ith class

βi0 – intercept for the ith class

Xj – value of the jth predictor variable for the observation

Y – random variable representing the class label

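Because the simultaneous model maximizes a single likelihood over all classes, its estimates are mutually consistent and generally more efficient than those obtained from K-1 separately fitted binary models. It does not assume that the predictors affect every class in the same way: each class i has its own coefficients βi0, …, βip. Since adding the same values to every class's coefficients leaves the probabilities unchanged, one class's coefficients are usually fixed at zero as the reference category, which makes the simultaneous model equivalent to the K-1 log-odds equations described above. Fitting K-1 separate binary logistic regression models is simpler, but the resulting estimates generally differ somewhat from the joint fit.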
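A quick numerical check of the identifiability point: adding the same vector to every class's coefficients, or subtracting the reference class's coefficients so that the reference becomes all zeros, leaves the softmax probabilities unchanged. The coefficient values and the observation are hypothetical.

```python
import math


def softmax_probs(x, betas):
    """Class probabilities for observation x (leading 1.0 = intercept)."""
    scores = [sum(b * v for b, v in zip(beta, x)) for beta in betas]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


betas = [[0.3, -0.5], [1.0, 0.2], [-0.4, 0.9]]  # hypothetical (intercept, slope) per class
shift = [2.0, -1.5]                              # any vector added to every class
shifted = [[b + s for b, s in zip(beta, shift)] for beta in betas]
# Reference coding: subtract class 0's coefficients so class 0 becomes all zeros
reference_coded = [[b - r for b, r in zip(beta, betas[0])] for beta in betas]

x = [1.0, 0.7]
for name, params in [("original", betas), ("shifted", shifted), ("reference-coded", reference_coded)]:
    print(name, [round(p, 6) for p in softmax_probs(x, params)])
```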

Advantages

Multinomial logistic regression has several advantages, including:

Handling multiple classes

Multinomial logistic regression can handle situations where the response variable has more than two categories, making it a useful tool for multi-class classification problems.

Interpretable coefficients

Multinomial logistic regression produces coefficients that are interpretable and can be used to understand the relationship between the predictor variables and the class probabilities.

Model flexibility

Multinomial logistic regression is linear in its parameters, but it can capture nonlinear relationships between the predictor variables and the class probabilities when transformed predictors, such as polynomial terms or interactions, are added. This makes it more flexible than a purely linear specification.

Probabilistic predictions

Multinomial logistic regression produces probabilistic predictions, which can be useful in situations where it is important to know the likelihood of an observation belonging to each class.

Easy implementation

Multinomial logistic regression is widely available in statistical software packages and is relatively easy to implement, making it a practical choice for many applications.

Accounting for covariates

Multinomial logistic regression can account for the effect of covariates on the class probabilities, which can be useful in situations where the relationship between the predictor variables and the response variable may differ across different groups of observations.

Challenges

Multinomial logistic regression, like any statistical method, has several challenges that can affect its performance and interpretation. Some of these challenges include:

Overfitting

Multinomial logistic regression models can be prone to overfitting, especially when the number of predictor variables is large relative to the number of observations. Overfitting can result in a model that performs well on the training data but poorly on new data.

Multicollinearity

Multinomial logistic regression does not require the predictor variables to be uncorrelated, but in practice there may be strong correlations, or multicollinearity, among them. This can lead to unstable and unreliable coefficient estimates with inflated standard errors.

Class imbalance

When the number of observations in each class is not balanced, the model may be biased towards the majority class, leading to poor performance for the minority classes.

Nonlinearity

Multinomial logistic regression assumes a linear relationship between the predictor variables and the log odds of each class, but in practice, this assumption may not hold, leading to poor model performance.

Categorical predictor variables

Multinomial logistic regression works with numeric predictors, but in practice there may be categorical predictor variables. These can be included by converting them into dummy (indicator) variables, one fewer than the number of levels, but predictors with many levels add many parameters, which can lead to sparse data, multicollinearity, and overfitting.
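Dummy coding itself is straightforward. This sketch creates K-1 indicator columns for a categorical predictor, omitting a chosen reference level so that the indicators are not perfectly collinear with the intercept (the so-called dummy-variable trap). The variable values ("urban", "rural", "suburban") are hypothetical.

```python
def dummy_code(values, reference):
    """Return the non-reference levels and one row of 0/1 indicators per value."""
    levels = sorted(set(values) - {reference})
    rows = [[1 if v == level else 0 for level in levels] for v in values]
    return levels, rows


levels, rows = dummy_code(["urban", "rural", "suburban", "urban"], reference="rural")
print(levels)  # ['suburban', 'urban']
print(rows)    # [[0, 1], [0, 0], [1, 0], [0, 1]]
```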

Model complexity

Multinomial logit models can become complex when there are many predictor variables, interactions, and higher-order terms. This can make the model difficult to interpret and prone to overfitting.

Real World Applications

Real-world predictive models play a crucial role in various domains and multinomial logistic regression has a wide range of real-world applications, particularly in fields where categorical outcomes with more than two classes are common. Some examples include:

Natural language processing

Multinomial LR classifiers can be used in text classification problems where the outcome variable has multiple categories, such as sentiment analysis of customer reviews (for example, positive, neutral, or negative). The learning process can be slower than for a Naive Bayes classifier, because the maximum entropy classifier requires iterative learning of its weights.

Healthcare

Multinomial logistic regression can be used to predict the probability of a patient belonging to different disease severity classes based on their symptoms and medical history.

Finance

Multinomial LR classifiers can be used to predict the probability of a company being assigned different credit ratings based on its financial statements and market performance.

Conclusion

The multinomial regression model has proven to be a valuable tool for analyzing categorical outcomes with more than two unordered categories. By estimating the coefficients associated with the predictor variables, this model allows us to understand the relationship between the predictors and the probabilities of each outcome category, while considering a reference category. The coefficients provide insights into the direction and magnitude of the effects, indicating how changes in the predictors influence the likelihood of belonging to different categories. Additionally, the model’s ability to handle multiple outcome categories simultaneously enhances its versatility in various research fields. However, interpretation and understanding of the coefficients should be done with caution, considering the reference category and the specific context of the study. Overall, multinomial logistic regression provides a robust framework for investigating complex categorical outcomes and contributes to our understanding of the factors influencing categorical responses.
