Introduction

The Adam optimizer is an algorithm used in deep learning to train neural networks by iteratively adjusting the model’s learnable parameters. It was first introduced in 2014 and is an extension of the stochastic gradient descent (SGD) algorithm, which is used for updating the weights of a neural network. The name “Adam” stands for Adaptive Moment Estimation, which refers to its adaptive learning rate and moment estimation capabilities. By tracking the historical gradients, Adam adjusts the effective learning rate for each parameter at every training step, which often results in faster convergence. Overall, the Adam optimizer is a powerful tool for improving the accuracy and speed of deep learning models.

The Adam (Adaptive Moment Estimation) optimizer is a popular optimization algorithm in machine learning, particularly in deep learning applications. It combines the benefits of two other optimization techniques, Momentum and the Adaptive Gradient Algorithm (AdaGrad), to provide an efficient and adaptive update of model parameters. By computing both the first moment (a moving average of the gradients) and the second moment (a moving average of the squared gradients) of the loss gradient, Adam adjusts the learning rate for each parameter individually, which tends to give smooth and fast convergence. This optimization technique has gained popularity because of its adaptive learning rates, robustness to noise, and suitability for handling sparse gradients, making it a go-to choice for training various machine learning models, including neural networks.

Table of contents

- Introduction

- What Are Optimizers in Deep Learning?

- What is Adam Optimizer?

- Adam is Effective

- Adam Configuration Parameters

- The Adam Algorithm for Stochastic Optimization

- Visual Comparison Between Optimizers

- Implementation

- Adam optimizer in PyTorch

- Advantages and Disadvantages of Adam Optimizer

- Conclusion

- References

What Are Optimizers in Deep Learning?

Optimizers are a key component in deep learning that help improve the accuracy and efficiency of neural networks. In layman’s terms, optimizers are algorithms that adjust the learnable parameters of a neural network in order to minimize the error or cost function during training.

During training, the neural network is fed input data and produces output predictions, which are then compared to the actual target values. The loss or cost function measures the difference between the predicted outputs and the actual target values. The optimizer’s job is to minimize this loss function by adjusting the network’s parameters.
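As a minimal sketch of this idea, the snippet below fits a single weight with plain gradient descent on a mean squared error loss. The data, the function names `mse_loss` and `mse_grad`, and the learning rate are all assumptions chosen for illustration; it is not how a deep learning framework implements training.

```python
# Toy data (an assumption for illustration): targets follow y = 2 * x exactly.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

def mse_loss(w):
    """Mean squared error between predictions w * x and targets y."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def mse_grad(w):
    """Derivative of the mean squared error with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

w = 0.0               # initial guess for the learnable parameter
learning_rate = 0.05  # step size, picked by hand for this toy problem

for step in range(100):
    # Move the parameter a small step against the gradient of the loss.
    w -= learning_rate * mse_grad(w)

print(f"learned w = {w:.4f}, loss = {mse_loss(w):.6f}")
```

The loop is the whole optimizer here: compute the gradient of the loss, then nudge the parameter in the direction that lowers it.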

There are several types of optimizers used in deep learning, including the popular stochastic gradient descent (SGD) algorithm, as well as more advanced optimizers like Adam and AdaGrad. These optimizers use different methods to adjust the parameters of the neural network, such as adaptive learning rates and momentum.

The goal of an optimizer is to help the neural network converge faster and more accurately by finding the optimal set of parameters that minimize the cost function. This is achieved by adjusting the weights and biases of the neural network in a way that reduces the error between the predicted and actual values.

The choice of optimizer and its settings can also affect overfitting, a common problem in deep learning where the neural network starts to memorize the training data instead of learning to generalize to new data. Regularization options that many optimizers expose, such as weight decay, are one way to counter it.

Overall, optimizers play a critical role in deep learning by helping neural networks learn and improve their accuracy over time. By using the right optimizer and adjusting its parameters, developers can help their neural networks achieve better performance and faster convergence during training.

What is Adam Optimizer?

The Adam optimizer is a popular algorithm used in deep learning that adjusts the parameters of a neural network at every training step to improve its accuracy and speed. Adam stands for Adaptive Moment Estimation, which means that it adapts the step size of each parameter based on running estimates of the first and second moments of its gradient.

In simple terms, Adam uses a combination of adaptive learning rates and momentum to make adjustments to the network’s parameters during training. This helps the neural network learn faster and converge more quickly towards the optimal set of parameters that minimize the cost or loss function.

Adam is known for its fast convergence and its ability to work well with noisy or sparse gradients. Because each update is normalized by the recent magnitude of the gradient, it also copes with parameters whose gradients differ widely in scale.

Overall, the Adam optimizer is a powerful tool for improving the accuracy and speed of deep learning models. By analyzing the historical gradients and adjusting the learning rate for each parameter in real-time, Adam can help the neural network converge faster and more accurately during training.

Adam is Effective

The Adam optimizer is considered to be effective for deep learning applications. It has been shown to perform well on a wide range of datasets and can help neural networks converge faster during training.

One of the main advantages of Adam is its robustness to noisy and sparse gradients, which are common in real-world applications.

Adam often converges faster than plain stochastic gradient descent, especially early in training. Its advantage in generalization to new data is less clear-cut: on some tasks, carefully tuned SGD with momentum matches or exceeds it. The optimal choice of optimizer therefore depends on the specific dataset and problem being solved.

Overall, the Adam optimizer is a powerful tool for improving the accuracy and speed of deep learning models. Its adaptive learning rate and momentum-based approach can help the neural network learn faster and converge more quickly towards the optimal set of parameters that minimize the cost or loss function.

Adam Configuration Parameters

The Adam optimizer has several configuration parameters that can be adjusted to improve the performance of a deep learning model. Here are some of the main Adam configuration parameters explained:

- Learning rate: This parameter controls how much the model’s parameters are updated during each training step. A high learning rate can result in large updates to the model’s parameters, which can cause the optimization process to become unstable. On the other hand, a low learning rate can result in slow convergence and may require more training steps to reach the optimal set of parameters.

- Beta1 and Beta2: These parameters control the exponential decay rates for the first and second moment estimates of the gradient, respectively. In other words, they determine how much weight the optimizer gives to older gradients when it tracks the gradient history of each parameter. Common defaults are 0.9 for Beta1 and 0.999 for Beta2.

- Epsilon: This parameter is used to avoid dividing by zero when calculating the update rule. It is a small value added to the denominator of the update rule to ensure numerical stability.

- Weight decay: This is a regularization term that can be added to the cost function during training to prevent overfitting. It penalizes large values of the model’s parameters, which can help improve generalization to new data.

- Batch size: Strictly speaking, this is a setting of the training loop rather than of Adam itself. It controls how many training examples are used in each training step. A larger batch size gives less noisy gradient estimates, but increases memory requirements and makes each step more expensive.

- Max epochs: This is also a training-loop setting rather than an Adam parameter. It caps the number of passes over the training data. Stopping before the model starts to memorize the training set can limit overfitting and improve generalization to new data.

Overall, adjusting these configuration parameters can help improve the performance and accuracy of a deep learning model trained using the Adam optimizer. By fine-tuning these parameters, developers can achieve faster convergence and better generalization to new data.
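To make the list above concrete, here is a small sketch of how the optimizer-level settings map onto the arguments of PyTorch's `torch.optim.Adam`. The values shown are PyTorch's documented defaults. The `nn.Linear` model is only a placeholder to supply parameters.

```python
import torch.nn as nn
from torch.optim import Adam

model = nn.Linear(4, 2)  # placeholder model, only here to supply parameters

optimizer = Adam(
    model.parameters(),
    lr=1e-3,             # learning rate (step size)
    betas=(0.9, 0.999),  # decay rates for the first and second moment estimates
    eps=1e-8,            # small constant added to the denominator
    weight_decay=0.0,    # L2 penalty on the parameters; 0 disables it
)

# Batch size and epoch count are not Adam arguments: they are set on the
# data loader and in the training loop, respectively.
print(optimizer.defaults["lr"], optimizer.defaults["betas"])
```

In practice the learning rate is usually the first value worth tuning, while the beta and epsilon defaults are often left unchanged.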

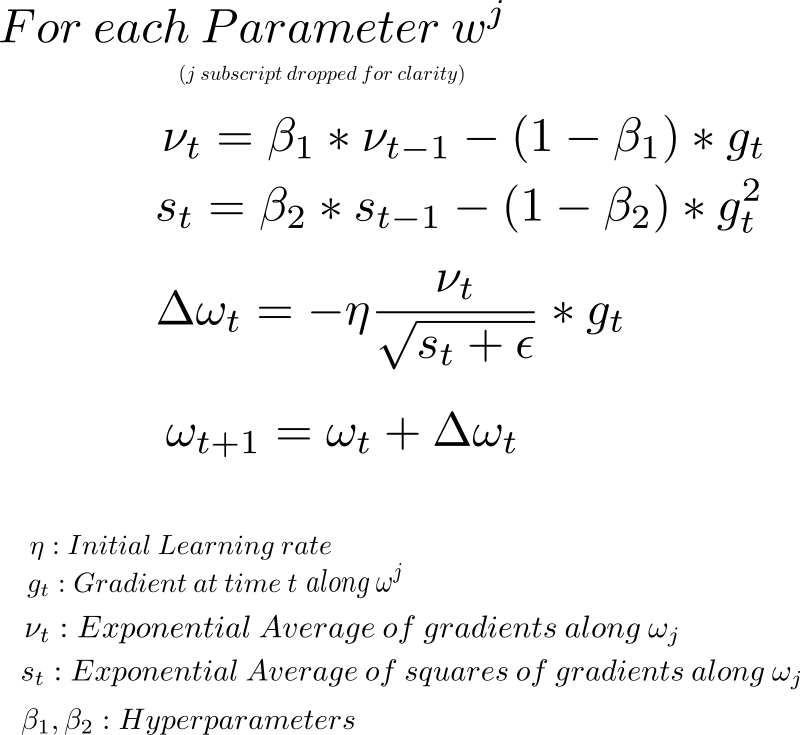

The Adam Algorithm for Stochastic Optimization

The Adam optimizer is an algorithm used for stochastic optimization in deep learning. It is designed to improve the performance of gradient-based optimization methods, such as stochastic gradient descent.

The algorithm works by maintaining two moving averages of the gradient and its square, which are used to estimate the first and second moments of the gradient. These estimates are then used to adjust the learning rate and weight update vectors during training.

During each training step, the algorithm calculates the gradient of the cost or loss function with respect to each parameter in the model. It then updates the moving averages and calculates the adaptive learning rate for each parameter.

The weight update vector is then calculated from the two moving averages. After correcting both for their bias towards zero in early steps, the first-moment estimate is divided by the square root of the second-moment estimate (plus epsilon) and scaled by the learning rate. The resulting update vector is then subtracted from the model’s parameters, moving them closer to the set of values that minimize the cost or loss function.
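The steps above can be written out as a short pure-Python sketch for a single scalar parameter. The function name `adam_step` and the toy objective are assumptions made for illustration. Real implementations apply the same arithmetic elementwise to whole tensors.

```python
import math

def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter; returns (param, m, v)."""
    m = beta1 * m + (1 - beta1) * grad         # moving average of gradients
    v = beta2 * v + (1 - beta2) * grad * grad  # moving average of squared gradients
    m_hat = m / (1 - beta1 ** t)               # bias correction (t starts at 1)
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v

# Toy objective (an assumption): f(x) = (x - 3)^2, with gradient 2 * (x - 3).
x, m, v = 0.0, 0.0, 0.0
for t in range(1, 2001):
    grad = 2 * (x - 3)
    x, m, v = adam_step(x, grad, m, v, t, lr=0.05)

print(f"x after 2000 steps: {x:.4f}")
```

Note that the step size depends on the ratio of the two averages rather than on the raw gradient, which is why rescaling the loss by a constant leaves the updates almost unchanged.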

Overall, the Adam algorithm is an effective and efficient method for optimizing deep learning models. Its adaptive learning rate and momentum-based approach can help the model converge faster and more accurately towards the optimal set of parameters that minimize the cost or loss function.

Visual Comparison Between Optimizers

Some popular optimization methods are stochastic gradient descent (SGD), momentum, Nesterov accelerated gradient (NAG), AdaGrad, AdaDelta, and RMSprop.

SGD is a simple optimization method that updates the model parameters based on the gradient of the cost function with respect to the parameters. It is a popular choice because of its simplicity, but it can be slow to converge and can get stuck in local minima.

Momentum optimization is a variant of SGD that adds a momentum term to the parameter updates. This helps the optimizer accelerate towards the minimum and overcome small gradients or noise in the data. However, it can also get stuck in saddle points or oscillate around the minimum.

NAG is another variant of momentum optimization that uses a “look-ahead” strategy to estimate the gradient of the cost function at the next step. This can help the optimizer avoid overshooting the minimum and lead to faster convergence. However, it can also suffer from oscillation or instability.

AdaGrad is an optimization method that adapts the learning rate for each parameter based on its historical gradients. This helps the optimizer make smaller updates to parameters that have been frequently updated and larger updates to parameters that have not. However, it can also suffer from a slow convergence rate and an excessive decrease in the learning rate over time.

AdaDelta is a variant of AdaGrad that uses an exponential decay rate to control the adaptive learning rate. This helps overcome the decreasing learning rate problem of AdaGrad and can lead to faster convergence. However, it can be slower than other optimizers in the early stages of training.

RMSprop is an optimization method that uses a moving average of the squared gradients to adapt the learning rate for each parameter. This helps the optimizer overcome the slow convergence of AdaGrad and can handle non-stationary problems. However, it remains sensitive to the choice of learning rate and, unlike Adam, does not correct its moving average for bias in the early steps, which can cause instability in some cases.

Overall, the choice of optimizer depends on the specific dataset and problem being solved. Each optimizer has its own strengths and weaknesses and may perform differently on different types of data. It is important to test different optimizers and compare their performance on the training and validation datasets before choosing the best one for the specific deep learning application.
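As a rough illustration of how these update rules differ, the sketch below runs simplified versions of SGD, momentum, AdaGrad, RMSprop, and Adam on the same toy quadratic. The objective, starting point, and hyper-parameters are assumptions chosen for the example, and NAG and AdaDelta are left out for brevity. The final losses say nothing about which optimizer is better in general.

```python
import math

def grad(p):
    """Gradient of the toy objective f(x, y) = 0.5 * (x**2 + 10 * y**2)."""
    return [p[0], 10.0 * p[1]]

def loss(p):
    return 0.5 * (p[0] ** 2 + 10.0 * p[1] ** 2)

def zeros(p):
    return [0.0] * len(p)

def sgd(p, g, s, t, lr=0.05):
    return [pi - lr * gi for pi, gi in zip(p, g)]

def momentum(p, g, s, t, lr=0.05, beta=0.9):
    # Velocity accumulates past gradients.
    s["v"] = [beta * vi + gi for vi, gi in zip(s.get("v", zeros(p)), g)]
    return [pi - lr * vi for pi, vi in zip(p, s["v"])]

def adagrad(p, g, s, t, lr=0.5, eps=1e-8):
    # Sum of all squared gradients so far (never decays).
    s["G"] = [Gi + gi * gi for Gi, gi in zip(s.get("G", zeros(p)), g)]
    return [pi - lr * gi / (math.sqrt(Gi) + eps) for pi, gi, Gi in zip(p, g, s["G"])]

def rmsprop(p, g, s, t, lr=0.05, alpha=0.9, eps=1e-8):
    # Exponentially decaying average of squared gradients.
    prev = s.get("s", zeros(p))
    s["s"] = [alpha * si + (1 - alpha) * gi * gi for si, gi in zip(prev, g)]
    return [pi - lr * gi / (math.sqrt(si) + eps) for pi, gi, si in zip(p, g, s["s"])]

def adam(p, g, s, t, lr=0.1, b1=0.9, b2=0.999, eps=1e-8):
    # Decaying averages of gradients and squared gradients, with bias correction.
    s["m"] = [b1 * mi + (1 - b1) * gi for mi, gi in zip(s.get("m", zeros(p)), g)]
    s["v"] = [b2 * vi + (1 - b2) * gi * gi for vi, gi in zip(s.get("v", zeros(p)), g)]
    m_hat = [mi / (1 - b1 ** t) for mi in s["m"]]
    v_hat = [vi / (1 - b2 ** t) for vi in s["v"]]
    return [pi - lr * mh / (math.sqrt(vh) + eps) for pi, mh, vh in zip(p, m_hat, v_hat)]

start = [5.0, 2.0]
optimizers = [("sgd", sgd), ("momentum", momentum), ("adagrad", adagrad),
              ("rmsprop", rmsprop), ("adam", adam)]

results = {}
for name, update in optimizers:
    p, state = list(start), {}  # each optimizer gets its own state
    for t in range(1, 201):
        p = update(p, grad(p), state, t)
    results[name] = loss(p)

print(f"initial loss = {loss(start):.4f}")
for name, final_loss in results.items():
    print(f"{name:10s} final loss = {final_loss:.6f}")
```

Changing the learning rates or the curvature of the objective changes the ranking, which is the practical reason to compare optimizers on the actual task.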

Implementation

Implementation of optimizers in deep learning requires defining the optimizer and setting its hyper-parameters, such as learning rate, momentum, decay rates, and batch sizes. These hyper-parameters determine how the optimizer updates the weights and biases of the neural network during training.

To implement an optimizer, first, the optimizer object needs to be created and passed to the training algorithm. During training, the gradients of the cost function with respect to the weights and biases are computed by backpropagation, and the optimizer then uses those gradients to update the weights and biases.

The hyper-parameters of the optimizer can be set manually or can be tuned automatically using techniques such as grid search or random search. The learning rate is the most important hyper-parameter and determines how fast or slow the optimizer should learn. A higher learning rate can lead to faster convergence, but may also cause the optimizer to overshoot the minimum and lead to unstable behavior. A lower learning rate can lead to slower convergence, but may also lead to a more stable optimizer.
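The effect of the learning rate is easy to see on a toy problem. The sketch below uses plain gradient descent (not Adam) on f(x) = x², where each update multiplies x by (1 - 2 * learning_rate). The function name and the three learning rates are assumptions picked to show slow, fast, and unstable behavior.

```python
def run_gradient_descent(learning_rate, steps=20, x0=1.0):
    """Minimize f(x) = x**2 (gradient 2x); return |x| after `steps` updates."""
    x = x0
    for _ in range(steps):
        x -= learning_rate * 2 * x
    return abs(x)

for lr in (0.01, 0.4, 1.1):
    distance = run_gradient_descent(lr)
    print(f"lr={lr}: distance from the minimum after 20 steps = {distance:.4g}")
```

With the smallest rate the iterate barely moves, with the middle one it converges quickly, and with the largest one every step overshoots further and the distance grows.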

Batch size is another important hyper-parameter that determines how many training examples are used in each weight update. A larger batch size can lead to faster convergence and more stable gradients, but can also require more memory and computation time. A smaller batch size can lead to slower convergence and noisier gradients, but can require less memory and computation time.

Implementation of optimizers also involves monitoring the training and validation loss during training to ensure that the model is improving and not overfitting. Various metrics such as training accuracy, validation accuracy, and loss line plots can be used to assess the performance of the optimizer.

Overall, implementing an optimizer requires setting the hyper-parameters, monitoring the training and validation loss, and adjusting the hyper-parameters as necessary to ensure the best performance of the deep learning model.
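The workflow described in this section can be sketched end to end in PyTorch. Everything specific here is an assumption for illustration: the synthetic regression data, the one-layer model, the 160/40 train and validation split, and the learning rate. For brevity the example uses the full training set as a single batch.

```python
import torch
import torch.nn as nn
from torch.optim import Adam

torch.manual_seed(0)

# Synthetic data (an assumption): y = 3x plus a little noise.
x = torch.randn(200, 1)
y = 3 * x + 0.1 * torch.randn(200, 1)
x_train, y_train = x[:160], y[:160]
x_val, y_val = x[160:], y[160:]

model = nn.Linear(1, 1)
loss_function = nn.MSELoss()
optimizer = Adam(model.parameters(), lr=0.1)

history = []  # (training loss, validation loss) per epoch
for epoch in range(200):
    model.train()
    optimizer.zero_grad()
    train_loss = loss_function(model(x_train), y_train)
    train_loss.backward()  # backpropagation computes the gradients
    optimizer.step()       # Adam applies the update

    model.eval()
    with torch.no_grad():  # no gradients needed for monitoring
        val_loss = loss_function(model(x_val), y_val)
    history.append((train_loss.item(), val_loss.item()))

print(f"first epoch: train={history[0][0]:.4f}, val={history[0][1]:.4f}")
print(f"last epoch:  train={history[-1][0]:.4f}, val={history[-1][1]:.4f}")
```

Plotting the two columns of `history` against the epoch number gives the loss curves mentioned above. A validation loss that rises while the training loss keeps falling is the usual sign of overfitting.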

Adam optimizer in PyTorch

In PyTorch, the Adam optimizer is implemented as part of the torch.optim module. To use the Adam optimizer for training a model, you need to import the optimizer and create an instance by passing the model’s parameters and the desired learning rate. Here’s a simple example:

    import torch
    import torch.nn as nn
    from torch.optim import Adam

    # Define your model (a simple neural network, for example)
    class SimpleNet(nn.Module):
        def __init__(self):
            super(SimpleNet, self).__init__()
            self.fc1 = nn.Linear(784, 128)
            self.fc2 = nn.Linear(128, 10)

        def forward(self, x):
            x = torch.relu(self.fc1(x))
            x = self.fc2(x)
            return x

    # Create an instance of the model
    model = SimpleNet()

    # Initialize the Adam optimizer with the model's parameters and a learning rate
    optimizer = Adam(model.parameters(), lr=0.001)

After initializing the optimizer, you can use it in the training loop to update the model’s parameters. For example:

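    # Training loop
    # Assumes num_epochs, data_loader, and loss_function are defined elsewhere,
    # e.g. loss_function = nn.CrossEntropyLoss() and a DataLoader of (inputs, labels).
    for epoch in range(num_epochs):
        for batch_data, batch_labels in data_loader:
            # Zero the gradients
            optimizer.zero_grad()

            # Forward pass
            outputs = model(batch_data)

            # Calculate loss
            loss = loss_function(outputs, batch_labels)

            # Backward pass: compute the gradients of the loss
            loss.backward()

            # Update the model's parameters with Adam
            optimizer.step()

Advantages and Disadvantages of Adam Optimizer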

The Adam optimizer has several advantages over other optimization algorithms in deep learning. First, it has adaptive learning rates that adjust based on the gradient magnitude, which makes it suitable for a wide range of problems and architectures. This adaptive learning rate also allows the optimizer to converge faster and more accurately, even in noisy or sparse datasets.

Another advantage of the Adam optimizer is that it scales well to large and complex datasets and models. Because it uses a decaying average of the past gradients, the update direction is smoothed, which dampens oscillations and can help the optimizer move through flat regions and shallow local minima. It does not, by itself, prevent overfitting.

Moreover, the Adam optimizer has a modest and predictable memory requirement, as it only needs to store the first and second moments of the gradient, that is, two extra values per parameter. This is more than plain SGD needs, but far less than second-order methods that store curvature information. For small models this keeps Adam practical even on limited resources, such as mobile devices or edge devices.
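One way to check what Adam stores is to inspect the optimizer state in PyTorch after a single update. This is a small sketch with a placeholder model and random input. The state keys `exp_avg` and `exp_avg_sq` are the names PyTorch's `Adam` uses for the first and second moment estimates.

```python
import torch
import torch.nn as nn
from torch.optim import Adam

model = nn.Linear(10, 5)  # placeholder model: 10 * 5 weights + 5 biases
optimizer = Adam(model.parameters(), lr=1e-3)

# Optimizer state is created lazily, so run one update first.
loss = model(torch.randn(8, 10)).sum()
loss.backward()
optimizer.step()

n_params = sum(p.numel() for p in model.parameters())
n_state = sum(
    optimizer.state[p]["exp_avg"].numel() + optimizer.state[p]["exp_avg_sq"].numel()
    for p in model.parameters()
)

print(f"model parameters:      {n_params}")
print(f"Adam moment estimates: {n_state}")
```

The moment buffers hold exactly twice as many values as the model has parameters, so the optimizer state grows linearly with model size.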

However, there are also some disadvantages to using the Adam optimizer. One disadvantage is that it can be sensitive to the initial learning rate and other hyper-parameters, which can affect the convergence and stability of the optimizer. In addition, the adaptive moment estimation used by the Adam optimizer can lead to poor convergence in certain cases, and variants such as AMSGrad were proposed to address this.

Another disadvantage of the Adam optimizer is that it can overfit in some cases, especially when the dataset is small or the model has a large number of learnable parameters. This can be mitigated by using regularization techniques or reducing the learning rate during training.
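Both mitigations can be sketched in PyTorch: `weight_decay` adds an L2 penalty inside Adam, and `torch.optim.lr_scheduler.StepLR` lowers the learning rate on a fixed schedule. The model, the random data, and all numeric values below are placeholders chosen for illustration, not recommended settings.

```python
import torch
import torch.nn as nn
from torch.optim import Adam
from torch.optim.lr_scheduler import StepLR

torch.manual_seed(0)

model = nn.Linear(10, 1)  # placeholder model
optimizer = Adam(model.parameters(), lr=0.01, weight_decay=1e-4)
scheduler = StepLR(optimizer, step_size=10, gamma=0.5)  # halve lr every 10 epochs

inputs, targets = torch.randn(32, 10), torch.randn(32, 1)  # placeholder data
loss_function = nn.MSELoss()

for epoch in range(20):
    optimizer.zero_grad()
    loss = loss_function(model(inputs), targets)
    loss.backward()
    optimizer.step()
    scheduler.step()  # advance the schedule once per epoch

final_lr = optimizer.param_groups[0]["lr"]
print(f"learning rate after 20 epochs: {final_lr}")
```

After 20 epochs the schedule has halved the learning rate twice, so the later updates are a quarter the size of the early ones.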

Overall, the Adam optimizer has many advantages and is widely used in deep learning applications, but it is important to understand its limitations and use it appropriately in different contexts.

Conclusion

In conclusion, the Adam optimizer is a popular and effective optimization algorithm for training deep learning models. It has several advantages over other optimization algorithms, such as adaptive learning rates, modest memory requirements, and often faster convergence. These advantages make it suitable for a wide range of problems and architectures, and it is widely used in deep learning applications.

However, the Adam optimizer also has some limitations and disadvantages that need to be taken into consideration when using it. These include sensitivity to hyper-parameters, poor convergence in certain cases, and potential for overfitting. To mitigate these limitations, it is important to tune the hyper-parameters carefully and use appropriate regularization techniques during training.

In general, the choice of optimization algorithm depends on the specific problem and architecture being used, and different optimization algorithms may have different strengths and weaknesses in different contexts. Therefore, it is important to experiment with different optimization algorithms and compare their performance to find the best one for a given task.

In summary, the Adam optimizer is a powerful tool for optimizing deep learning models, but it is important to use it appropriately and understand its strengths and limitations. By carefully tuning the hyper-parameters and using appropriate regularization techniques, the Adam optimizer can be a highly effective tool for training deep learning models and achieving high accuracy on a wide range of tasks.

References

Alabdullatef, Layan. “Complete Guide to Adam Optimization.” Towards Data Science, 2 Sept. 2020, https://towardsdatascience.com/complete-guide-to-adam-optimization-1e5f29532c3d. Accessed 28 Mar. 2023.

Brownlee, Jason. “Gentle Introduction to the Adam Optimization Algorithm for Deep Learning.” MachineLearningMastery.Com, 2 July 2017, https://machinelearningmastery.com/adam-optimization-algorithm-for-deep-learning/. Accessed 28 Mar. 2023.

Gupta, Ayush. “Optimizers in Deep Learning: A Comprehensive Guide.” Analytics Vidhya, 7 Oct. 2021, https://www.analyticsvidhya.com/blog/2021/10/a-comprehensive-guide-on-deep-learning-optimizers/. Accessed 28 Mar. 2023.

“Intuition of Adam Optimizer.” GeeksforGeeks, 22 Oct. 2020, https://www.geeksforgeeks.org/intuition-of-adam-optimizer/. Accessed 28 Mar. 2023.