Introduction

Overfitting and underfitting are the two most common problems encountered while doing machine learning.

This article discusses how overfitting and underfitting occur and the issues they cause. It explains what a target function is, how generalization comes into the picture for machine learning, and what a statistical fit is. It will help you develop an understanding of bias, variance and the bias-variance tradeoff, and it covers techniques to improve machine learning models.

What is a Target Function in Machine Learning?

The target function can be thought of as the true predictor: the ideal function that would predict any future data with 100% accuracy.

Using supervised learning, we try to select a function from a set of hypothetical functions while ensuring that the selected function is closest to the target function. The goal of any machine learning model is to get us as close to the target function as possible.

What is Generalization in Machine Learning?

The aim of machine learning is to infer general concepts from specific examples. This is referred to as induction. Generalization describes how well a model trained on a known, specific set of observations performs on unseen data points.

Generalization is the goal of machine learning algorithms. If a model performs well on any data point in the problem domain, it generalizes well. Overfitting and underfitting both reduce generalization, causing poor performance.

What is a Statistical Fit?

Statistics and machine learning are related fields that often overlap.

We use the term fit to understand how close we are to the target function. The goodness of fit refers to the measure used to estimate how well the model matches the target function.

Statistical inference is the main purpose of statistics. The aim of inference is to find statistical properties of the underlying data and to estimate the uncertainty about those properties. Along the way, however, the field of statistics developed the dimension reduction and regression techniques that are the cornerstone of machine learning applications.

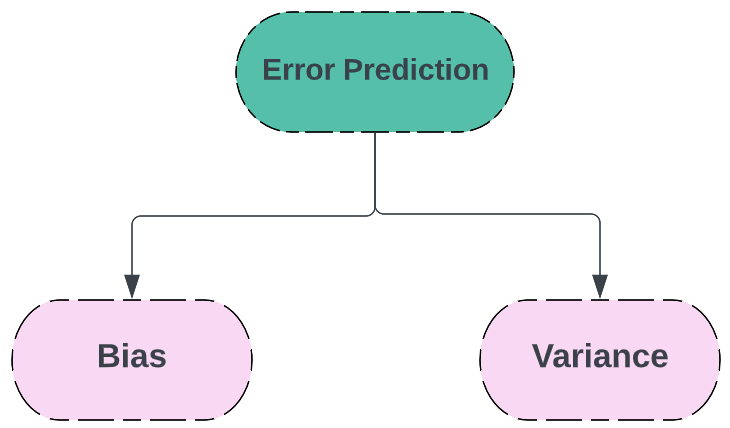

Bias and Variance

Machine learning prediction error can be broadly divided into two categories: bias and variance. Achieving a good statistical fit requires understanding the trade-off between the two.

Bias

Bias refers to the simplifying assumptions a model makes so that the target function is easier to learn. In practice, a high-bias model shows up as a high error rate on the training data.

A low error rate on the training data indicates low bias. Examples of low-bias machine learning algorithms include Decision Trees, k-Nearest Neighbors and SVMs. A high error rate on the training data indicates high bias. Examples of high-bias machine learning algorithms include Linear Regression, Linear Discriminant Analysis and Logistic Regression.

Variance

Variance measures how much the model's predictions change when it is trained on a different sample of data. In practice, it shows up as the difference in error rate between training and testing data: a large gap indicates high variance and a small gap indicates low variance.

Bias and variance pull in opposite directions: making a model more flexible typically lowers bias and raises variance, while making it simpler raises bias and lowers variance.
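The trade-off between bias and variance can be estimated empirically. The sketch below is only an illustration under stated assumptions: the target function (a sine wave), the noise level, and the helper name `bias_variance` are all made up for this example. It refits polynomial models of different degrees on many freshly sampled training sets and measures how far the average prediction is from the target (squared bias) and how much predictions vary between training sets (variance).

```python
import numpy as np

def true_fn(x):
    # Assumed target function, chosen only for this illustration.
    return np.sin(2 * np.pi * x)

def bias_variance(degree, n_datasets=200, n_points=30, noise=0.3, seed=0):
    """Estimate squared bias and variance of a polynomial fit of a given degree."""
    rng = np.random.default_rng(seed)
    x_test = np.linspace(0.05, 0.95, 50)
    preds = np.empty((n_datasets, x_test.size))
    for i in range(n_datasets):
        # Draw a fresh noisy training set from the same target function.
        x = rng.uniform(0, 1, n_points)
        y = true_fn(x) + rng.normal(0, noise, n_points)
        coefs = np.polyfit(x, y, degree)
        preds[i] = np.polyval(coefs, x_test)
    mean_pred = preds.mean(axis=0)
    # Squared bias: gap between the average prediction and the target.
    bias_sq = float(np.mean((mean_pred - true_fn(x_test)) ** 2))
    # Variance: spread of predictions across training sets.
    variance = float(np.mean(preds.var(axis=0)))
    return bias_sq, variance

for degree in (1, 3, 9):
    b, v = bias_variance(degree)
    print(f"degree={degree}  bias^2={b:.4f}  variance={v:.4f}")
```

Under these assumptions, the straight line (degree 1) should show the largest squared bias, while the degree-9 polynomial should show the largest variance.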

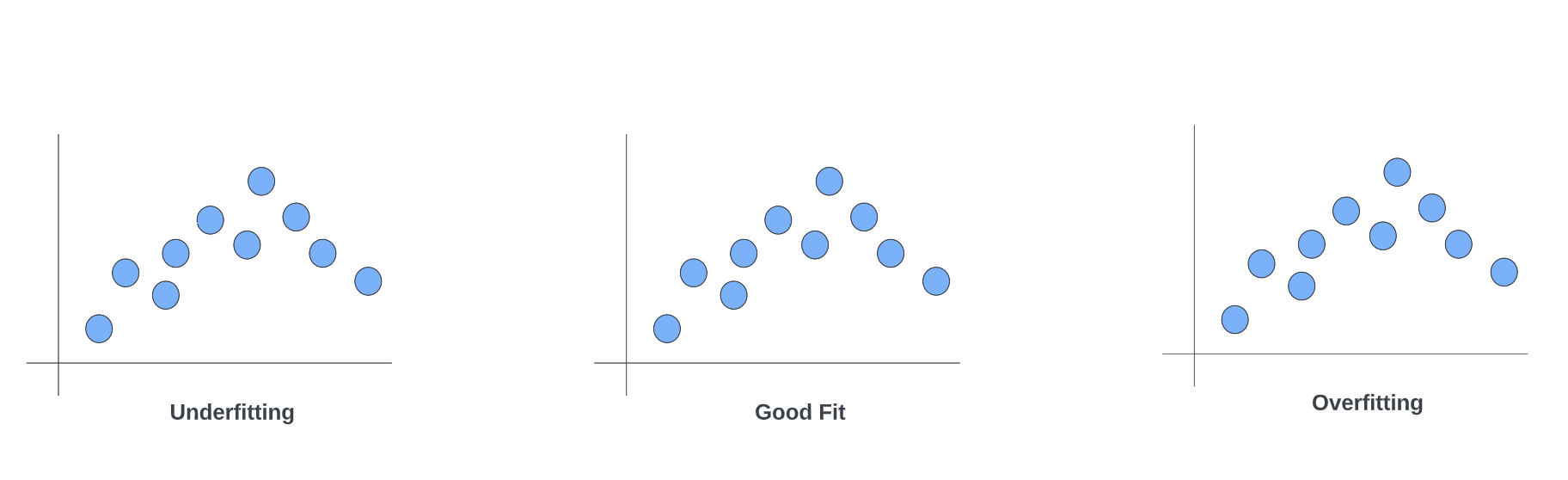

Overfitting in Machine Learning

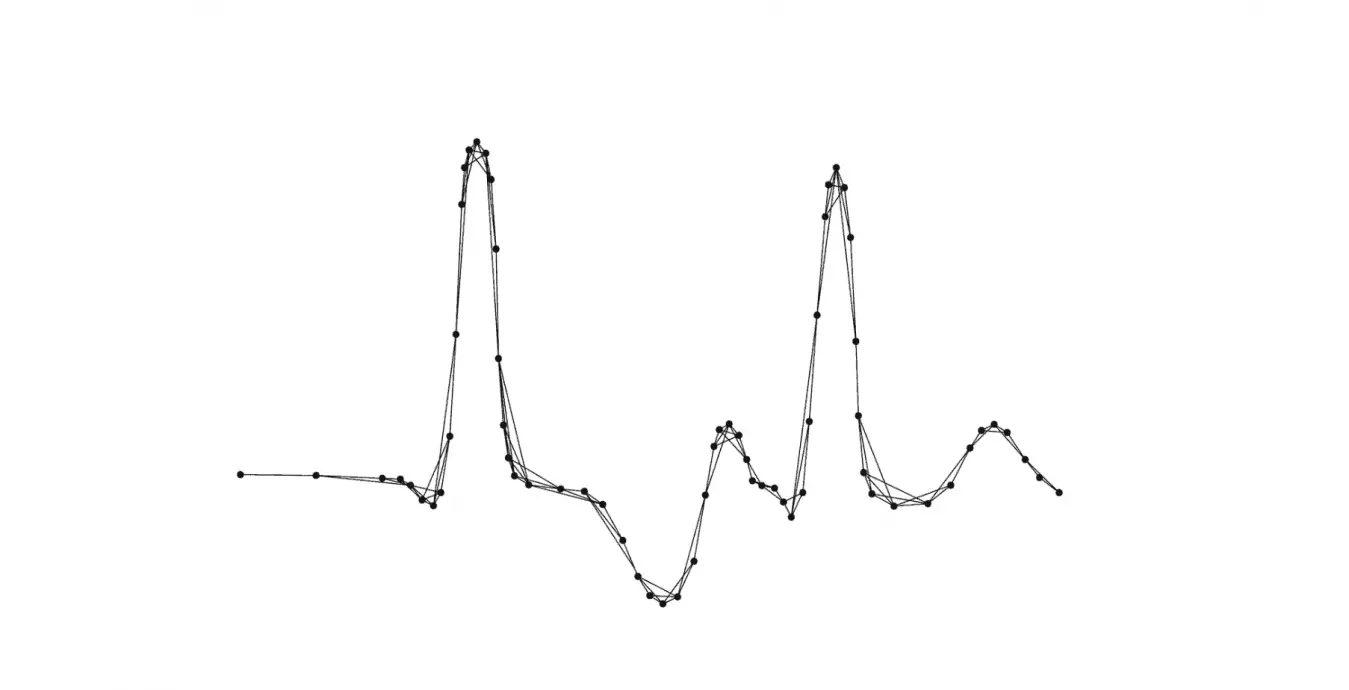

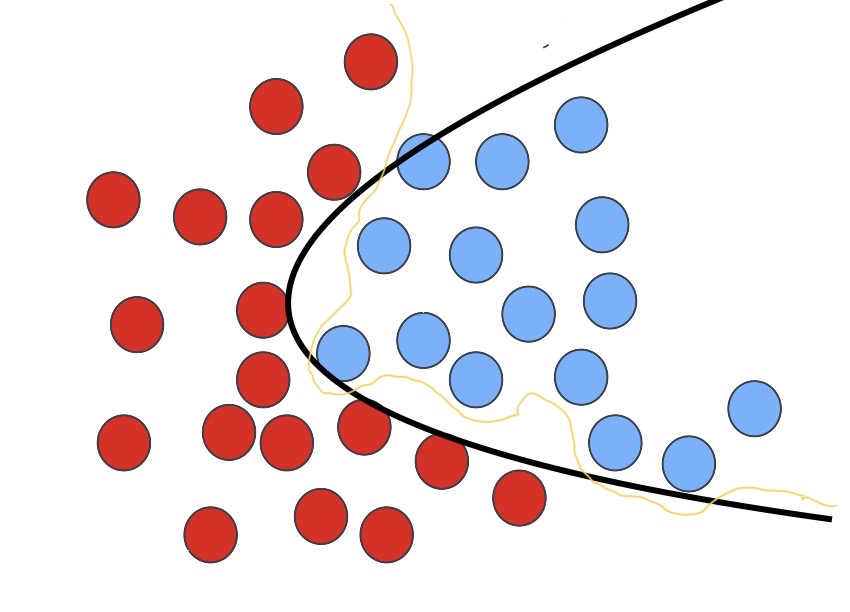

When a model learns the training data too well, it leads to overfitting. The details and noise in the training data are learned to the extent that it negatively impacts the performance of the model on new data.

Yellow line shows a model overfitting the data

The minor fluctuations and noise are learned as concepts by the model. This leads to the model failing when it encounters new data.
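A small experiment makes this behaviour visible. The sketch below assumes a synthetic noisy sine dataset and uses a hand-written k-Nearest Neighbors regressor (the helper names are hypothetical, not from any library). With k=1 the model memorizes every training point, noise included, so its training error is zero while its error on new data is noticeably worse than that of a smoother model with k=10.

```python
import numpy as np

rng = np.random.default_rng(42)

def make_data(n):
    # Synthetic data: a sine wave plus Gaussian noise (an assumption for this demo).
    x = rng.uniform(0, 1, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0, 0.3, n)
    return x, y

def knn_predict(x_train, y_train, x_query, k):
    # Distance from every query point to every training point.
    dists = np.abs(x_query[:, None] - x_train[None, :])
    nearest = np.argsort(dists, axis=1)[:, :k]
    # Predict the mean target of the k nearest training points.
    return y_train[nearest].mean(axis=1)

def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))

x_train, y_train = make_data(100)
x_test, y_test = make_data(1000)

errors = {}
for k in (1, 10):
    train_err = mse(y_train, knn_predict(x_train, y_train, x_train, k))
    test_err = mse(y_test, knn_predict(x_train, y_train, x_test, k))
    errors[k] = (train_err, test_err)
    print(f"k={k:2d}  train MSE={train_err:.3f}  test MSE={test_err:.3f}")
```

A large gap between training and test error, as seen for k=1, is the typical signature of overfitting.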

Flexible algorithms such as decision trees, which are used for classification and prediction, are prone to overfitting. A decision tree is a flowchart-like tree structure, where each internal node denotes a test on an attribute, each branch represents an outcome of the test, and each leaf node (terminal node) holds a class label.

What causes overfitting?

There are multiple reasons that can lead to overfitting:

- High variance and low bias

- The model is too complex

- The training dataset is too small

How to reduce overfitting?

- Increase training data.

- Reduce model complexity.

- Use ridge (L2) or lasso (L1) regularization.

- Use dropout for neural networks to tackle overfitting.

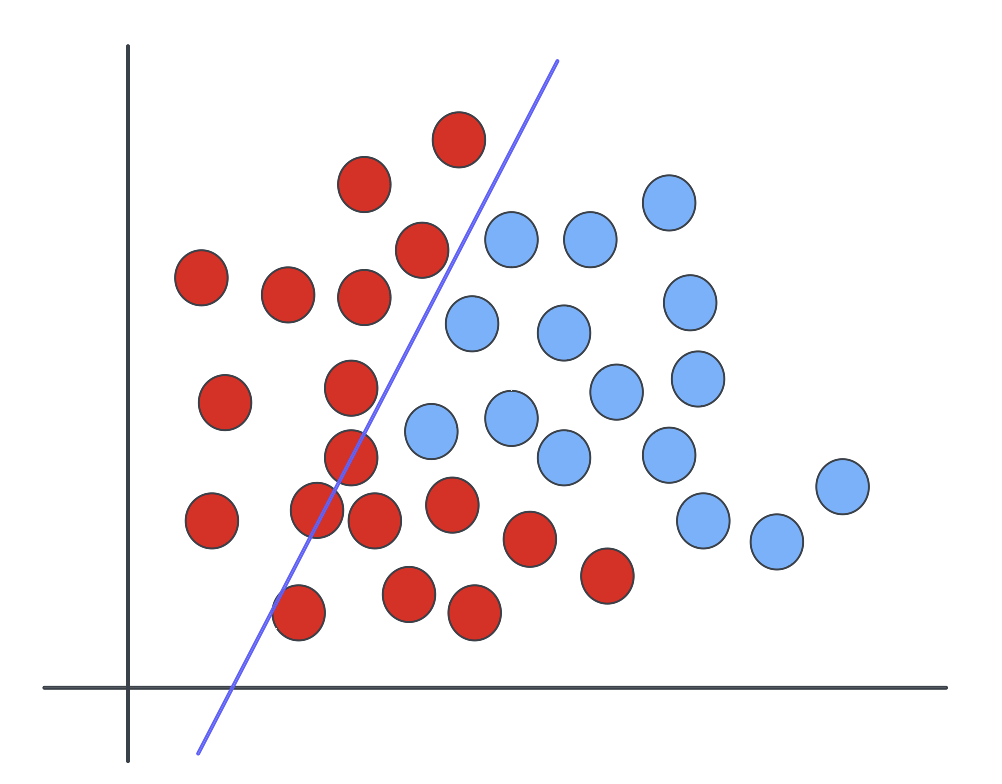

Underfitting in Machine Learning

When the model doesn’t fit the training data or the new data, it is called underfitting. It is easier to detect underfitting than overfitting.

What causes Underfitting?

- High bias and low variance

- The training dataset is too small.

- The model is too simple.

- The training data is not cleaned and contains noise.

How to reduce underfitting?

- Increase model complexity.

- Increase the number of features by performing feature engineering.

- Remove noise from the data.

- Increase the training duration, for example the number of epochs.
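The effect of a model that is too simple, and of adding a feature to fix it, can be sketched as follows. The quadratic dataset and the helper `fit_and_score` are assumptions made for this illustration. A straight line cannot follow the curve, so it has a high error on both the training and the test data. Adding a squared feature gives the model enough capacity to fit.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n):
    # Synthetic quadratic relationship with noise (an assumption for this demo).
    x = rng.uniform(-3, 3, n)
    y = x ** 2 + rng.normal(0, 0.5, n)
    return x, y

def fit_and_score(feat_train, y_train, feat_test, y_test):
    # Ordinary least squares with an intercept column.
    A_train = np.column_stack([np.ones(len(y_train)), feat_train])
    A_test = np.column_stack([np.ones(len(y_test)), feat_test])
    w, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)
    train_mse = float(np.mean((A_train @ w - y_train) ** 2))
    test_mse = float(np.mean((A_test @ w - y_test) ** 2))
    return train_mse, test_mse

x_train, y_train = make_data(200)
x_test, y_test = make_data(200)

# Underfit: a straight line on curved data.
lin_train, lin_test = fit_and_score(x_train, y_train, x_test, y_test)

# Feature engineering: add x^2 as a second feature.
quad_train, quad_test = fit_and_score(
    np.column_stack([x_train, x_train ** 2]), y_train,
    np.column_stack([x_test, x_test ** 2]), y_test,
)

print(f"linear:    train MSE={lin_train:.2f}  test MSE={lin_test:.2f}")
print(f"quadratic: train MSE={quad_train:.2f}  test MSE={quad_test:.2f}")
```

Unlike overfitting, the underfit model has a similar (and high) error on both datasets, which is why underfitting is easier to detect.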

What is a Good Fit?

Modifying an algorithm to fit a given data set more closely will lower bias, but it will also increase variance. Past a certain point, this causes the model to make incorrect predictions on new data.

In an ideal scenario, a model needs to sit between overfitting and underfitting, because both of these can lead to poor model performance. During the learning process, the model’s error on the training data decreases, and at first so does its error on the test dataset. If training continues for too long, however, the test error starts to rise again as the model begins to overfit.

Let’s talk about the techniques one can use to get a good fit, which help reduce both overfitting and underfitting.

Variance-bias tradeoff

One approach is to increase the complexity of the model to account for both bias and variance, decreasing the overall bias while letting the variance rise only to an acceptable level. This aligns the model with the training dataset without incurring significant variance errors.

Increasing the size of the training data set can also help to balance this trade-off to some extent, and it is the preferred method when dealing with overfitting models. With a large data set, users can also increase model complexity without introducing the variance errors that would otherwise pollute the model.
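The effect of a larger training set can be checked with a simple learning-curve experiment. The sketch below is an illustration under assumptions: synthetic sine data, a fixed degree-8 polynomial as the "complex" model, and a hypothetical helper `train_and_test_error`. The same model is trained on 15, 60 and 600 points, and its training and test errors are compared.

```python
import numpy as np

rng = np.random.default_rng(11)

def make_data(n):
    # Synthetic noisy sine data (an assumption for this demo).
    x = rng.uniform(0, 1, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0, 0.3, n)
    return x, y

x_test, y_test = make_data(2000)
DEGREE = 8  # A deliberately flexible model.

def train_and_test_error(n_train):
    x_train, y_train = make_data(n_train)
    coefs = np.polyfit(x_train, y_train, DEGREE)
    train_err = np.mean((np.polyval(coefs, x_train) - y_train) ** 2)
    test_err = np.mean((np.polyval(coefs, x_test) - y_test) ** 2)
    return float(train_err), float(test_err)

results = {n: train_and_test_error(n) for n in (15, 60, 600)}
for n, (train_err, test_err) in results.items():
    print(f"n={n:3d}  train MSE={train_err:.3f}  test MSE={test_err:.3f}")
```

With only 15 points the flexible model should show a wide gap between training and test error. With 600 points the two errors should be close, which is what allows the extra complexity to be used safely.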

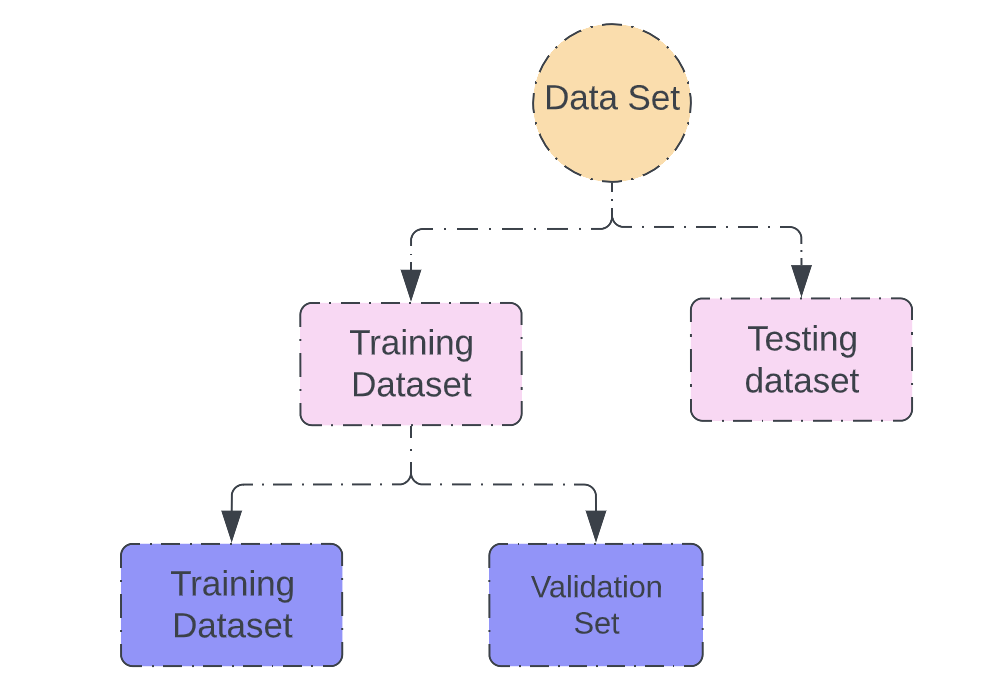

Introduce a validation set

Once the model has been trained on the training set, the validation set can be used to get an unbiased evaluation of the model. This helps ensure that generalization is maintained.

The right practice when you get a data set is to first separate out a testing dataset, then split the remaining observations into a training set and a validation set.
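The split described above can be sketched in a few lines. The function name `train_val_test_split` and the 60/20/20 proportions are assumptions chosen for illustration, not a prescribed recipe. The indices are shuffled once, the test portion is set aside first, and the rest is divided into validation and training sets.

```python
import numpy as np

def train_val_test_split(n_samples, val_frac=0.2, test_frac=0.2, seed=0):
    """Return disjoint index arrays for the training, validation and test sets."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_frac))
    n_val = int(round(n_samples * val_frac))
    # Set the test portion aside first, then split what remains.
    test_idx = idx[:n_test]
    val_idx = idx[n_test:n_test + n_val]
    train_idx = idx[n_test + n_val:]
    return train_idx, val_idx, test_idx

train_idx, val_idx, test_idx = train_val_test_split(100)
print(len(train_idx), len(val_idx), len(test_idx))
```

The test indices should only be used once, for the final evaluation, so that they stay untouched by any modelling decisions made with the validation set.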

Cross-validation

Cross-validation uses several validation datasets to evaluate ML models, and it is mainly used to detect and prevent overfitting. Each validation dataset is a portion of the available data that is held out from training, so it stands in for unseen real-world data.

K-fold cross validation: This allows us to overcome the bottleneck of relying on a single test split. We split the dataset into ‘k’ folds, then use one of the k folds as the validation set and the remaining k-1 folds as the training set. The process is repeated k times so that each fold serves as the validation set once, and the k scores are averaged.
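A minimal sketch of k-fold cross-validation follows. The helper names `k_fold_indices` and `cross_val_mse`, the synthetic sine data, and the use of polynomial models are assumptions for this example. Each fold is held out once as the validation set while the model is trained on the remaining folds, and the validation errors are averaged.

```python
import numpy as np

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) pairs, one per fold."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

def cross_val_mse(x, y, degree, k=5):
    """Average validation MSE of a polynomial fit across k folds."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(x), k):
        coefs = np.polyfit(x[train_idx], y[train_idx], degree)
        pred = np.polyval(coefs, x[val_idx])
        scores.append(np.mean((y[val_idx] - pred) ** 2))
    return float(np.mean(scores))

rng = np.random.default_rng(2)
x = rng.uniform(0, 1, 60)
y = np.sin(2 * np.pi * x) + rng.normal(0, 0.3, 60)

for degree in (1, 3):
    print(f"degree={degree}  5-fold CV MSE={cross_val_mse(x, y, degree):.3f}")
```

Because every observation is used for validation exactly once, the averaged score is less sensitive to one lucky or unlucky split than a single hold-out estimate.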

Hyperparameter tuning

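Hyperparameters are the settings chosen before training that define the model architecture and control the learning process, for example the depth of a decision tree or the learning rate of a neural network. Unlike model parameters, they are not learned from the data.

We can search for the combination of hyperparameters and architecture that works best for our data distribution. This search process is known as hyperparameter tuning.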
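One simple way to carry out this search is a grid search scored on a validation set. The sketch below is only an illustration: the two hyperparameters (polynomial degree and ridge penalty `alpha`), the grid values, and the helper names are assumptions made for this example. Every combination in the grid is trained on the training split and scored on the validation split, and the combination with the lowest validation error is kept.

```python
import itertools
import numpy as np

rng = np.random.default_rng(1)
x = rng.uniform(0, 1, 120)
y = np.sin(2 * np.pi * x) + rng.normal(0, 0.3, 120)
x_train, y_train = x[:80], y[:80]
x_val, y_val = x[80:], y[80:]

def design(x, degree):
    # Columns 1, x, x^2, ..., x^degree.
    return np.vander(x, degree + 1, increasing=True)

def fit_ridge(x, y, degree, alpha):
    # Closed-form ridge regression; for brevity the intercept is penalized too.
    A = design(x, degree)
    return np.linalg.solve(A.T @ A + alpha * np.eye(degree + 1), A.T @ y)

def val_mse(degree, alpha):
    w = fit_ridge(x_train, y_train, degree, alpha)
    return float(np.mean((y_val - design(x_val, degree) @ w) ** 2))

# Hypothetical grid of hyperparameter values to try.
grid = {"degree": [1, 3, 5, 9], "alpha": [1e-6, 1e-3, 1e-1]}
results = {
    (d, a): val_mse(d, a)
    for d, a in itertools.product(grid["degree"], grid["alpha"])
}
best = min(results, key=results.get)
print(f"best degree={best[0]}, alpha={best[1]}, validation MSE={results[best]:.3f}")
```

Grid search is exhaustive and therefore grows quickly with the number of hyperparameters. Random search over the same ranges is a common alternative when the grid becomes too large.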

Ensemble methods

Ensemble methods can be used to address the overfitting problem. With this approach, we combine the predictions of a group of predictive models, typically by averaging them.

A real-world example of an ensemble method is Tesla, which uses HydraNet in its self-driving cars. Tesla cars receive input from 8 different cameras, and HydraNet processes this input through the use of an ensemble CNN architecture.
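The averaging idea can be sketched with bagging, one common ensemble technique (it is used here for illustration and is not a description of HydraNet). The synthetic data and helper names are assumptions. Each ensemble member is a 1-nearest-neighbour model, which overfits badly on its own, trained on a bootstrap resample of the training set. The ensemble prediction is the average of the members' predictions.

```python
import numpy as np

rng = np.random.default_rng(7)

def make_data(n):
    # Synthetic noisy sine data (an assumption for this demo).
    x = rng.uniform(0, 1, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0, 0.3, n)
    return x, y

def one_nn_predict(x_train, y_train, x_query):
    # Predict the target of the single closest training point.
    nearest = np.abs(x_query[:, None] - x_train[None, :]).argmin(axis=1)
    return y_train[nearest]

x_train, y_train = make_data(100)
x_test, y_test = make_data(1000)

n_models = 25
all_preds = []
for _ in range(n_models):
    # Bootstrap: sample the training set with replacement.
    idx = rng.integers(0, len(x_train), len(x_train))
    all_preds.append(one_nn_predict(x_train[idx], y_train[idx], x_test))
all_preds = np.array(all_preds)

individual_mse = np.mean((all_preds - y_test) ** 2, axis=1)
ensemble_mse = float(np.mean((all_preds.mean(axis=0) - y_test) ** 2))

print(f"mean MSE of individual models: {individual_mse.mean():.3f}")
print(f"MSE of averaged ensemble:      {ensemble_mse:.3f}")
```

For squared error, the averaged prediction can never be worse than the average of the individual models' errors, which is why averaging tends to smooth out the noise that each member has memorized.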

Regularization

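Regularization simplifies the model by constraining it and making it less flexible, typically by adding a penalty on large parameter values. It can be used with both parametric and non-parametric models.

Note: Regularization is also known as shrinkage, because it shrinks the model's coefficients toward zero.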
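The shrinkage effect can be seen directly with ridge regression. The sketch below assumes synthetic sine data, a degree-9 polynomial model, and a hypothetical helper `ridge_fit` that uses the closed-form ridge solution. As the penalty strength `alpha` grows, the size of the fitted coefficients shrinks.

```python
import numpy as np

rng = np.random.default_rng(3)
x = rng.uniform(0, 1, 30)
y = np.sin(2 * np.pi * x) + rng.normal(0, 0.3, 30)

def ridge_fit(x, y, degree, alpha):
    """Closed-form ridge regression on polynomial features."""
    A = np.vander(x, degree + 1, increasing=True)
    penalty = alpha * np.eye(degree + 1)
    penalty[0, 0] = 0.0  # Leave the intercept unpenalized.
    return np.linalg.solve(A.T @ A + penalty, A.T @ y)

norms = []
for alpha in (1e-6, 1e-3, 1.0):
    w = ridge_fit(x, y, degree=9, alpha=alpha)
    # Size of the penalized coefficients (everything except the intercept).
    norms.append(float(np.linalg.norm(w[1:])))
    print(f"alpha={alpha:g}  coefficient norm={norms[-1]:.2f}")
```

Lasso uses the sum of absolute coefficient values as the penalty instead, which can push some coefficients to exactly zero. It has no closed-form solution, so it is not shown here.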

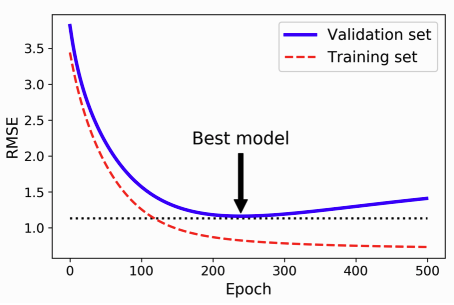

Early stopping.

Source: Hands-on machine learning with scikit-learn, keras and tensorflow, Aurélien Geron, 2019

As we continue to train the data, the error on the training data continues to reduce and ends up overfitting the model. This is happening because the model is learning the noise in the training dataset, leading to reduction of generalization.
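A minimal early-stopping loop might look like the sketch below. It is an illustration under assumptions: synthetic sine data, a degree-12 polynomial trained by plain gradient descent, and made-up values for the learning rate and `patience`. After each epoch the validation error is checked. The best weights seen so far are kept, and training stops once the validation error has not improved for `patience` epochs.

```python
import numpy as np

rng = np.random.default_rng(5)

def make_data(n):
    # Degree-12 polynomial features of noisy sine data (assumed for this demo).
    x = rng.uniform(-1, 1, n)
    y = np.sin(np.pi * x) + rng.normal(0, 0.3, n)
    return np.vander(x, 13, increasing=True), y

A_train, y_train = make_data(25)
A_val, y_val = make_data(200)

def mse(A, y, w):
    return float(np.mean((A @ w - y) ** 2))

w = np.zeros(A_train.shape[1])
initial_val = mse(A_val, y_val, w)
best_w, best_val, best_epoch = w.copy(), initial_val, 0
patience, lr = 200, 0.05

for epoch in range(1, 20001):
    # One gradient descent step on the training MSE.
    grad = 2 * A_train.T @ (A_train @ w - y_train) / len(y_train)
    w -= lr * grad
    val = mse(A_val, y_val, w)
    if val < best_val:
        # Validation error improved: remember these weights.
        best_w, best_val, best_epoch = w.copy(), val, epoch
    elif epoch - best_epoch >= patience:
        # No improvement for `patience` epochs: stop training.
        break

print(f"stopped at epoch {epoch}, best epoch {best_epoch}, "
      f"best validation MSE={best_val:.3f}")
```

The model that is kept is `best_w`, not the weights from the final epoch, so any overfitting that happened during the patience window is discarded.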

Conclusion

In this article, we looked at the problems faced during machine learning, with a focus on overfitting and underfitting. We covered bias and variance as sources of prediction error, the bias-variance tradeoff, and techniques to tackle these problems, such as validation sets, cross-validation, hyperparameter tuning, ensemble methods, regularization and early stopping.

References

Brownlee, Jason. “How to Avoid Overfitting in Deep Learning Neural Networks.” MachineLearningMastery.Com, 16 Dec. 2018, https://machinelearningmastery.com/introduction-to-regularization-to-reduce-overfitting-and-improve-generalization-error/. Accessed 15 Feb. 2023.

Contributors to Wikimedia projects. “Generalization.” Wikipedia, 26 May 2022, https://en.wikipedia.org/wiki/Generalization. Accessed 15 Feb. 2023.

—. “Inductive Reasoning.” Wikipedia, 6 Feb. 2023, https://en.wikipedia.org/wiki/Inductive_reasoning. Accessed 15 Feb. 2023.

—. “Overfitting.” Wikipedia, 10 Oct. 2022, https://en.wikipedia.org/wiki/Overfitting. Accessed 15 Feb. 2023.

Steve. “Andrej Karpathy Details Autopilot in 10 Minutes.” AutoPilot Review, 9 Nov. 2019, https://www.autopilotreview.com/teslas-andrej-karpathy-details-autopilot-inner-workings/. Accessed 15 Feb. 2023.

TensorFlow. “Solve Your Model’s Overfitting and Underfitting Problems – Pt.1 (Coding TensorFlow).” YouTube, Video, 11 Oct. 2018, https://www.youtube.com/watch?v=GMrTBtzJkCg. Accessed 15 Feb. 2023.

Understanding the Bias-Variance Tradeoff. http://scott.fortmann-roe.com/docs/BiasVariance.html. Accessed 15 Feb. 2023.