Introduction

In the vast expanse of artificial intelligence (AI), Natural Language Processing (NLP) holds a distinct place due to its transformative influence on human-machine interactions.

By interpreting the syntax, semantics, and context of human language, Natural Language Processing (NLP) allows machines to comprehend human language intricacies without requiring explicit programming for each individual command, thereby streamlining human-computer interaction.

NLP’s significance extends beyond this basic interaction, playing a crucial role in understanding the vast amount of unstructured data available in the form of text.

Table of contents

- Introduction

- NLP

- What is Natural Language Processing?

- Understanding NLP

- The Growth of natural language processing

- How NLP Works

- Core Techniques in NLP

- Applications of NLP

- Benefits of NLP

- Challenges in NLP

- NLP with Python

- How Does Natural Language Processing (NLP) Work?

- Techniques and Methods of Natural Language Processing (NLP)

- Conclusion

- References

NLP

On the user-facing end, advances in NLP enhance a wide range of applications, from sentiment analysis in customer satisfaction surveys to search engines that better comprehend user queries. They also enable personal assistants like Siri and Alexa to respond to commands more effectively, making human communication with these devices seamless. As we continue to innovate, these advancements in natural language processing promise an exciting future in the field of linguistics and beyond.

Recent generative AI products illustrate this reach: OpenAI’s ChatGPT uses advanced NLP techniques to simulate human-like text conversations, while Midjourney interprets natural-language prompts to generate images. Despite being in their early stages, these applications of NLP are already having a marked impact on industries ranging from customer service to design.

But what exactly is NLP? How does it revolutionize the way we communicate with machines? And what are the mechanisms that enable machines to understand and respond to our language? This exploration will elucidate the intricacies of NLP, shedding light on its core principles, its significance, practical applications, and the inherent challenges in realizing its full potential.

We will journey together through the fascinating landscape of NLP, providing you with an understanding of how it forms the foundation of groundbreaking AI products like ChatGPT and Midjourney, and how it is shaping the future of human-machine interaction.

What is Natural Language Processing?

Natural Language Processing, or NLP, represents a dynamic intersection of computer science, artificial intelligence, and linguistics. Its primary goal is to equip machines with the ability to comprehend, interpret, and generate human language in a contextually accurate and valuable manner. Acting as a conduit between humans and machines, NLP strives to enable computers to grasp not merely the words spoken or written by humans, but also the underlying context and intent that give these words meaning.

Understanding NLP

NLP combines elements of linguistics, computer science, and machine learning to process and analyze large amounts of natural language data. It allows machines to understand not just individual words, but also the context in which they’re used. From parsing grammar to interpreting intent and sentiment, NLP attempts to model human language as accurately as possible. NLP systems are the backbone of many AI-powered tools used in healthcare, finance, customer service, and education.

A key aspect of NLP is its foundation in linguistic theory. Morphology (word structure), syntax (sentence structure), semantics (meaning), pragmatics (contextual meaning), and discourse analysis all contribute to how language is processed. By integrating these principles with machine learning, NLP allows machines to engage in human-like language tasks, such as summarizing reports, answering questions, or translating texts.

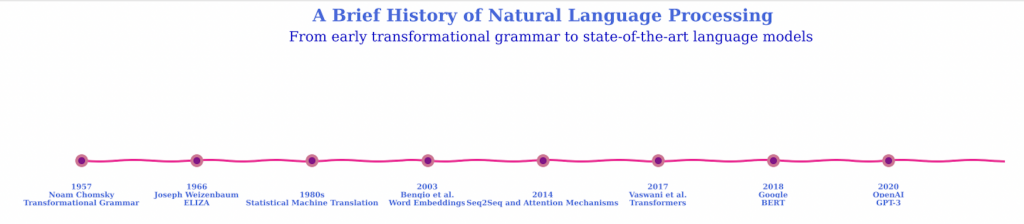

The Growth of natural language processing

The last 50 years have witnessed an evolution in the field of language processing, with one fundamental insight being that the myriad forms of linguistic knowledge can be effectively represented using a handful of formal models and theories. Drawn from the foundational toolkits of computer science, mathematics, and linguistics, these models have fostered considerable advancements in our language understanding.

State machines, one such model, can effectively handle the knowledge of phonology, morphology, and syntax. They encompass deterministic and non-deterministic finite-state automata and finite-state transducers. These models operate on states, transitions among states, and an input representation to derive meaningful results.
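As a rough illustration of states, transitions, and an input representation, here is a minimal deterministic finite-state automaton. The toy language it accepts ("b", then at least two "a", then "!") and all names in the sketch are assumptions chosen for illustration, not something taken from a particular NLP system.

```python
# Minimal deterministic finite-state automaton (DFA) sketch.
# Toy language (an illustrative assumption): "b", then two or more "a", then "!".

TRANSITIONS = {
    (0, "b"): 1,
    (1, "a"): 2,
    (2, "a"): 3,
    (3, "a"): 3,   # loop: any number of extra "a"
    (3, "!"): 4,
}
START_STATE = 0
ACCEPT_STATES = {4}


def accepts(text: str) -> bool:
    """Return True if the DFA ends in an accepting state after reading text."""
    state = START_STATE
    for symbol in text:
        key = (state, symbol)
        if key not in TRANSITIONS:
            return False  # no transition defined for this symbol: reject
        state = TRANSITIONS[key]
    return state in ACCEPT_STATES


for candidate in ["baa!", "baaaa!", "ba!", "baa"]:
    print(candidate, accepts(candidate))
```

The same table-driven idea extends to finite-state transducers, which emit an output symbol on each transition in addition to changing state.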

Complementing state machines, we have formal rule systems, another critical model. These systems, which can be probabilistic or non-probabilistic, include regular grammar, context-free grammar, and feature-augmented grammar. They further enhance our capabilities in dealing with linguistic complexities.

Models based on logic, such as first-order logic and lambda calculus, have traditionally been employed to model semantics and pragmatics. However, contemporary research has started focusing more on potentially robust techniques derived from non-logical lexical semantics.

Probabilistic models, by augmenting state machines, formal rule systems, and logic with probabilities, play a crucial role in resolving ambiguity problems inherent in language processing.

In the realm of information retrieval and word meaning interpretation, vector-space models, grounded in linear algebra, have proven invaluable. The application of these models usually involves a search through a state space representing input.

Machine learning, with tools like classifiers and sequence models, holds significant sway in many language processing tasks. These tools attempt to categorize single objects or sequences of objects into classes, using methodologies such as distinct training and test sets, cross-validation, and meticulous evaluation of trained systems.
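To make the idea of separate training and test sets concrete, the following sketch splits item indices into k folds for cross-validation using only the standard library. The function name `k_fold_splits` and its parameters are hypothetical; in practice a library routine would normally be used instead.

```python
import random


def k_fold_splits(n_items: int, k: int, seed: int = 0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k nearly equal folds
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


splits = list(k_fold_splits(n_items=10, k=5))
for train, test in splits:
    print("train:", sorted(train), "test:", sorted(test))
```

Each item appears in exactly one test fold, so every example is used for evaluation once and for training in the remaining rounds.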

How NLP Works

NLP operates through a series of steps designed to convert human language into structured data that machines can process. This typically involves:

- Tokenization: Breaking text into smaller units like words or phrases. This step helps in isolating meaningful language components.

- Part-of-Speech Tagging: Identifying nouns, verbs, adjectives, etc., in a sentence to understand grammatical relationships.

- Named Entity Recognition (NER): Detecting names of people, organizations, locations, dates, and quantities.

- Parsing: Analyzing grammatical structure and dependencies between words and clauses.

- Lemmatization and Stemming: Reducing words to their base form (e.g., “running” to “run”) to simplify and unify word usage.

- Sentiment Analysis: Evaluating the emotional tone expressed in text, such as positive, negative, or neutral.

These steps form the NLP pipeline that enables deeper understanding of language data. Advanced NLP systems also involve co-reference resolution (identifying pronoun references), word sense disambiguation, and pragmatic inference to improve comprehension.
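The steps above can be sketched as a toy pipeline in plain Python. This is a deliberately simplified illustration: the regular-expression tokenizer, the suffix-stripping function, and the tiny sentiment lexicons are all assumptions made for the example, and production systems use trained models for each stage.

```python
import re

# Tiny hand-made lexicons; real systems learn these from data.
POSITIVE = {"great", "love", "fascinating", "good"}
NEGATIVE = {"bad", "slow", "terrible", "hate"}


def tokenize(text: str) -> list[str]:
    """Split text into lowercase word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def crude_stem(token: str) -> str:
    """Strip a few common suffixes (a rough stand-in for real stemming)."""
    for suffix in ("ing", "ed", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def sentiment(tokens: list[str]) -> str:
    """Label text by counting lexicon hits."""
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


text = "The assistant responded quickly and the answers were great"
tokens = tokenize(text)
stems = [crude_stem(t) for t in tokens]
print(tokens)
print(stems)
print(sentiment(tokens))
```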

Core Techniques in NLP

NLP relies on several fundamental techniques:

- Bag of Words (BoW): Represents text as a vector of word counts, ignoring grammar and word order. It is useful for tasks like spam detection and document classification.

- TF-IDF (Term Frequency-Inverse Document Frequency): Highlights the words that matter most to a document relative to a corpus by giving more weight to words that are frequent in that document but rare across the corpus.

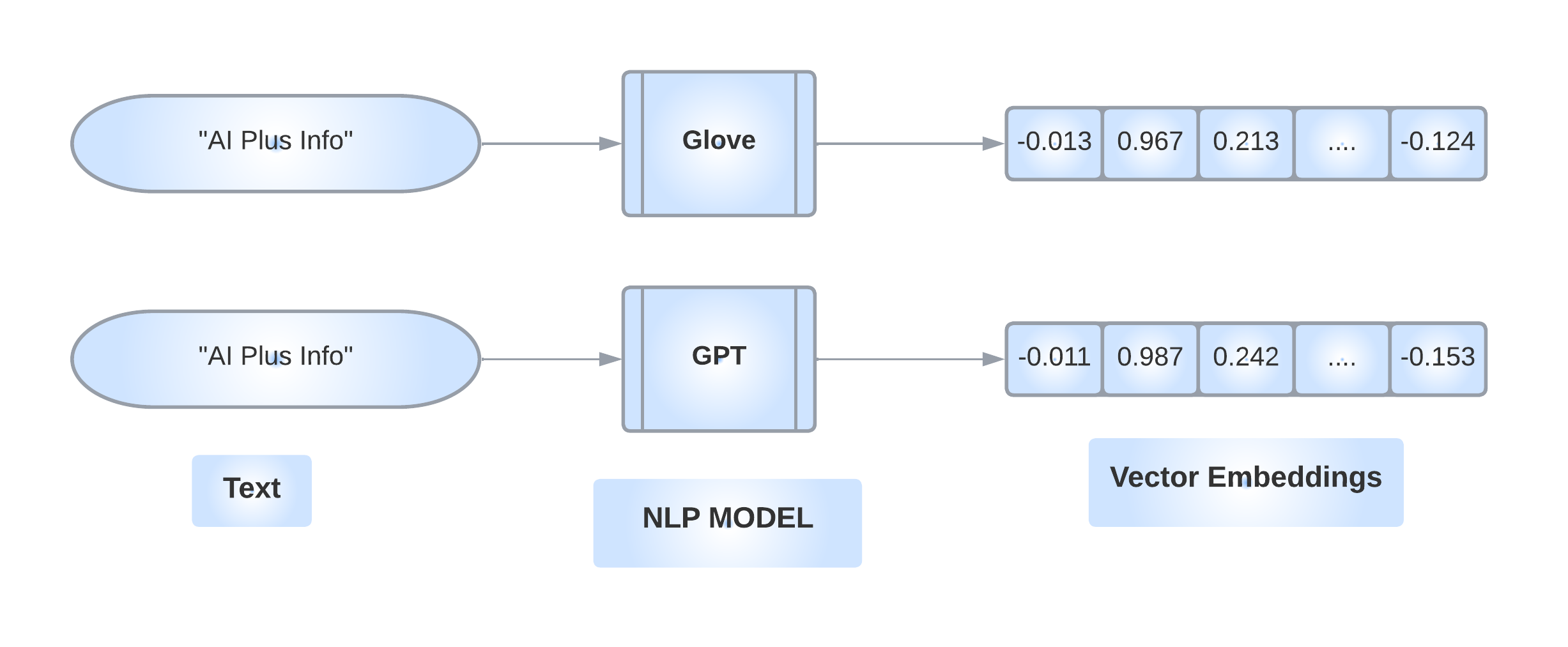

- Word Embeddings: Captures relationships between words using vector space models. Techniques like Word2Vec, FastText, and GloVe help in encoding semantic similarity.

- Transformers: Deep learning models like BERT, RoBERTa, and GPT process input by focusing on context and attention mechanisms. These models dominate state-of-the-art performance in most NLP benchmarks.

Additionally, recent advances include sequence-to-sequence models, attention mechanisms, and pre-training/fine-tuning paradigms. These have enabled scalable, generalizable language models capable of performing multiple tasks with minimal supervision.

Applications of NLP

NLP powers numerous real-world applications across industries:

- Virtual Assistants: NLP enables voice-based assistants like Siri, Google Assistant, and Alexa to process and respond to commands, schedule events, and answer queries.

- Chatbots: Automates customer service by understanding and responding to queries 24/7. NLP-driven chatbots are used in retail, banking, education, and healthcare.

- Machine Translation: Converts text between languages in tools like Google Translate and DeepL. Neural machine translation models use deep learning for more natural output.

- Sentiment Analysis: Helps businesses monitor public opinion through social media analysis and customer reviews. It is crucial for brand management, market research, and user feedback analysis.

- Text Summarization: Condenses long documents into concise summaries for news aggregation, legal contract analysis, or academic literature review.

- Speech Recognition: Translates spoken language into written text in real-time, enabling applications in transcription, voice typing, and accessibility tools.

- Information Extraction: Identifies structured information such as key terms, entities, and relationships from unstructured documents.

NLP is also integral to content recommendation, search engine optimization, and compliance monitoring.

Benefits of NLP

Implementing NLP offers significant advantages:

- Efficiency: Automates time-consuming language tasks, increasing productivity in document processing, email sorting, and knowledge management.

- Accessibility: Enhances user experience for individuals with disabilities via tools like screen readers, speech-to-text, and language simplification.

- Insight Extraction: Transforms unstructured text into actionable insights for business intelligence and decision-making.

- Improved User Experience: Personalizes interactions, understands customer intent, and delivers faster support via natural conversations.

- Scalability: Allows businesses to handle large volumes of queries, documents, or feedback that would otherwise require significant human effort.

- Multilingual Support: Enables organizations to interact with global audiences by offering real-time translation and language support.

NLP thus contributes to cost savings, faster turnaround times, and competitive advantage in data-driven operations.

Challenges in NLP

Despite its strengths, NLP faces notable challenges:

- Ambiguity: Words with multiple meanings can confuse models without sufficient context. For example, “bank” can refer to a financial institution or a riverbank.

- Contextual Understanding: Sarcasm, idioms, metaphors, and implied meanings are difficult for machines to interpret accurately.

- Language Diversity: Supporting numerous languages, dialects, and code-switching demands vast datasets and localized models.

- Data Privacy: Handling personal or sensitive information in healthcare, legal, and financial text raises ethical and legal concerns.

- Bias and Fairness: NLP models may perpetuate social or cultural biases present in training data, leading to discriminatory or skewed outputs.

- Resource Intensity: Training large models like GPT-4 requires substantial computational power and energy, limiting accessibility for smaller organizations.

These challenges are the focus of ongoing research in explainable AI, bias mitigation, low-resource language modeling, and secure NLP.
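The ambiguity of a word like “bank” can be illustrated with a simplified, Lesk-style heuristic: pick the sense whose definition shares the most words with the surrounding sentence. The sense inventory, glosses, and stopword list below are hand-written assumptions for this sketch; real systems rely on resources such as WordNet or on contextual models.

```python
# Simplified Lesk-style disambiguation with a hand-written sense inventory.
SENSES = {
    "bank": {
        "financial institution": "an institution that accepts deposits and lends money to customers",
        "riverbank": "the sloping land along the edge of a river or stream of water",
    }
}
STOPWORDS = {"a", "an", "the", "of", "to", "and", "or", "that", "at", "on", "in", "i", "my", "we"}


def content_words(text: str) -> set[str]:
    """Lowercase, split on whitespace, and drop stopwords."""
    return {w for w in text.lower().split() if w not in STOPWORDS}


def disambiguate(word: str, sentence: str) -> str:
    """Pick the sense whose gloss shares the most content words with the sentence."""
    context = content_words(sentence)
    overlaps = {
        sense: len(context & content_words(gloss))
        for sense, gloss in SENSES[word].items()
    }
    return max(overlaps, key=overlaps.get)


print(disambiguate("bank", "I opened an account to deposit money at the bank"))
print(disambiguate("bank", "We sat on the bank of the river and watched the water"))
```

The heuristic fails as soon as the context shares no words with any gloss, which is one reason models that learn from context have largely replaced it.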

NLP with Python

Python has become a go-to language for Natural Language Processing, thanks to its robust ecosystem of libraries tailored for this field. Let’s delve into a few of these impressive tools:

- NLTK (Natural Language Toolkit): NLTK provides easy-to-use interfaces and resources for a wide array of language processing tasks. From tokenization, stemming, and tagging to parsing and semantic reasoning, NLTK has functions to solve all these in a few lines of code.

- SpaCy: For more heavy-duty processing, SpaCy is a great option. It’s designed to handle large volumes of text efficiently. A highlight of SpaCy is its named entity recognition and dependency parsing features. Plus, it supports a multitude of languages.

- Gensim: When it comes to topic modeling and document similarity analysis, Gensim is a great library. It’s a library designed for handling large text collections, utilizing data streaming and incremental algorithms to handle operations that traditional packages might struggle with.

- PyTorch: Developed by Meta, PyTorch is an open-source machine-learning library based on the Torch library. It’s lauded for its simplicity and ease of use when it comes to developing complex neural networks. Models like BERT and GPT-2 have widely used PyTorch implementations, which benefit from PyTorch’s dynamic computation graph.

- TensorFlow/Keras: Created by the Google Brain team, TensorFlow is another open-source library for numerical computation and large-scale machine learning. TensorFlow uses data flow graphs in which nodes represent mathematical operations and edges represent the multidimensional data arrays (tensors) that flow between them. Keras is its high-level API for building and training models.

- LangChain: LangChain is an open-source framework for building applications on top of large language models. It provides building blocks for chaining prompts and model calls and for connecting models to external data sources and tools, which makes it useful for applications such as chatbots and question answering over documents.

- OpenAI: OpenAI’s GPT (Generative Pre-trained Transformer) models have revolutionized the NLP field. GPT-3, for example, is trained on a diverse range of internet text and can generate human-like text given some input. These models are used for tasks including translation, question answering, and even writing code.

- Transformers (by Hugging Face): This library has made working with large transformer models like BERT and GPT-2 much more accessible. It provides thousands of pre-trained models in over 100 languages, allowing developers to implement state-of-the-art NLP techniques with relative ease.

How Does Natural Language Processing (NLP) Work?

The journey of unraveling human language through NLP commences with raw text that is meticulously processed through a series of steps. These include tokenization, part-of-speech tagging, dependency parsing, constituency parsing, lemmatization and stemming, stopword removal, word sense disambiguation, named entity recognition (NER), and text classification. Each stage is instrumental in facilitating a machine’s understanding of human language, progressively transforming incomprehensible text into meaningful information. As we delve deeper into the world of NLP, we’ll dissect each of these stages, providing you with a comprehensive understanding of this fascinating process.

Tokenization

Tokenization is a crucial step in the NLP pipeline that involves breaking down a piece of text into individual units or ‘tokens’, which are usually words or phrases. Consider the sentence, “NLP is fascinating.” Tokenization would dissect this into separate units: “NLP”, “is”, and “fascinating.” This step can be likened to a surgeon’s precise incision, where a text body is meticulously segmented into tokens.

Here’s a simple Python snippet for tokenization using the Natural Language Toolkit (NLTK):

    import nltk

    # Download the tokenizer data (newer NLTK releases may ask for 'punkt_tab' instead)
    nltk.download('punkt')

    sentence = "NLP is fascinating"
    tokens = nltk.word_tokenize(sentence)
    print(tokens)
    # Output: ['NLP', 'is', 'fascinating']

Part-of-Speech Tagging

Once we have the tokens, the next stage involves categorizing them based on their grammatical role in a sentence, a process known as part-of-speech (POS) tagging. In the sentence “NLP is fascinating”, “NLP” is identified as a noun, “is” as a verb, and “fascinating” as an adjective. This is akin to assigning a unique identity badge to each token, which helps in understanding the context of the sentence.

Let’s see how we can do POS tagging using NLTK:

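    # Download the tagger model (newer NLTK releases may ask for
    # 'averaged_perceptron_tagger_eng' instead)
    nltk.download('averaged_perceptron_tagger')

    pos_tags = nltk.pos_tag(tokens)
    print(pos_tags)
    # Output: [('NLP', 'NNP'), ('is', 'VBZ'), ('fascinating', 'VBG')]
    # Note: the tagger labels "fascinating" as VBG (gerund/present participle) here,
    # although it functions as an adjective in this sentence.

Dependency Parsing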

Moving forward, dependency parsing enters the scene. This phase examines the grammatical structure of a sentence, determining how each word interrelates with others. Think of it as solving a puzzle where each piece (word) is interconnected.

Dependency parsing is all about discovering the intricate web of connections that exist among words within a sentence and then visualizing these ties in the form of a tree-like diagram. Every word gets its own distinct label, playing a crucial role in discerning the sentence’s overall meaning.

Here’s an example of dependency parsing using the spaCy library:

    import spacy
    from spacy import displacy

    # Requires the model: python -m spacy download en_core_web_sm
    nlp = spacy.load('en_core_web_sm')
    doc = nlp(sentence)

    # Print each word, its dependency label, and the word it depends on
    for token in doc:
        print(f'{token.text} <--{token.dep_}-- {token.head.text}')
    # Output:
    # NLP <--nsubj-- is
    # is <--ROOT-- is
    # fascinating <--acomp-- is

    # Visualize the dependency tree (inside a Jupyter notebook)
    doc = nlp("NLP is fascinating.")
    displacy.render(doc, style="dep", jupyter=True)

Constituency Parsing

Constituency parsing is a technique used in natural language processing to analyze the syntactic structure of sentences. It is similar to dependency parsing, which also analyzes the structure of sentences, but instead of breaking down sentences into individual words and their relationships, constituency parsing dissects sentences into sub-phrases or constituents based on their syntactic structure.

In simpler terms, constituency parsing breaks down a sentence into smaller parts based on the way the words in the sentence are grouped together.

Our example sentence, “NLP is fascinating”, can be divided into the noun phrase “NLP” and the verb phrase “is fascinating”. This is somewhat like dividing a cake into different layers, each with its unique flavor contributing to the whole.
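To show what such a grouping looks like as a data structure, the sketch below writes the tree for the example sentence by hand as nested tuples and lists every constituent. The bracketing and the labels (S, NP, VP, ADJP) are an assumed analysis for illustration, not the output of a parser.

```python
# A constituency tree as nested tuples: (label, child, child, ...); leaves are plain strings.
# The bracketing below is hand-written for illustration, not parser output.
tree = ("S",
        ("NP", ("NNP", "NLP")),
        ("VP", ("VBZ", "is"), ("ADJP", ("JJ", "fascinating"))))


def leaves(node) -> list[str]:
    """Return the words covered by a node, left to right."""
    if isinstance(node, str):
        return [node]
    words = []
    for child in node[1:]:
        words.extend(leaves(child))
    return words


def constituents(node) -> list[tuple[str, str]]:
    """List (label, phrase) for every labeled node in the tree."""
    if isinstance(node, str):
        return []
    found = [(node[0], " ".join(leaves(node)))]
    for child in node[1:]:
        found.extend(constituents(child))
    return found


for label, phrase in constituents(tree):
    print(f"{label}: {phrase}")
```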

Constituency parsing is a bit more complex and needs a dedicated parser. Here’s an example using the NLTK and a pre-trained parser:

    # Note: nltk.parse.stanford is deprecated in recent NLTK releases in favor of
    # nltk.parse.corenlp.CoreNLPParser; this snippet assumes a version that still ships it.
    from nltk.parse.stanford import StanfordParser

    scp = StanfordParser(path_to_jar='path/to/stanford-parser.jar',
                         path_to_models_jar='path/to/stanford-parser-3.9.2-models.jar')
    result = list(scp.raw_parse(sentence))
    print(result[0])

Replace ‘path/to/stanford-parser.jar’ and ‘path/to/stanford-parser-3.9.2-models.jar’ with the actual paths in your environment. This will give you a constituency parse tree for the sentence.

Lemmatization & Stemming

Lemmatization and stemming are techniques used in text preprocessing and natural language processing algorithms to reduce words to their root or base form. While they may appear similar, each has its own practical applications depending on the context and the specific requirements of the task at hand.

Stemming is a more rudimentary process, applying a set of rules to strip suffixes from words, and often, the stemmed word may not be a real word. On the other hand, lemmatization takes into account the morphological analysis of words – aiming to remove inflectional endings to return the base or dictionary form of a word, known as the lemma.
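The contrast can be sketched without any library: a rule-based stemmer only strips suffixes, while a lemmatizer looks words up in a dictionary. The suffix list and the four-entry `LEMMA_TABLE` below are hypothetical and far smaller than anything a real stemmer or lemmatizer uses.

```python
# Hypothetical mini-dictionary mapping inflected forms to lemmas.
LEMMA_TABLE = {"better": "good", "ran": "run", "mice": "mouse", "studies": "study"}


def rule_stem(word: str) -> str:
    """Strip the first matching suffix; the result may not be a real word."""
    for suffix in ("ies", "ing", "ed", "s"):
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word


def dictionary_lemma(word: str) -> str:
    """Look the word up; fall back to the word itself if it is unknown."""
    return LEMMA_TABLE.get(word, word)


for word in ["studies", "better", "mice"]:
    print(word, "->", rule_stem(word), "|", dictionary_lemma(word))
```

The stemmer turns “studies” into the non-word “stud” and leaves irregular forms untouched, whereas the lookup returns dictionary forms but only for words it knows.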

Consider the words “better” and “good”. The Porter stemmer will not reduce them to the same root, but lemmatization will, because it draws on more comprehensive linguistic knowledge.

    import nltk
    from nltk.stem import PorterStemmer, WordNetLemmatizer

    nltk.download('wordnet')  # WordNet data is required by the lemmatizer

    words = ["good", "better"]
    stemmer = PorterStemmer()
    lemmatizer = WordNetLemmatizer()

    print("Stemming results:")
    for word in words:
        print(stemmer.stem(word))
    # Output:
    # good
    # better

    print("\nLemmatization results:")
    for word in words:
        # pos='a' tells the lemmatizer to treat the word as an adjective
        print(lemmatizer.lemmatize(word, pos='a'))
    # Output:
    # good
    # good

While stemming can be faster, lemmatization provides more accurate results because it takes the word’s part of speech and dictionary form into account.

When dealing with tasks that require high precision and an understanding of what the words in a text mean, such as automatic summarization, lemmatization is the more suitable approach because it returns valid dictionary forms. On the other hand, if the task at hand is more general, stemming can be the more efficient approach.

NER & Text Classification

Named Entity Recognition is an information extraction method that identifies and classifies named entities in a text into predefined categories such as persons, organizations, locations, and so on. It’s like labeling a proper noun with its appropriate category, enabling machines to understand the significance of the entity in the context.

    import spacy
    from spacy import displacy

    text = ("Did you know that the concept of Natural Language Processing dates back to the 1950s, "
            "with the creation of the world's first chatbot, ELIZA, in the mid-1960s?")

    nlp = spacy.load("en_core_web_sm")
    doc = nlp(text)
    doc.user_data["title"] = "Entity Recognizer"

    # Highlight the recognized entities (inside a Jupyter notebook)
    displacy.render(doc, style="ent", jupyter=True)

Text Classification, on the other hand, involves classifying text into predefined groups.

By analyzing the text data, the algorithm can understand the theme or sentiment of the text and classify it accordingly. For example, a text classification model could categorize movie reviews as positive, negative, or neutral based on the words and phrases used in the review.
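As one possible illustration of such a classifier, here is a small multinomial Naive Bayes sketch with add-one smoothing, written with the standard library only. Naive Bayes is a choice made for this example (the text above does not prescribe an algorithm), and the four training reviews are invented.

```python
import math
from collections import Counter, defaultdict

# Tiny hand-written training set (illustrative only).
TRAIN = [
    ("a wonderful and moving film", "positive"),
    ("great acting and a great story", "positive"),
    ("boring plot and terrible acting", "negative"),
    ("a dull and terrible film", "negative"),
]


def train_naive_bayes(examples):
    """Count words per class for a multinomial Naive Bayes model."""
    word_counts = defaultdict(Counter)
    class_counts = Counter()
    for text, label in examples:
        class_counts[label] += 1
        word_counts[label].update(text.split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, class_counts, vocab


def predict(text, word_counts, class_counts, vocab):
    """Return the class with the highest log-probability (add-one smoothing)."""
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(class_counts[label] / total_docs)
        for word in text.split():
            if word not in vocab:
                continue  # ignore words never seen in training
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


model = train_naive_bayes(TRAIN)
print(predict("a great film", *model))
print(predict("terrible and boring", *model))
```

With so little data the model only reacts to words it has seen, so a neutral class or unseen vocabulary would need more examples than this sketch provides.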

Just as lemmatization aids in reducing words to their base form for a more precise understanding, NER and Text Classification help in deciphering the context and sentiment of the text, thereby enriching the overall text analysis and interpretation in natural language processing.

Techniques and Methods of Natural Language Processing (NLP)

NLP is a complex field that employs an array of techniques and methodologies, drawing from various domains such as machine learning, deep learning, linguistics, and semantics. Here, we’ll explore some commonly used techniques:

Bag of Words (BoW) & TF-IDF

Bag of Words (BoW) converts text into numerical features, allowing them to be utilized in machine learning algorithms. This method ignores the order of words but takes into account their frequency. While this can be a limitation in capturing the contextual essence of the text, it is a simple and effective way to represent text data numerically.

Each document is represented as a vector in a multidimensional space, where each dimension corresponds to a word in the overall vocabulary built from the corpus of documents. The value in each dimension could represent the count of times the word appears in the document.
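A minimal sketch of this representation, using only the standard library, might look as follows. The two mini-documents are hypothetical, and whitespace splitting stands in for a real tokenizer.

```python
from collections import Counter

# Two hypothetical mini-documents used only for illustration.
docs = ["the cat sat on the mat", "the dog sat"]

tokenized = [doc.lower().split() for doc in docs]
# Vocabulary: every distinct word in the corpus, in sorted order.
vocabulary = sorted({word for tokens in tokenized for word in tokens})


def bow_vector(tokens, vocabulary):
    """Count how often each vocabulary word occurs in one document."""
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]


vectors = [bow_vector(tokens, vocabulary) for tokens in tokenized]
print(vocabulary)
for vector in vectors:
    print(vector)
```

Each vector has one entry per vocabulary word, so documents of different lengths end up with vectors of the same size.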

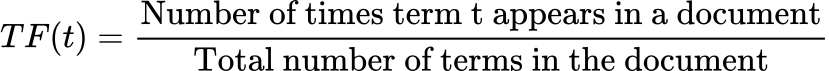

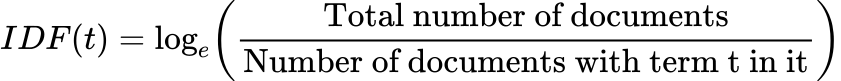

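Short for Term Frequency-Inverse Document Frequency, TF-IDF is a numerical statistic that reflects how important a word is to a document within a collection or corpus.

In its common textbook form, the Term Frequency (TF) of a term $t$ in a document $d$ is calculated as:

$$\mathrm{TF}(t, d) = \frac{\text{number of times } t \text{ appears in } d}{\text{total number of terms in } d}$$

The Inverse Document Frequency (IDF) is calculated as:

$$\mathrm{IDF}(t) = \log\left(\frac{N}{\mathrm{df}(t)}\right)$$

where $N$ is the total number of documents in the corpus and $\mathrm{df}(t)$ is the number of documents that contain $t$.

Therefore, the TF-IDF is calculated as:

$$\text{TF-IDF}(t, d) = \mathrm{TF}(t, d) \times \mathrm{IDF}(t)$$

This score is high for terms that appear frequently in a specific document but in few documents of the corpus, which offsets the fact that some words are simply more frequent in general. Libraries often apply smoothed or normalized variants of these formulas.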

Let’s consider the below two sentences as our input:

“The voice assistant responds in real time.”

“The Artificial Intelligence (AI) in the assistant is impressive.”

In this case, the vocabulary and the Bag of Words (BoW) vectors would be:

Vocabulary: {The, voice, assistant, responds, in, real, time, Artificial, Intelligence, (AI), is, impressive}

Sentence 1: {1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0}

Sentence 2: {2, 0, 1, 0, 1, 0, 0, 1, 1, 1, 1, 1}

Here “The” and “the” are counted as the same word, so the second sentence has a count of 2 for “The”, and “in” appears in both sentences.

As for the TF-IDF, a score is calculated for each word in each sentence based on the TF-IDF formula.
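For a worked calculation on these two sentences, the sketch below applies the plain textbook definitions, TF as the term count divided by the document length and IDF as log(N / number of documents containing the term). The regular-expression tokenizer and the function names are assumptions of this sketch, and library implementations usually add smoothing and normalization, so their numbers will differ.

```python
import math
import re
from collections import Counter

corpus = [
    "The voice assistant responds in real time.",
    "The Artificial Intelligence (AI) in the assistant is impressive.",
]

# Lowercase and keep alphabetic tokens only, so "The" and "the" are counted together.
docs = [re.findall(r"[a-z]+", text.lower()) for text in corpus]


def tf(term, doc):
    """Term frequency: occurrences of term divided by document length."""
    return Counter(doc)[term] / len(doc)


def idf(term, docs):
    """Inverse document frequency: log(N / number of documents containing term).

    Assumes the term occurs in at least one document.
    """
    containing = sum(1 for doc in docs if term in doc)
    return math.log(len(docs) / containing)


def tf_idf(term, doc, docs):
    """TF-IDF score of a term for one document of the corpus."""
    return tf(term, doc) * idf(term, docs)


for term in ["voice", "assistant", "impressive"]:
    scores = [round(tf_idf(term, doc, docs), 3) for doc in docs]
    print(term, scores)
```

“assistant” scores zero in both sentences because it occurs in every document, while “voice” and “impressive” each score above zero only in the sentence that contains them.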

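The Python code below computes both the BoW and TF-IDF representations of these two sentences with scikit-learn. Note that its default tokenizer lowercases the text and drops punctuation and single-character tokens, so the resulting vocabulary differs slightly from the one above (for example, “(AI)” becomes “ai”).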

    from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

    # define corpus
    corpus = ['The voice assistant responds in real time',
              'The Artificial Intelligence (AI) in the assistant is impressive']

    # initialize CountVectorizer and TfidfVectorizer
    bow_vectorizer = CountVectorizer()
    tfidf_vectorizer = TfidfVectorizer()

    # fit and transform the corpus
    bow_X = bow_vectorizer.fit_transform(corpus)
    tfidf_X = tfidf_vectorizer.fit_transform(corpus)

    # print the feature names (vocabulary) and vectors
    print("Bag of Words:")
    print(bow_vectorizer.get_feature_names_out())
    print(bow_X.toarray())

    print("\nTF-IDF:")
    print(tfidf_vectorizer.get_feature_names_out())
    print(tfidf_X.toarray())

Word Embeddings

A word vector, or embedding, is a dense numeric vector that represents a word. Embeddings exist so that words can be fed into machine learning models, which operate on numbers rather than on words.

These embeddings are not just random numbers: they preserve semantic and syntactic information about words, so that similar words have similar embeddings. The most widely used way to compare two embeddings is to measure the cosine similarity between them.

The cosine similarity of two vectors that point in the same direction is 1. It is 0 for orthogonal vectors, which is interpreted as the words being unrelated, and -1 for vectors that point in opposite directions.
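These three cases can be checked with a few lines of Python. The vectors below are made-up, low-dimensional stand-ins for real embeddings, chosen only to illustrate the calculation.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical 3-dimensional "embeddings"; real ones have hundreds of dimensions.
king = [0.8, 0.6, 0.1]
queen = [0.7, 0.7, 0.1]

print(cosine_similarity(king, king))                  # same direction
print(cosine_similarity(king, queen))                 # similar direction
print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))      # orthogonal
print(cosine_similarity([1.0, 0.0], [-1.0, 0.0]))     # opposite direction
```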

Word embeddings are thus a type of word representation in which words with similar meanings have similar representations. Their vectors typically have a few hundred dimensions, far fewer than the size of the vocabulary, yet they capture the semantic meaning and context of words. Word2Vec and GloVe are popular examples of word embedding methods.

Transformers

Before the advent of the Transformer, Recurrent Neural Networks (RNNs) were the leading neural network architecture for natural language processing tasks. RNNs excel at handling sequential data but grapple with some limitations. One such drawback is their inability to process input sequences concurrently, which leads to inefficiency when managing lengthy sequences. Furthermore, they tend to be more intricate and challenging to train compared to other neural network types.

The Transformer architecture emerged to address these issues by employing a self-attention mechanism as an alternative to RNNs. Self-attention enables the model to gauge the significance of each input element within a sequence, permitting it to selectively concentrate on specific input portions during processing. As a result, the Transformer can process input sequences in parallel, significantly enhancing its speed and efficiency compared to RNNs.
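The core of self-attention, scaled dot-product attention, can be sketched in pure Python as softmax(QKᵀ / √d) applied to the value vectors. This is a simplified illustration: the toy token vectors are invented, and the learned projection matrices, multiple heads, and positional information of a real Transformer are left out.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output: weighted average of the value vectors.
        output = [sum(w * v[i] for w, v in zip(weights, values))
                  for i in range(len(values[0]))]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Three hypothetical token vectors; queries, keys and values are the same vectors here.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights is computed independently of the others, which is what allows the positions of a sequence to be processed in parallel.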

The Transformer model, introduced in the paper “Attention Is All You Need” (Vaswani et al., 2017), has revolutionized the field of NLP. It has achieved state-of-the-art results in a variety of NLP tasks, including machine translation and text summarization.

It is based on an encoder-decoder structure, but it does not generate output using recurrence or convolutions. The resulting word vectors capture the meanings and contexts of words in a more powerful and accurate way than previous methods.

One of the most exciting developments in the field of natural language processing (NLP) in recent years has been the evolution of GPT (Generative Pretrained Transformer) models. GPT-n models are based on Transformers. OpenAI researchers introduced the first GPT model GPT-1 in 2018.

At the highest level, training a GPT model consists of two steps.

- The first step is unsupervised pre-training. The model is fed large amounts of unlabeled text (the training data) and, for each position, must predict the next token from the tokens that precede it. In doing so it learns general patterns of vocabulary, grammar, and meaning.

- The second step is fine-tuning. The pre-trained model is trained further on a smaller dataset for a specific task, such as classification or question answering, so that its general language knowledge is adapted to that task.

At generation time, the model produces text one token at a time, using the preceding context to predict what comes next. In theory these steps may seem simple, but in practice they require massive amounts of computation.
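GPT-style models are trained as autoregressive language models, that is, to predict the next token given the text so far. The toy sketch below illustrates that objective with a table of bigram counts standing in for the neural network, which is a strong simplification: a real GPT model conditions on the whole preceding context, not just the previous token. The corpus and function names are invented for the example.

```python
import random
from collections import Counter, defaultdict

# Tiny illustrative corpus; real models train on billions of tokens.
corpus = "nlp is fascinating . nlp is useful . nlp is everywhere .".split()

# "Training": count which token follows which (a bigram table stands in for the network).
next_counts = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    next_counts[current][following] += 1


def next_token_distribution(token):
    """Estimated probability of each possible next token."""
    counts = next_counts[token]
    total = sum(counts.values())
    return {tok: c / total for tok, c in counts.items()}


def generate(start, length, seed=0):
    """Sample one token at a time, each conditioned on the previous token."""
    rng = random.Random(seed)
    tokens = [start]
    for _ in range(length):
        dist = next_token_distribution(tokens[-1])
        if not dist:
            break  # no observed continuation
        choices, probs = zip(*dist.items())
        tokens.append(rng.choices(choices, weights=probs)[0])
    return tokens


print(next_token_distribution("is"))
print(" ".join(generate("nlp", 5)))
```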

Conclusion

In conclusion, Natural Language Processing stands at the crossroads of linguistics and AI, offering powerful tools to enhance human-computer interaction. These advancements hinge on sophisticated machine learning models, with deep learning techniques providing a substantial boost in the processing and interpretation of human speech and text. Despite its challenges, its potential to transform industries and society is immense. As we continue to refine and evolve NLP, the day when machines can understand and respond to all nuances of human language may not be far off.

References

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I. (2017, June 12). Attention is all you need. arXiv. https://arxiv.org/abs/1706.03762

Mittal, A. (2023, March 29). What is adversarial machine learning? Artificial Intelligence +. https://www.aiplusinfo.com/blog/what-is-adversarial-machine-learning/ (accessed June 5, 2023)

Thomas, A. (2020). Natural language processing with Spark NLP: Learning to understand text at scale. O’Reilly Media.