Introduction

Adversarial Machine Learning (AML) has recently captured the attention of Machine Learning and AI researchers. Why? In recent years, the development of deep learning (DL) has significantly improved the capabilities of machine learning (ML) models in a variety of predictive tasks, such as image recognition and processing unstructured data.

ML/DL models are vulnerable to security threats arising from the adversarial use of AI. Adversarial attacks are now a hot research topic in the deep learning world, and for good reason – they’re as crucial to the field as information security and cryptography. Think of adversarial examples as the viruses and malware of deep learning systems. They pose a real threat that needs to be addressed to keep AI systems safe and reliable.

With the emergence of cutting-edge advances such as ChatGPT’s incredible performance, the stakes are higher than ever regarding the potential risks and consequences of adversarial attacks against such important and powerful AI technologies. For instance, various studies have found that large language models, like OpenAI’s GPT-3, might unintentionally reveal private and sensitive details when prompted with certain words or phrases. In critical applications such as facial recognition systems and self-driving cars, the consequences can be severe. So, let’s dive into the world of adversarial machine learning, explore its aspects, and learn how to protect ourselves from these threats.

Table of contents

- Introduction

- What is Adversarial Machine Learning

- What is an Adversarial Example?

- Difference between adversarial White Box vs. Black Box attacks

- The Threat of Adversarial Attacks in Machine Learning

- How Adversarial Attacks on AI Systems Work

- What Are Adversarial Examples?

- Popular Adversarial Attack Methods

- Fast Gradient Sign Method (FGSM)

- Jacobian-based Saliency Map Attack (JSMA)

- DeepFool Attack

- Carlini & Wagner Attack (C&W)

- Generative Adversarial Networks (GAN)

- Zeroth-order optimization attack (ZOO)

- Defense Against Adversarial Attack

- Conclusion

- References

What is Adversarial Machine Learning

Adversarial Machine Learning is all about understanding and defending against attacks on AI systems. These attacks involve manipulating input data to trick the model into making incorrect predictions.

Leveraging adversarial machine learning helps enhance security measures and promote responsible AI, making it essential for developing reliable and trustworthy solutions.

Adversarial attack on a Machine learning model

In the early 2000s, researchers discovered that spammers could trick simple machine learning models like spam filters with evasive tactics. Over time, it became clear that even sophisticated models, including neural networks, are vulnerable to adversarial attacks. Although it was recently observed that real-world factors can make these attacks less effective, experts like Nicholas Frosst of Google Brain are still skeptical about new machine learning approaches that mimic human cognition. Big tech companies have started sharing resources to improve the robustness of their machine learning models and reduce the risk of adversarial attacks.

Source: https://arxiv.org/pdf/1412.6572.pdf

Caption: Adversarial examples cause neural networks to make unexpected errors by intentionally misleading them with deceptive inputs.

To us humans, these two images look the same, but a Google study in 2015 showed that a popular image classification neural network, GoogLeNet, saw the left one as a “panda” and the right one as a “gibbon”, with even more confidence! The right image, an “adversarial example,” has subtle changes that are invisible to us but completely alter what a machine learning algorithm sees.

Machine learning models, especially deep neural networks (DNNs), have completely dominated the modern digital world, enabling significant advances in a variety of industries through superior pattern recognition and decision-making abilities. However, their complex calculations are often difficult for humans to interpret, making them appear as “black boxes.” Additionally, these networks are susceptible to manipulation through small data alterations, leaving them vulnerable to adversarial attacks.

What is an Adversarial Example?

An adversarial example is an instance characterized by subtle, deliberate feature alterations that mislead a machine-learning model into making an incorrect prediction. Long before machine learning, perceptual illusions such as Adelson’s checkerboard illusion were used to study human cognition, revealing the implicit priors present in human perception.

Deep Learning models, like humans, are susceptible to such adversarial examples or ‘illusions’. The Fast Gradient Sign Method (FGSM) is one technique used to generate such examples, which are algorithmically designed to fool machine learning models. The connection between human perceptual illusions and adversarial examples in machines goes beyond the surface; they both reveal the features that are essential for system performance. The study of adversarial examples has expanded beyond deep learning, as various machine learning models, including logistic regression, linear regression, decision trees, k-Nearest Neighbor (kNN), and Support Vector Machines (SVM), are also vulnerable to these instances.

Difference between adversarial White Box vs. Black Box attacks

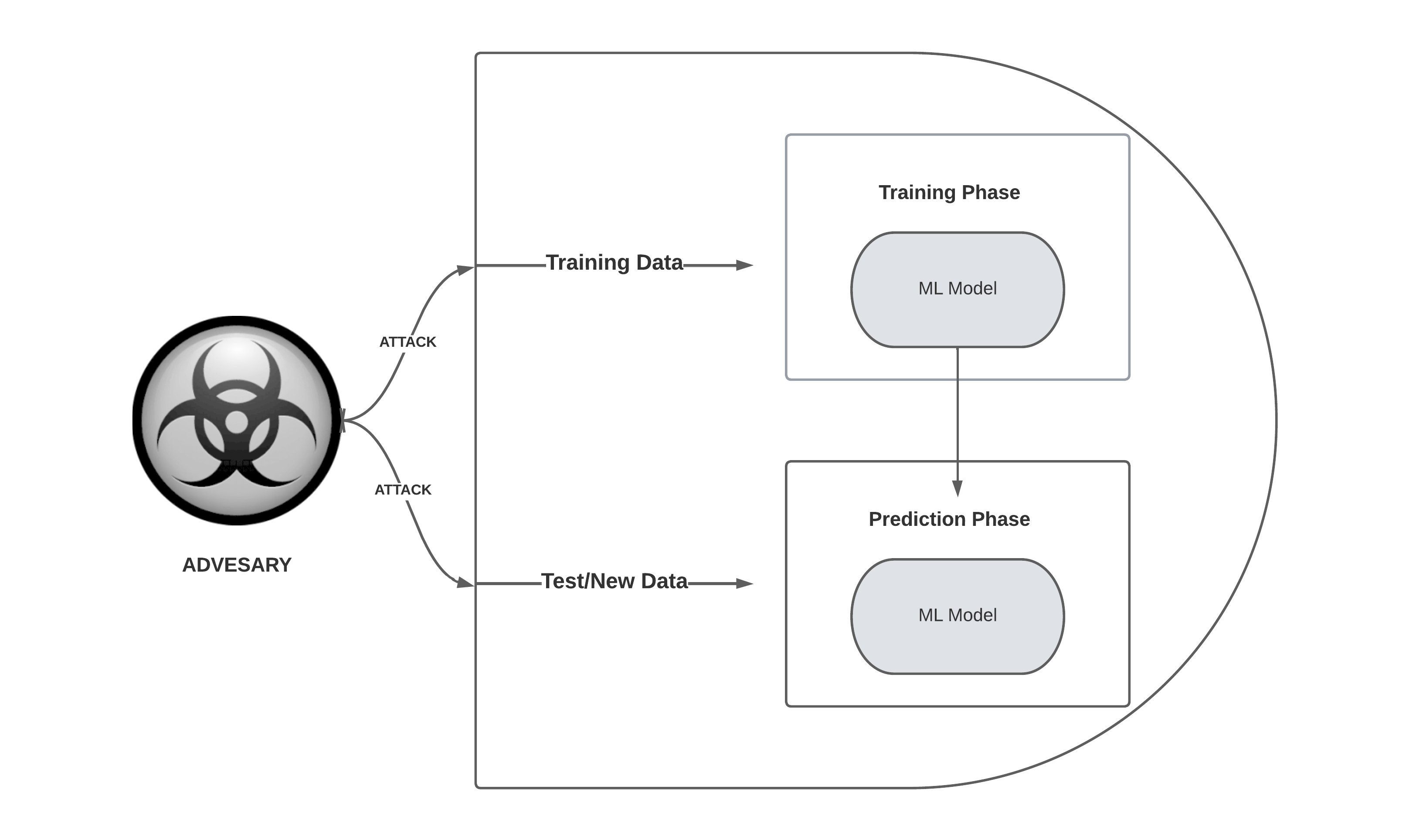

When it comes to Adversarial Machine Learning, there are two main types of attacks: white box and black box attacks. Understanding the differences between these strategies is crucial to better protect AI systems.

In white box attacks, the attacker has full knowledge of the targeted machine learning model, such as its architecture, weights, and training data. This access allows the attacker to craft adversarial examples with remarkable accuracy by working directly from the model’s internal components. Although white box attacks tend to be more effective, they require a higher level of expertise and access to the model’s internals, which can be difficult to obtain.

Black box attacks take place when the attacker has limited information about the target model. In this scenario, the attacker knows only the model’s inputs and outputs and lacks details about its architecture, weights, or training data. To craft adversarial examples under these conditions, the attacker has to rely on alternative methods like transferability, where an adversarial example made for one model is used to attack another model with similar architecture or training data. Tackling the opaque nature of black box models is also important for building dependable and transparent AI systems capable of resisting adversarial challenges.

Most adversarial ML attacks are currently white-box attacks, which can later be converted to black-box attacks by exploiting the transferability property of adversarial examples. The transferability property of adversarial ML means that adversarial perturbations generated for one ML model will often mislead other unseen ML models. To counter these attacks, various adversarial defenses such as retraining, timely detection, defensive distillation, and feature squeezing have been proposed.
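The transferability idea can be illustrated with a deliberately tiny sketch. It assumes two hypothetical linear (logistic) classifiers with similar but not identical weights; the weights, input, and epsilon below are made up for illustration and are not taken from any study. The attacker crafts a perturbation using only the substitute model and then checks whether it also fools the target.

```python
def score(w, b, x):
    """Linear score; the model predicts class 1 when the score is positive."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_on_linear_model(w, x, y, epsilon):
    """FGSM for a logistic model: the loss gradient w.r.t. x is (p - y) * w,
    so its sign is -sign(w) when y == 1 and +sign(w) when y == 0."""
    direction = -1 if y == 1 else 1
    return [xi + epsilon * direction * sign(wi) for xi, wi in zip(x, w)]

# Hypothetical weights: the attacker can inspect only the substitute model.
substitute_w, substitute_b = [2.0, -1.0, 0.5], 0.1
target_w, target_b = [1.5, -0.8, 0.9], 0.0

x, y = [0.4, 0.2, 0.6], 1
x_adv = fgsm_on_linear_model(substitute_w, x, y, epsilon=0.35)

print("target on clean input:      ", predict(target_w, target_b, x))
print("target on transferred input:", predict(target_w, target_b, x_adv))
```

In this toy setup the perturbation transfers because both models weight the features in similar directions; with less similar models the transfer can fail.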

The Threat of Adversarial Attacks in Machine Learning

A Microsoft research study explored the readiness of 28 organizations to handle adversarial machine learning attacks, revealing that the majority of practitioners in the industry were ill-equipped with the required tools and know-how to safeguard their ML systems. The study sheds light on the existing gaps in securing machine learning systems from an industry perspective and urges researchers to update the Security Development Lifecycle for industrial-grade software in the age of adversarial ML.

Adversarial attacks have some concerning characteristics that make them particularly challenging to deal with:

- Hard to detect: Adversarial examples are often created by making tiny adjustments to the input data, which are nearly impossible for humans to notice. Despite these small changes, machine learning models may still classify these examples incorrectly with high confidence.

- Transferable attacks: Surprisingly, adversarial examples created for one model can also deceive other models with different architectures, trained for the same task. This allows attackers to use a substitute model to generate attacks that will work on the target model, even if the two models have different structures or algorithms.

- No clear explanation: Currently, there is no widely accepted theory that explains why adversarial attacks are so effective. Various hypotheses, such as linearity, invariance, and non-robust features, have been proposed, leading to different defense mechanisms.

How Adversarial Attacks on AI Systems Work

Adversarial attacks on AI systems involve various strategies aimed at exploiting vulnerabilities in deep learning models. Let’s explore five known techniques that adversaries can use to compromise these models:

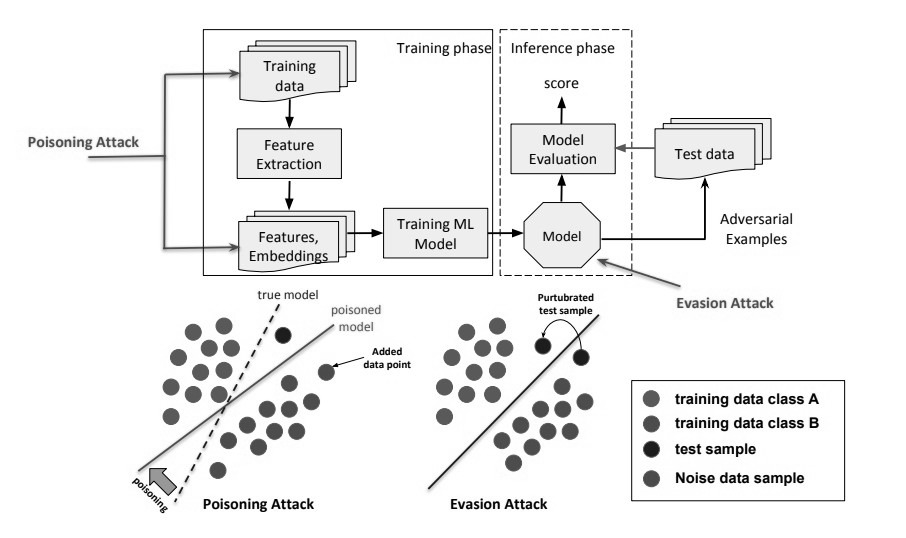

The image illustrates the difference between evasion and poisoning attacks. Evasion attacks target the testing phase, modifying input samples to fool the model. Poisoning attacks occur during the training phase, where adversaries corrupt the training data to compromise the model’s performance.

1. Poisoning Attacks

These attacks involve injecting false data points into the training data with the goal of corrupting or degrading the model. Poisoning attacks have been studied in various tasks, such as binary classification, unsupervised learning like clustering and anomaly detection, and matrix completion tasks in recommender systems. To defend against poisoning attacks, several approaches have been proposed, such as robust learning algorithms that are less sensitive to outliers or malicious data points, data provenance verification to ensure the integrity and trustworthiness of training data, and online learning algorithms that can adapt to changes in the data distribution.
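A minimal sketch of the idea, assuming a hypothetical one-dimensional nearest-centroid classifier and made-up data: injecting a few mislabeled points far from the real class 0 data drags that class's centroid away and degrades test accuracy.

```python
def fit_centroids(points, labels):
    """Nearest-centroid 'training': store the mean of each class."""
    centroids = {}
    for label in set(labels):
        members = [p for p, l in zip(points, labels) if l == label]
        centroids[label] = sum(members) / len(members)
    return centroids

def predict(centroids, x):
    """Predict the class whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

def accuracy(centroids, points, labels):
    hits = sum(predict(centroids, p) == l for p, l in zip(points, labels))
    return hits / len(points)

# Hypothetical clean training set: class 0 near 0.75, class 1 near 3.75.
train_x = [0.0, 0.5, 1.0, 1.5, 3.0, 3.5, 4.0, 4.5]
train_y = [0, 0, 0, 0, 1, 1, 1, 1]
test_x = [0.2, 1.2, 1.8, 2.8, 3.2, 4.2]
test_y = [0, 0, 0, 1, 1, 1]

# The attacker injects four false points labeled as class 0.
poison_x = [9.0, 9.0, 9.0, 9.0]
poison_y = [0, 0, 0, 0]

clean_acc = accuracy(fit_centroids(train_x, train_y), test_x, test_y)
poisoned_acc = accuracy(
    fit_centroids(train_x + poison_x, train_y + poison_y), test_x, test_y
)
print(f"clean accuracy: {clean_acc:.2f}, poisoned accuracy: {poisoned_acc:.2f}")
```

An outlier filter applied before fitting would drop the points at 9.0, which is the intuition behind the robust-learning and data-sanitization defenses mentioned above.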

2. Evasion Attacks

Evasion attacks typically occur after a machine learning system has completed its training phase and involve the manipulation of new data inputs to deceive the model. These attacks are also called decision-time attacks, as they attempt to evade the decision made by the learned model at test time. Evasion attacks have been used to bypass spam and network intrusion detectors.

Defenses against evasion attacks include adversarial training, which strengthens models by incorporating adversarial examples into the training process, and model ensembles, which boost resilience by combining multiple models. Additional methods include detecting adversarial examples, applying input preprocessing, and creating certified defenses to ensure model robustness.

3. Model Extraction

Model extraction is an adversarial attack technique where an attacker aims to replicate a machine learning model without having direct access to its architecture or training data. By sending crafted input samples to the target model and observing its outputs, the attacker can create a surrogate model that mimics the target model’s behavior. This could lead to intellectual property theft, competitive disadvantages, or allow attackers to further exploit vulnerabilities within the extracted model. To prevent model extraction, researchers have proposed various defense mechanisms such as limiting access to model outputs, obfuscating predictions, and watermarking the models to prove ownership.
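As a deliberately small illustration, assume the target is a hypothetical one-dimensional model with a hidden decision threshold that the attacker can only query. A binary search over crafted inputs recovers the threshold closely enough to build a surrogate that mimics the target; real extraction attacks work on far larger models and typically train a surrogate on the collected input/output pairs.

```python
def make_target_model(hidden_threshold=0.3734):
    """Black-box target: callers see only the 0/1 output, not the threshold."""
    def predict(x):
        return 1 if x >= hidden_threshold else 0
    return predict

def extract_threshold(query, low=0.0, high=1.0, n_queries=20):
    """Recover the decision boundary with binary search.
    Assumes query(low) == 0 and query(high) == 1."""
    for _ in range(n_queries):
        mid = (low + high) / 2
        if query(mid) == 1:
            high = mid
        else:
            low = mid
    return (low + high) / 2

target = make_target_model()
estimate = extract_threshold(target)

def surrogate(x):
    """Attacker's copy, built only from observed outputs."""
    return 1 if x >= estimate else 0

probe = [i / 100 for i in range(101)]
agreement = sum(surrogate(x) == target(x) for x in probe) / len(probe)
print(f"estimated threshold: {estimate:.6f}, agreement: {agreement:.2f}")
```

Because the attack needs only 20 queries here, defenses such as rate limiting or returning coarser outputs aim to make each query less informative.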

4. Training Data (Backdoor Attack)

Another method is tampering with the training data by adding imperceptible patterns that create backdoors. These backdoors can then be used to control the model’s output, further compromising its integrity. Because the model behaves normally on inputs that lack the trigger pattern, backdoor attacks are particularly challenging to detect and mitigate. Defenses against backdoor attacks include data sanitization, outlier detection, and the use of techniques like fine-pruning to remove the backdoor from the model after training.

5. Inference Attack

These attacks focus on obtaining information about private data used by the model. Attackers can exploit vulnerabilities to gain insights into sensitive information, potentially breaching privacy or security. These attacks can be categorized into two types: membership inference and attribute inference attacks. Membership inference aims to determine whether a specific data point was used in the training set, potentially exposing sensitive user information. In attribute inference attacks, the attacker tries to infer the value of a specific attribute of a training data point based on the model’s output. To counteract inference attacks, various techniques have been proposed, such as differential privacy, which adds calibrated noise (for example, during training or to the model’s outputs) to protect the privacy of the training data, and secure multi-party computation (SMPC), which allows multiple parties to collaboratively compute a function while keeping their input data private.
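To make the noise-adding idea behind differential privacy concrete, here is a minimal sketch of the Laplace mechanism applied to a simple counting query rather than to a full model. The dataset, the predicate, and the epsilon value are hypothetical; the noise scale is the query's sensitivity divided by epsilon.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon, rng):
    """Release a count with Laplace noise.
    Adding or removing one record changes a count by at most 1,
    so the sensitivity of this query is 1."""
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random(0)                    # seeded only to make the demo repeatable
ages = [23, 35, 41, 29, 52, 67, 38]       # hypothetical private records
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of records with age >= 40: {noisy:.2f}")
```

A smaller epsilon means a larger noise scale, which gives stronger privacy at the cost of a less accurate answer.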

What Are Adversarial Examples?

An adversarial example is an input data point that has been subtly modified to produce incorrect predictions from a machine learning model. These modifications are often imperceptible to humans but can lead to wrong predictions or security violations in AI systems. Adversarial examples can be generated using various attack methods, including Fast Gradient Sign Method (FGSM), Jacobian-based Saliency Map Attack (JSMA), DeepFool, and Carlini & Wagner Attack (C&W).

Popular Adversarial Attack Methods

Fast Gradient Sign Method (FGSM)

The Fast Gradient Sign Method (FGSM) first made its appearance in the paper “Explaining and Harnessing Adversarial Examples”. It is a white box attack that generates adversarial examples by computing the gradient of the loss function with respect to the input image. It then adds a small perturbation in the direction of the gradient sign to create an adversarial image.

It involves three main steps: calculating the loss after forward propagation, computing the gradient with respect to the input image’s pixels, and slightly adjusting the pixels to maximize the loss. In regular machine learning, gradients help determine the direction to adjust the model’s weights to minimize loss. However, in FGSM, we manipulate the input image pixels to maximize loss and cause the model to make incorrect predictions.

Backpropagation is used to calculate the gradients from the output layer to the input image. The main difference between the equations used for regular neural network training and FGSM is that one minimizes loss while the other maximizes it. Training subtracts the gradient with respect to the weights, scaled by a learning rate, whereas FGSM adds the sign of the gradient with respect to the input pixels, scaled by a small value called epsilon.

The process can be summarized as follows: forward-propagate the image through the neural network, calculate the loss, back-propagate the gradients to the image, and nudge the pixels to maximize the loss value. By doing so, we encourage the neural network to make incorrect predictions. The degree of noticeability of the noise on the resulting image depends on the epsilon value – the larger it is, the more noticeable the noise and the higher the likelihood of the network making an incorrect prediction.
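The steps above can be sketched without any deep learning framework. The example assumes a hypothetical logistic regression "network" with hand-picked weights, so the input gradient has a closed form and no backpropagation library is needed; a real attack would obtain the gradient from the framework's automatic differentiation and would usually also clip the result to the valid pixel range.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss_and_input_gradient(w, b, x, y):
    """Binary cross-entropy loss and its gradient with respect to the input x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
    grad_x = [(p - y) * wi for wi in w]   # dL/dx for logistic regression
    return loss, grad_x

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, epsilon):
    """One FGSM step: x_adv = x + epsilon * sign(dL/dx)."""
    _, grad_x = loss_and_input_gradient(w, b, x, y)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad_x)]

# Hypothetical toy model and input (not from the article).
w, b = [2.0, -1.0, 0.5], 0.1
x, y = [0.4, 0.2, 0.6], 1

x_adv = fgsm(w, b, x, y, epsilon=0.3)
clean_loss, _ = loss_and_input_gradient(w, b, x, y)
adv_loss, _ = loss_and_input_gradient(w, b, x_adv, y)
print(f"loss on clean input: {clean_loss:.3f}, on adversarial input: {adv_loss:.3f}")
```

Every input value moves by exactly epsilon, which is why FGSM perturbations are bounded in the L_infinity norm.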

Jacobian-based Saliency Map Attack (JSMA)

Jacobian-based Saliency Map Attack (JSMA) is a fast, effective, and widely used L0 adversarial attack that fools neural network classifiers by exploiting the Jacobian matrix of the outputs with respect to the inputs.

Perturbation bounds are an essential part of understanding adversarial attacks on machine learning models. These bounds determine the size of the perturbation, which can be measured using different mathematical norms like L0, L1, L2, and L_infinity norms. L0 norm attacks are particularly concerning because they can modify only a small number of features in an input signal, making them realistic and dangerous for real-world systems. On the other hand, L_infinity attacks are the most widely studied because of their simplicity and mathematical convenience in robust optimization.
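The norms mentioned above are easy to compute for a given perturbation. The sketch below uses a hypothetical four-feature input in which only two features were changed.

```python
import math

def perturbation_norms(x, x_adv):
    """Measure the perturbation x_adv - x under the common norms."""
    delta = [a - c for a, c in zip(x_adv, x)]
    return {
        "L0": sum(1 for d in delta if d != 0),       # number of changed features
        "L1": sum(abs(d) for d in delta),            # total absolute change
        "L2": math.sqrt(sum(d * d for d in delta)),  # Euclidean size
        "Linf": max(abs(d) for d in delta),          # largest single change
    }

x = [0.0, 0.5, 1.0, 0.25]
x_adv = [0.0, 0.0, 1.0, 0.75]
norms = perturbation_norms(x, x_adv)
print(norms)
```

An L0 attack such as JSMA keeps the first number small, while an L_infinity attack such as FGSM keeps the last one small.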

Researchers have proposed new variants of JSMA, such as Weighted JSMA (WJSMA) and Taylor JSMA (TJSMA), which take into account the input’s characteristics and output probabilities to craft more powerful attacks. These improved versions have demonstrated significantly faster and more efficient results than the original targeted and non-targeted versions of JSMA, while maintaining their computational advantages.

DeepFool Attack

The DeepFool attack, developed by Moosavi-Dezfooli et al., searches for the minimal distance between the original input and the classifier’s decision boundary, which corresponds to the smallest perturbation that turns the input into an adversarial example. This approach handles the non-linearity in high-dimensional spaces by employing an iterative linear approximation technique. In comparison to FGSM, which applies a fixed-size step, and JSMA, which limits the number of modified features, DeepFool minimizes the perturbation’s magnitude.

The crux of the DeepFool algorithm lies in finding an adversarial example through the smallest feasible perturbation. It conceptualizes the classifier’s decision space as divided by linear hyperplane boundaries that separate the classes. To reach the nearest decision boundary, the algorithm shifts the image directly toward it. However, because decision boundaries are often non-linear, the algorithm applies the perturbation iteratively, continuing until the image crosses a decision boundary.
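For a linear binary classifier the nearest point on the decision boundary has a closed form, which is the building block that DeepFool repeats on a local linear approximation of a non-linear network. The sketch below shows that single step for a hypothetical classifier f(x) = w·x + b; the small overshoot factor pushes the point just past the boundary so that the predicted class actually changes.

```python
import math

def linear_score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def deepfool_linear_step(w, b, x, overshoot=0.02):
    """Smallest L2 move that crosses the hyperplane w.x + b = 0.
    The projection onto the boundary is r = -f(x) * w / ||w||^2."""
    f = linear_score(w, b, x)
    norm_sq = sum(wi * wi for wi in w)
    r = [-(f / norm_sq) * wi for wi in w]
    return [xi + (1 + overshoot) * ri for xi, ri in zip(x, r)]

# Hypothetical classifier and input.
w, b = [3.0, 4.0], -1.0
x = [2.0, 1.0]

x_adv = deepfool_linear_step(w, b, x)
distance = math.sqrt(sum((a - c) ** 2 for a, c in zip(x_adv, x)))
print("score before:", linear_score(w, b, x))
print("score after: ", round(linear_score(w, b, x_adv), 4))
print("L2 size of perturbation:", round(distance, 4))
```

For a deep network, this step would be recomputed with the current gradient as the local `w` until the predicted label changes.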

Carlini & Wagner Attack (C&W)

Carlini & Wagner Attack (C&W) is a powerful adversarial attack method that formulates an optimization problem to create adversarial examples. The goal is to cause a misclassification (targeted or untargeted) in a Deep Neural Network (DNN) and find an efficient way to solve the problem. The original optimization problem was difficult to solve due to its highly non-linear nature, but C&W managed to reformulate it by introducing an objective function, which measures “how close we are to being classified as the target class.”

In the C&W attack, the original optimization problem was reformulated using a well-known trick to move the difficult constraints into the minimization function. To address the “box constraint” issue, they used a “change of variables” method, allowing them to use first-order optimizers like Stochastic Gradient Descent (SGD) and its variants, such as the Adam optimizer.
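Both tricks can be sketched in a few lines. The example assumes inputs scaled to [0, 1], uses the tanh change of variables so that any real-valued optimization variable maps to a valid input, and uses the margin-style objective on logits described in the C&W paper. The `toy_logits` function, the constant `c`, and the inputs are hypothetical placeholders; the optimizer loop itself is omitted.

```python
import math

def to_box(w_vars):
    """Change of variables: any real w maps to a value inside [0, 1]."""
    return [0.5 * (math.tanh(v) + 1.0) for v in w_vars]

def cw_objective(logits, target, kappa=0.0):
    """How far we are from being classified as `target`.
    It reaches its floor of -kappa once the target logit leads by at least kappa."""
    best_other = max(z for i, z in enumerate(logits) if i != target)
    return max(best_other - logits[target], -kappa)

def cw_loss(w_vars, x, logits_fn, target, c, kappa=0.0):
    """Constraint moved into the objective: squared L2 distance + c * f(x_adv)."""
    x_adv = to_box(w_vars)
    distance = sum((a - o) ** 2 for a, o in zip(x_adv, x))
    return distance + c * cw_objective(logits_fn(x_adv), target, kappa)

def toy_logits(x):
    """Hypothetical two-class model whose logits are just the two input features."""
    return [x[0], x[1]]

x = [0.9, 0.1]
loss = cw_loss([0.0, 0.0], x, toy_logits, target=1, c=1.0)
print("box-constrained values:", to_box([-50.0, 0.0, 50.0]))
print("loss at w = [0, 0]:", round(loss, 4))
```

Because `to_box` is smooth and unconstrained in `w_vars`, a first-order optimizer such as Adam can minimize `cw_loss` directly without any projection step.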

The final form of the optimization problem in the C&W attack is solved using the Adam optimizer, which is computationally efficient and less memory-intensive than classical second-order methods like L-BFGS. The attack can generate stronger, high-quality adversarial examples, but the cost to create them is also higher compared to other methods, such as the Fast Gradient Sign Method (FGSM).

Generative Adversarial Networks (GAN)

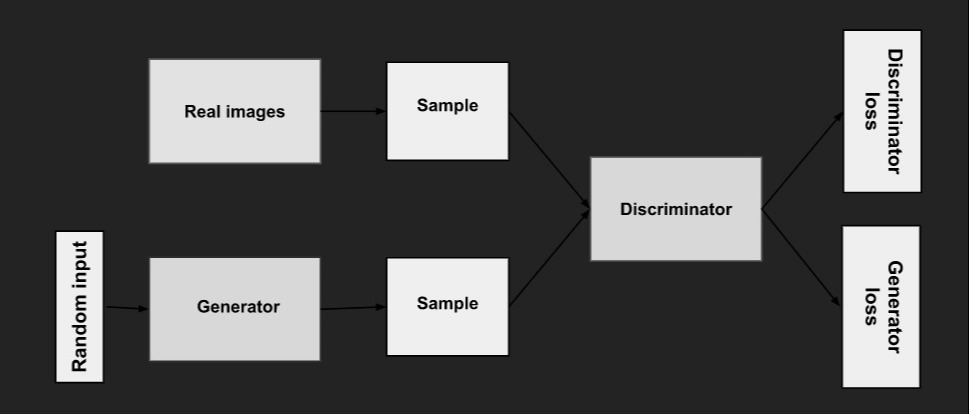

GANs are a type of machine learning system that consists of two neural networks, a generator and a discriminator, competing against each other in a zero-sum game. While GANs are not an adversarial attack method in and of themselves, they can be used to generate adversarial examples capable of fooling deep neural networks.

The generator network creates fake samples, while the discriminator network attempts to distinguish between real and fake samples. As the generator improves, the generated samples become more challenging for the discriminator to distinguish, potentially leading to adversarial examples. At the onset of training, the generator produces data that is clearly fake, and the discriminator swiftly learns to identify it as inauthentic.

Source: https://developers.google.com/machine-learning/gan/gan_structure

In the diagram, the real-world images and the random input (which the generator turns into fake samples) are the two sources of data that reach the discriminator. To train the generator, random noise is used as input, which the generator transforms into meaningful output. The generator loss penalizes it for producing samples that the discriminator classifies as fake. Backpropagation adjusts the generator and discriminator weights, typically in alternating steps, to minimize their respective losses. This iterative training process enables the generator to produce increasingly convincing data, making it harder for the discriminator to differentiate between real and generated samples.
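The two losses can be written down directly from the discriminator's outputs. The sketch below assumes the discriminator returns a probability that a sample is real, and it uses the common non-saturating form of the generator loss; the probability values are made up to show how the losses behave, and no actual networks are trained.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Cross-entropy: real samples should score near 1, generated ones near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Penalizes the generator when the discriminator calls its samples fake."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Early in training: the discriminator easily spots generated samples.
early = generator_loss([0.1, 0.1])
# Later: generated samples are scored as likely real.
late = generator_loss([0.9, 0.9])
print(f"generator loss early: {early:.3f}, late: {late:.3f}")
print(f"confident discriminator loss: {discriminator_loss([0.9], [0.1]):.3f}")
print(f"confused discriminator loss:  {discriminator_loss([0.5], [0.5]):.3f}")
```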

Zeroth-order optimization attack (ZOO)

The Zeroth Order Optimization (ZOO) attack is a black-box attack technique that exploits zeroth order optimization to directly estimate the gradients of the targeted Deep Neural Network (DNN) for generating adversarial examples. Unlike traditional black-box attacks that rely on substitute models, the ZOO attack does not require training any substitute model and achieves comparable performance to state-of-the-art white-box attacks, such as Carlini and Wagner’s attack.

In a black-box setting, an attacker only has access to the input (images) and output (confidence scores) of a targeted DNN. The ZOO attack leverages zeroth order stochastic coordinate descent along with dimension reduction, hierarchical attack, and importance sampling techniques to efficiently attack black-box models. This approach removes the need for training substitute models and avoids the loss in attack transferability.
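The core of zeroth order optimization is estimating a gradient from function values alone. The sketch below uses a symmetric difference quotient per coordinate; `black_box_loss` is a hypothetical stand-in for a loss computed from a model's confidence scores, chosen so the estimate can be checked against the known gradient. The full ZOO attack updates only a few randomly chosen coordinates per step rather than all of them.

```python
def estimate_gradient(loss_fn, x, h=1e-4):
    """Estimate dL/dx_i as (L(x + h*e_i) - L(x - h*e_i)) / (2h),
    using only queries to loss_fn."""
    grad = []
    for i in range(len(x)):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[i] += h
        x_minus[i] -= h
        grad.append((loss_fn(x_plus) - loss_fn(x_minus)) / (2 * h))
    return grad

def black_box_loss(x):
    """Hypothetical loss; the attacker can evaluate it but not differentiate it."""
    return (x[0] - 1.0) ** 2 + 3.0 * x[0] * x[1]

x = [0.5, 2.0]
estimated = estimate_gradient(black_box_loss, x)
analytic = [2.0 * (x[0] - 1.0) + 3.0 * x[1], 3.0 * x[0]]  # known here only for checking
print("estimated gradient:", [round(g, 6) for g in estimated])
print("analytic gradient: ", analytic)
```

Each coordinate costs two queries, which is why ZOO relies on dimension reduction and importance sampling to keep the query count manageable on images.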

Defense Against Adversarial Attack

Adversarial training has proven to be an effective defense strategy. This approach involves generating adversarial examples during the training process. The intuition behind this is that if the model encounters adversarial examples while training, it will perform better when predicting similar adversarial examples later on.

The loss function used in adversarial training is a modified version that combines the typical loss function for clean examples and a separate loss function for adversarial examples.

During the training process, for every batch of ‘m’ clean images, ‘k’ adversarial images are generated using the current state of the network. Both clean and adversarial examples are then forward-propagated through the network, and the loss is calculated using the modified formula.
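The article does not spell out the modified formula, so the following is only one plausible weighting, assumed for illustration: a weighted average of the per-example losses in which each adversarial example counts `adv_weight` times as much as a clean one. The loss values are made up; in practice they would come from forward passes on the clean batch and on adversarial examples crafted from the current network.

```python
def adversarial_training_loss(clean_losses, adv_losses, adv_weight=0.5):
    """Weighted average over m clean and k adversarial per-example losses.
    adv_weight is a hypothetical knob for the relative weight of adversarial examples."""
    m, k = len(clean_losses), len(adv_losses)
    total = sum(clean_losses) + adv_weight * sum(adv_losses)
    return total / (m + adv_weight * k)

clean_losses = [0.2, 0.4]   # m = 2 clean examples
adv_losses = [1.0]          # k = 1 adversarial example
combined = adversarial_training_loss(clean_losses, adv_losses, adv_weight=0.5)
print(f"combined loss: {combined:.2f}")
```

Setting `adv_weight` to 0 recovers ordinary training on clean data, while larger values push the model to prioritize robustness over clean accuracy.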

Other defense strategies include gradient masking, defensive distillation, ensemble methods, feature squeezing, and autoencoders. Applying game theory for security can provide valuable insights into adversarial behavior, helping design optimal defense strategies for AI systems.

Conclusion

As we increasingly rely on AI to solve complex problems and make decisions for us, it is important to recognize the values of openness and robustness of Machine Learning models. We can create a safe and secure technological landscape using advanced defense methods that are equipped to tackle adversarial attacks, ensuring that we can trust AI to work for us, rather than against us.

References

Siva Kumar, Ram Shankar, et al. “Adversarial Machine Learning – Industry Perspectives.” SSRN Electronic Journal, 2020, https://doi.org/10.2139/ssrn.3532474.

Szegedy, Christian, et al. “Going Deeper with Convolutions.” arXiv.Org, 17 Sept. 2014, https://arxiv.org/abs/1409.4842.

“Overview of GAN Structure.” Google Developers, https://developers.google.com/machine-learning/gan/gan_structure.

“DeepFool: A Simple and Accurate Method to Fool Deep Neural Networks.” IEEE Xplore, https://ieeexplore.ieee.org/document/7780651.

“Explaining and Harnessing Adversarial Examples.” arXiv.Org, 20 Dec. 2014, https://arxiv.org/abs/1412.6572.

Wiyatno, Rey, and Anqi Xu. “Maximal Jacobian-Based Saliency Map Attack.” arXiv.Org, 23 Aug. 2018, https://arxiv.org/abs/1808.07945.

Chen, Pin-Yu, et al. “ZOO.” Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, ACM, 2017, https://arxiv.org/pdf/1708.03999.pdf. Accessed 25 Mar. 2023.

Dasgupta, Prithviraj, and Joseph Collins. “A Survey of Game Theoretic Approaches for Adversarial Machine Learning in Cyber Security Tasks.” AI Magazine, vol. 40, no. 2, June 2019, pp. 31–43, https://doi.org/10.1609/aimag.v40i2.2847.

Carlini, Nicholas, and David Wagner. “Towards Evaluating the Robustness of Neural Networks.” arXiv.Org, 16 Aug. 2016, https://arxiv.org/abs/1608.04644.