What Is AdaGrad and How Does It Relate to Machine Learning?

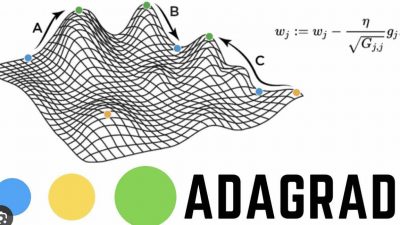

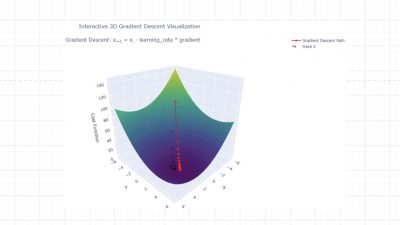

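AdaGrad (Adaptive Gradient) is an optimization algorithm used in machine learning and deep learning. Instead of applying one global learning rate to every parameter, it adapts the step size for each parameter individually, dividing that parameter's gradient by the square root of the sum of its past squared gradients.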
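To make the per-parameter adaptation concrete, here is a minimal NumPy sketch of the standard AdaGrad update. Each parameter accumulates the sum of its squared gradients, and its step is the gradient divided by the square root of that sum (plus a small `eps` for numerical safety). The function name `adagrad_minimize`, the test function, and the values of `lr`, `eps`, and `steps` are illustrative assumptions, not taken from any particular library.

```python
import numpy as np


def adagrad_minimize(grad_fn, theta0, lr=0.5, eps=1e-8, steps=100):
    """Minimize a function with AdaGrad, given a function that returns its gradient.

    grad_fn: callable mapping a parameter vector to its gradient (supplied by the caller).
    lr, eps, steps: illustrative defaults, not tuned values.
    """
    theta = np.asarray(theta0, dtype=float).copy()
    accum = np.zeros_like(theta)  # running sum of squared gradients, one entry per parameter
    for _ in range(steps):
        g = grad_fn(theta)
        accum += g * g  # G_t = G_{t-1} + g_t^2 (element-wise)
        # Each parameter gets its own effective step size: lr / (sqrt(G_t) + eps)
        theta -= lr * g / (np.sqrt(accum) + eps)
    return theta


# Hypothetical example: a badly scaled quadratic f(x, y) = 50 * x**2 + 0.5 * y**2,
# whose gradient is (100 * x, 1 * y).
def grad_quadratic(theta):
    return np.array([100.0 * theta[0], 1.0 * theta[1]])


result = adagrad_minimize(grad_quadratic, [1.0, 1.0], lr=0.5, steps=500)
print(result)
```

On this quadratic (curvature 100 along x, 1 along y), both coordinates shrink toward zero at essentially the same rate, because dividing by the root of the accumulated squared gradients cancels each coordinate's gradient scale. Plain gradient descent with one shared learning rate would need `lr < 0.02` to stay stable along x, which would make progress along y very slow.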


Graduate of IIT Kharagpur with a background in software development, proficient in Machine Learning and Deep Learning techniques. Over the past five years, I have contributed to more than 50 projects across various software engineering domains, with a strong emphasis on AI/ML. Additionally, I have a keen interest in Natural Language Processing.


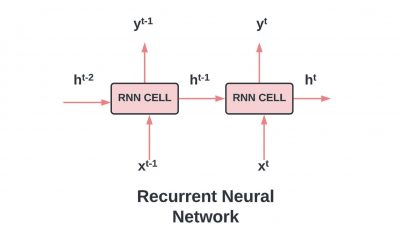

Why it matters: Recurrent Neural Networks (RNNs) are artificial neural networks designed to process sequence data by maintaining an internal state.

Why it matters: In this article let’s learn about Keras loss function, how it impacts deep learning architecture and its applications in real life scenarios.

Why it matters: Batch normalization standardizes inputs to network layers, enabling faster training, better model performance, and inherent regularization.

Why it matters: Neural architecture search helps in automating the process of creating neural network structures, helping us create high-performing models.

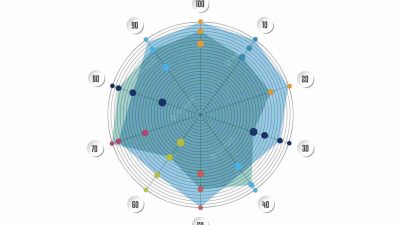

Why it matters: Radial bias function networks have become popular in applications such as pattern recognition, approximation, and time series prediction.

Why it matters: Let us dive into the world of Bayesian Optimization, exploring its practical uses, especially when it comes to fine-tuning parameters in ML.

Why it matters: Univariate linear regression, specifically, focuses on predicting the dependent variable using a single independent variable.

Why it matters: What is Adversarial Machine Learning? ML/DL models are vulnerable to security threats arising from the adversarial use of AI.