Introduction

Artificial Intelligence (AI) has paved the way for numerous advancements in various fields. One of the core concepts within AI is machine learning, which comprises supervised learning, unsupervised learning, and reinforcement learning. Each learning type has its own set of algorithms.

Among these algorithms, linear regression, a supervised learning technique, is one of the most fundamental. It models the linear relationship between a dependent variable and one or more independent variables. Univariate linear regression is the simplest case: it predicts the dependent variable from a single independent variable. In this article, we discuss the concept of univariate linear regression and explore how it is used in AI.


What is Linear Regression?

Linear regression is a statistical technique that helps us uncover the relationships between variables. It enables us to create a model that explains how one variable, known as the dependent or response variable, changes in response to the variations in another variable, referred to as the independent or predictor variable. By understanding these relationships, we can make predictions and better comprehend the dynamics at play within a particular dataset.

The main purpose of a linear model is to explain changes in an outcome variable as the sum of a chosen model and an associated prediction error:

Outcome = Model + Error

What is Univariate Linear Regression?

Univariate linear regression is a specific type of linear regression analysis that deals with a single dependent variable and a single independent variable. In this case, we aim to establish a simple linear relationship between the two variables, which can be represented as a straight line. By using this straightforward model, we can analyze the effect of the independent variable on the dependent variable and make predictions accordingly.

Univariate linear regression is a fundamental concept in statistics and serves as a building block for understanding more complex multivariate models.

Expanding on the concept of univariate linear regression, we arrive at multivariate linear regression (in statistics this case is more precisely called multiple linear regression, since there is still only one response variable). Multivariate linear regression is an extension of the linear regression model in which multiple independent or predictor variables affect a single dependent or response variable. This approach allows us to capture the combined impact of these predictor variables on the dependent variable, offering a more comprehensive understanding of the relationships.

Caption: A scatter plot displaying randomly generated data points with an overlaid linear regression line in black. The X-axis represents predictor variable values, and the Y-axis represents response variable values. A red dotted line denotes the intercept, while a green line segment highlights the slope of the regression line. Purple dotted lines connect the data points to the regression line, illustrating the residuals between the actual values and predicted values.

For univariate linear regression, we have the equation:

Y = θ₀ + θ₁X₁ + ε

Here, Y is the dependent variable, X₁ is the single independent variable, θ₀ is the intercept, θ₁ is the coefficient of the independent variable, and ε is the error term.

In contrast, the equation for multivariate linear regression is:

Y = θ₀ + θ₁X₁ + θ₂X₂ + … + θₙXₙ + ε

In this equation, Y is still the dependent variable, but we now have multiple independent variables (X₁, X₂, …, Xₙ), each with its corresponding coefficient (θ₁, θ₂, …, θₙ). The error term, ε, remains in the equation as well.
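
As a minimal sketch of the two equations above, the model part of each (everything except the error term ε) can be computed in Python as follows. The function names `predict_univariate` and `predict_multivariate` and the coefficient values are hypothetical, chosen only for illustration.

```python
def predict_univariate(theta0, theta1, x1):
    """Return theta0 + theta1 * x1 (the model part, without the error term)."""
    return theta0 + theta1 * x1


def predict_multivariate(theta0, thetas, xs):
    """Return theta0 + theta1*x1 + ... + thetan*xn for one observation."""
    if len(thetas) != len(xs):
        raise ValueError("need exactly one coefficient per independent variable")
    return theta0 + sum(t * x for t, x in zip(thetas, xs))


# Illustrative coefficients only, not fitted to any data.
single = predict_univariate(1.0, 2.0, 3.0)                   # 1 + 2*3
multi = predict_multivariate(1.0, [2.0, 0.5], [3.0, 4.0])    # 1 + 2*3 + 0.5*4
print(single, multi)
```

With a single independent variable, `predict_multivariate` reduces to `predict_univariate`, which mirrors how the second equation extends the first.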

By comparing these two equations, we can see that multivariate linear regression is a natural extension of univariate linear regression, allowing us to consider multiple independent variables simultaneously. This more advanced technique enables data scientists to analyze and predict complex relationships in real-world datasets with greater accuracy.


Basic Concepts

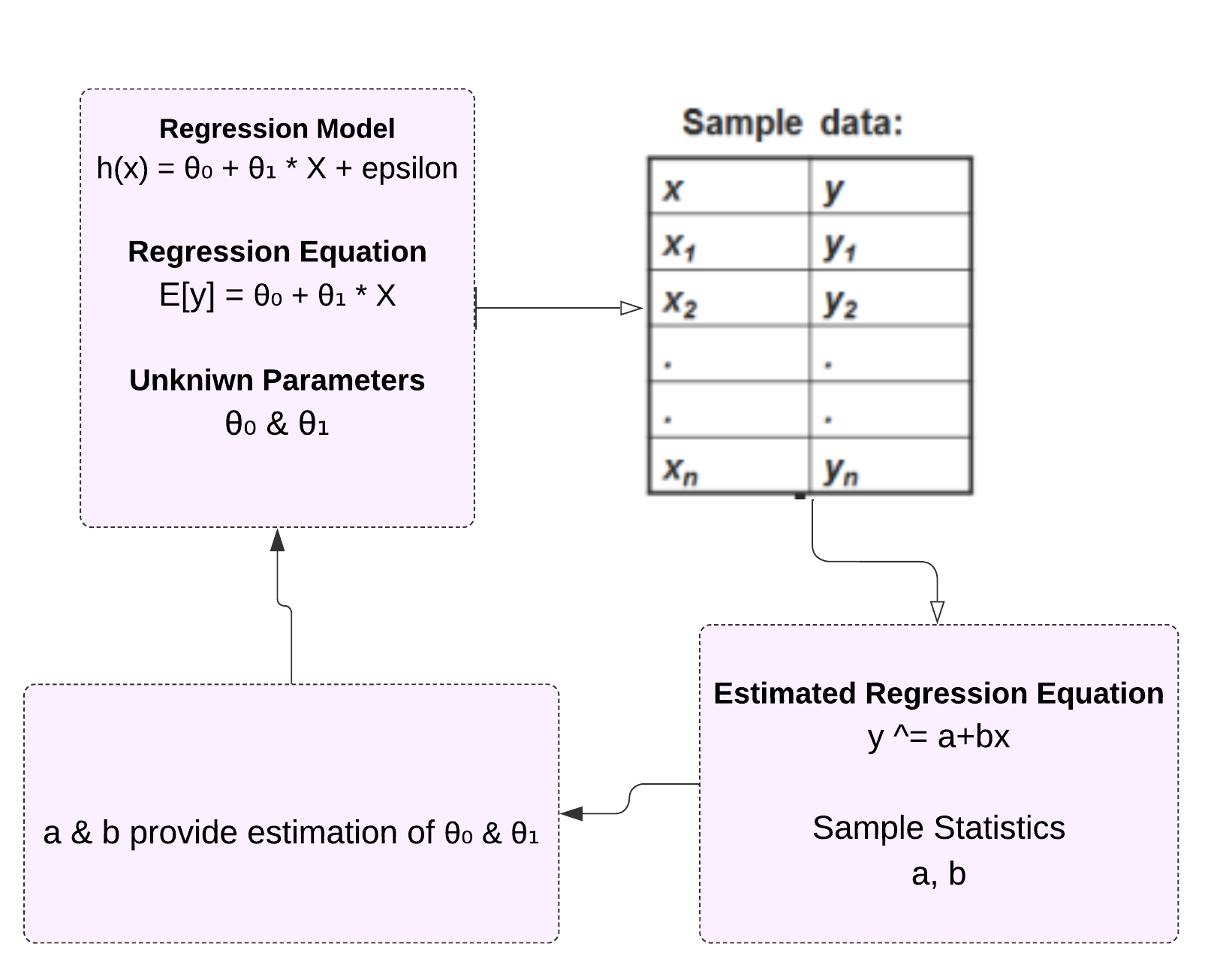

Linear regression, as a supervised learning algorithm, is instrumental in enabling machines to make predictions based on input data. To better appreciate the mathematics underlying the algorithm, let’s learn the variables and notations used in the context of training samples:

- m: total count of training examples

- x: input variable or attribute

- y: output variable or target

- (x, y): a pair denoting a single training sample with input and output

- (xᵢ, yᵢ): the i-th training sample in the dataset

Let’s consider a dataset of patients’ age (in years) and their systolic blood pressure (in mm Hg):

| Age (years) (x) | Systolic Blood Pressure (mm Hg) (y) |
| --- | --- |
| 25 | 120 |
| 30 | 127 |
| 33 | 132 |
| 42 | 145 |

In this univariate analysis, we use a linear equation to model the relationship between the predictor variable x (age) and the target variable y (systolic blood pressure). By estimating the values of the coefficients θ₀ and θ₁, we can predict the blood pressure of an individual based on their age.
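
To connect the notation to the table, here is one possible way to hold the dataset in Python. The variable names (`ages`, `pressures`, `samples`) are illustrative choices, not part of any library.

```python
# The four (age, systolic blood pressure) pairs from the table above.
ages = [25, 30, 33, 42]               # x: input variable
pressures = [120, 127, 132, 145]      # y: output variable

m = len(ages)                         # total count of training examples
samples = list(zip(ages, pressures))  # each (x, y) pair is one training sample

# If the i-th sample is counted from 1, it sits at index i - 1 in a Python list.
i = 2
x_i, y_i = samples[i - 1]
print(m, (x_i, y_i))
```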

Initialize Parameters

Initialize the parameters θ₀ and θ₁ with random values. These values will be updated during the optimization process.

θ₀ = random_value_1

θ₁ = random_value_2
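
A minimal sketch of this initialization in Python is shown below. The range [-1, 1] and the fixed seed are assumptions made here for repeatability. The text only asks for random starting values.

```python
import random

random.seed(0)  # fixed seed (an assumption) so that repeated runs start identically

# Draw both starting values from a small symmetric range around zero.
theta0 = random.uniform(-1.0, 1.0)
theta1 = random.uniform(-1.0, 1.0)
print(theta0, theta1)
```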

Hypothesis Function

The hypothesis function represents the linear relationship between the input and output variables:

h(x) = θ₀ + θ₁x
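
In code, the hypothesis is a one-line function. The sketch below uses made-up parameter values (80 and 1.5) purely to show a call. They are not fitted to the blood pressure data.

```python
def h(x, theta0, theta1):
    """Hypothesis: predicted output for input x under parameters theta0 and theta1."""
    return theta0 + theta1 * x


# Illustrative parameter values, not fitted ones.
theta0, theta1 = 80.0, 1.5
prediction = h(30, theta0, theta1)   # 80 + 1.5 * 30
print(prediction)                    # 125.0
```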

The Cost Function

The squared error cost function, or loss function, is a crucial element in linear regression. It measures how far the predicted values are from the actual values of the dependent variable by averaging the squared differences over the training examples. The aim is to minimize this quantity, resulting in a more accurate linear model. The squared error function can be represented as:

J(θ₀, θ₁) = (1 / (2m)) * Σ(h(xᵢ) – yᵢ)², for i = 1 to m

Here, m denotes the number of training examples, and the summation represents the sum of squared errors for all examples.
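
As a worked example, the sketch below evaluates the cost on the age and blood pressure data for one arbitrary pair of trial parameters (θ₀ = 0, θ₁ = 4). The trial values are assumptions chosen so the arithmetic is easy to check by hand.

```python
def cost(theta0, theta1, xs, ys):
    """Squared error cost J = (1 / (2m)) * sum of (h(x_i) - y_i)^2."""
    m = len(xs)
    total = 0.0
    for x, y in zip(xs, ys):
        error = (theta0 + theta1 * x) - y   # h(x_i) - y_i
        total += error ** 2
    return total / (2 * m)


ages = [25, 30, 33, 42]
pressures = [120, 127, 132, 145]

# Errors: -20, -7, 0, 23 -> squares: 400, 49, 0, 529 -> sum 978 -> J = 978 / 8.
j = cost(0.0, 4.0, ages, pressures)
print(j)   # 122.25
```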

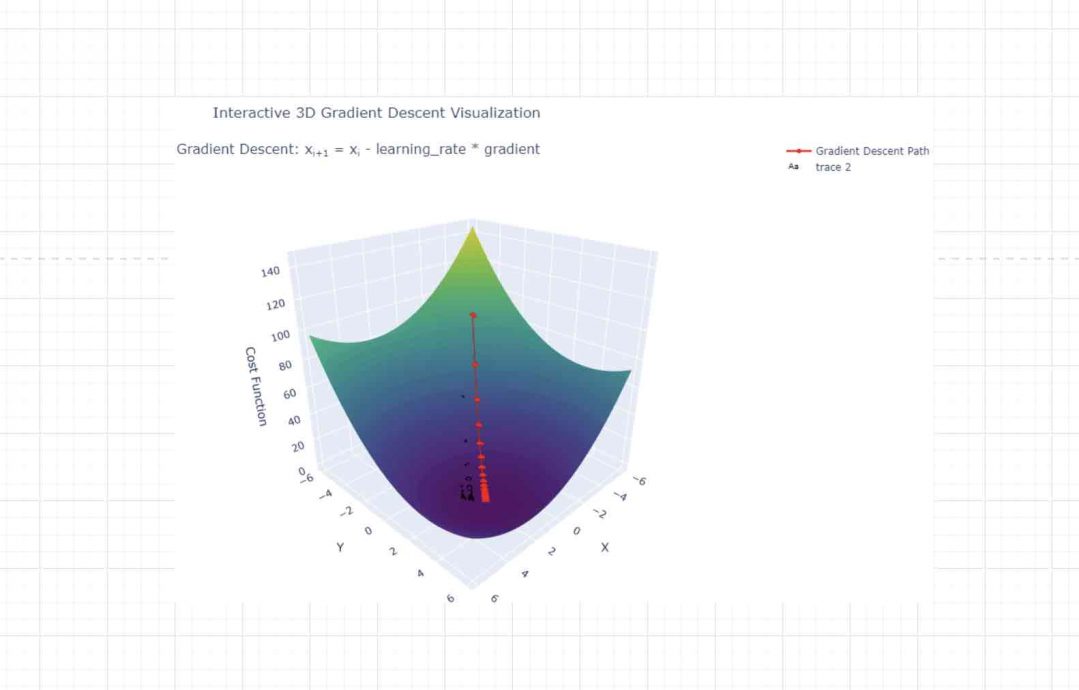

Gradient Descent

Gradient descent is the learning algorithm that minimizes the cost function. By iteratively updating the parameters θ₀ and θ₁, the algorithm converges to the cost function minimum. The learning rate, denoted by α, controls the step size during the gradient descent process. The core idea is to update the parameters as follows:

θ₀ := θ₀ – α * ∂J(θ₀, θ₁) / ∂θ₀

θ₁ := θ₁ – α * ∂J(θ₀, θ₁) / ∂θ₁

The gradient descent function is essential in determining the optimal parameters for the linear regression function.

One of the main reasons for using gradient descent in linear regression is its computational efficiency. When the dataset is large or has many features, gradient descent can reach a solution faster than solving for the parameters in closed form, which makes it an appealing option.
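
A single update step can be sketched as follows. For the squared error cost, differentiating J gives ∂J/∂θ₀ = (1/m) Σ(h(xᵢ) – yᵢ) and ∂J/∂θ₁ = (1/m) Σ((h(xᵢ) – yᵢ) xᵢ). The function name `gradient_step`, the starting point, and the learning rate are illustrative assumptions.

```python
def gradient_step(theta0, theta1, xs, ys, alpha):
    """Apply one simultaneous gradient descent update to theta0 and theta1."""
    m = len(xs)
    errors = [(theta0 + theta1 * x) - y for x, y in zip(xs, ys)]
    grad0 = sum(errors) / m                               # dJ/dtheta0
    grad1 = sum(e * x for e, x in zip(errors, xs)) / m    # dJ/dtheta1
    # Both gradients are computed before either parameter changes.
    return theta0 - alpha * grad0, theta1 - alpha * grad1


ages = [25, 30, 33, 42]
pressures = [120, 127, 132, 145]

# Start from theta0 = 0, theta1 = 4 with a small, hand-picked learning rate.
# Here grad0 = -4 / 4 = -1 and grad1 = 256 / 4 = 64.
new_theta0, new_theta1 = gradient_step(0.0, 4.0, ages, pressures, alpha=0.0001)
print(new_theta0, new_theta1)
```

Returning both new values from one function keeps the update simultaneous: θ₁ is never updated using an already-changed θ₀.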

Model Training

During the training phase, the linear regression algorithm utilizes the given training samples to adjust the parameters θ₀ and θ₁ with the help of the gradient descent method. The aim is to minimize the cost function, J(θ₀, θ₁), by iteratively updating the parameters until the consecutive cost function values show only a minor difference or a predetermined number of iterations have been completed.

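Gradient descent updates θ₀ and θ₁ by subtracting α times the partial derivatives of the cost function. For the squared error cost function, these partial derivatives are:

∂J(θ₀, θ₁) / ∂θ₀ = (1 / m) * Σ(h(xᵢ) – yᵢ), for i = 1 to m

∂J(θ₀, θ₁) / ∂θ₁ = (1 / m) * Σ((h(xᵢ) – yᵢ) * xᵢ), for i = 1 to m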

The learning rate, α, sets the step size for each update: if it is too large, the updates can overshoot the minimum and diverge, and if it is too small, convergence is slow. The partial derivatives of the cost function, ∂J(θ₀, θ₁) / ∂θ₀ and ∂J(θ₀, θ₁) / ∂θ₁, together form the gradient, which points in the direction of the steepest increase in the cost function. By subtracting the gradient multiplied by the learning rate, we move the parameters in the direction of the steepest decrease, which reduces the error in each iteration as long as α is suitably small.

Caption: A dynamic 3D representation of the gradient descent process, illustrating the cost function as a surface plot with various colors signifying different cost values. The gradient descent trajectory is highlighted by red markers interconnected by red lines, and every step is labeled with a corresponding step number. The x, y, and z axes denote the input variables and the cost function, respectively.

As the parameters are updated, the cost function approaches its global minimum, yielding the optimal values for θ₀ and θ₁. The iteration stops when consecutive values of the cost function differ by only a small amount, indicating that the algorithm has converged, or when a predetermined number of iterations has been reached.
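
The training loop described in this section might be sketched as below. Several details are assumptions made for this illustration rather than part of the method as stated: the zero starting point, the learning rate of 0.1, the tolerance of 1e-9, and the standardization of age before training. Standardization is included because on raw ages a much smaller learning rate and far more iterations would be needed.

```python
def cost(theta0, theta1, xs, ys):
    """Squared error cost J(theta0, theta1)."""
    m = len(xs)
    return sum(((theta0 + theta1 * x) - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)


def train(xs, ys, alpha=0.1, tol=1e-9, max_iters=10_000):
    """Gradient descent until the cost change drops below tol or max_iters is hit."""
    m = len(xs)
    theta0, theta1 = 0.0, 0.0   # fixed start for repeatability (could be random)
    history = [cost(theta0, theta1, xs, ys)]
    for _ in range(max_iters):
        errors = [(theta0 + theta1 * x) - y for x, y in zip(xs, ys)]
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m
        theta0, theta1 = theta0 - alpha * grad0, theta1 - alpha * grad1
        history.append(cost(theta0, theta1, xs, ys))
        if abs(history[-2] - history[-1]) < tol:   # consecutive costs barely differ
            break
    return theta0, theta1, history


ages = [25, 30, 33, 42]
pressures = [120, 127, 132, 145]

# Assumption: standardize age (zero mean, unit variance) before training.
mean_age = sum(ages) / len(ages)
std_age = (sum((a - mean_age) ** 2 for a in ages) / len(ages)) ** 0.5
scaled_ages = [(a - mean_age) / std_age for a in ages]

theta0, theta1, history = train(scaled_ages, pressures)

# Convert the fitted parameters back to the original age scale.
slope = theta1 / std_age
intercept = theta0 - slope * mean_age
print(slope, intercept, len(history) - 1)
```

The `history` list records the cost after every update, so plotting it is a quick way to check that the cost falls steadily and then flattens out.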

Validation

Evaluating the performance of a linear regression model is crucial to ensure its effectiveness and generalizability to new, unseen data points. A common approach to achieve this is by partitioning the dataset into separate training and testing subsets. The model is initially trained on the training subset, after which its performance is assessed on the testing subset by comparing the predicted values to the actual values. This assessment helps ascertain the accuracy and reliability of the linear regression model, as well as its ability to make predictions on real-world data.

One widely used metric for this evaluation is the Mean Squared Error (MSE), which measures the average squared difference between the predicted and actual values. The formula for MSE is as follows:

MSE = (1 / n) * Σ[(predicted_value – actual_value)²]

In this formula, n represents the number of testing examples. A smaller MSE value signifies a better model fit and higher predictive accuracy.
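
The sketch below shows the train/test procedure on the four-row blood pressure table. The split (first three rows for training, last row for testing) is arbitrary, and a closed-form least squares fit stands in for the training step to keep the example short. Both are assumptions for illustration, as are the function names `fit_line` and `mse`.

```python
def fit_line(xs, ys):
    """Least squares fit of y = theta0 + theta1 * x (stand-in for the training step)."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    theta1 = sxy / sxx
    return y_bar - theta1 * x_bar, theta1


def mse(predicted, actual):
    """Mean squared error: average of (predicted - actual)^2 over n examples."""
    n = len(actual)
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / n


ages = [25, 30, 33, 42]
pressures = [120, 127, 132, 145]

# Arbitrary split for illustration: first three rows train, last row tests.
train_x, test_x = ages[:3], ages[3:]
train_y, test_y = pressures[:3], pressures[3:]

theta0, theta1 = fit_line(train_x, train_y)
predictions = [theta0 + theta1 * x for x in test_x]
test_mse = mse(predictions, test_y)
print(test_mse)
```

With only one held-out row, the MSE here is just a single squared error. A realistic evaluation would use many more test examples.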

While assessing the model, it is essential to consider both underfitting and overfitting. Underfitting occurs when the model is too simple and does not capture the underlying patterns in the data, leading to high bias and low variance. Overfitting, on the other hand, happens when the model is too complex and adapts too closely to the training data, resulting in high variance and low bias. A good model sits between these two extremes, capturing the underlying patterns in the data without being overly sensitive to noise or random fluctuations.

The Result

Upon completing the training phase, the linear regression model produces the best-fitting line that encapsulates the relationship between the input and output variables.

The essence of univariate linear regression is its simplicity, enabling researchers and practitioners to establish and understand the linear relationship between a single independent variable and a dependent variable. By mastering this fundamental concept, one can easily build on this knowledge and delve into more complex multivariate models, which encompass multiple independent variables. Moreover, understanding the logic behind univariate linear regression, including its underlying mathematics and the gradient descent optimization technique, provides a solid foundation for tackling more advanced machine learning and artificial intelligence concepts in the future.

OLS

Ordinary Least Squares (OLS) is a popular method for estimating parameters in univariate linear regression models. It chooses the model’s coefficients so as to minimize the sum of squared differences between the observed data points and the values predicted by the model.

In simple linear regression, the relationship between the dependent variable Y and predictor variable X can be expressed as:

Y = θ₀ + θ₁X + ε

Here, Y is a function of X with an error term ε, and θ₀ and θ₁ are unknown coefficients.

Using the least squares estimation, we aim to find the best estimates for θ₀ and θ₁ by minimizing the error sum of squares (SSE):

SSE = Σ(yᵢ – ŷᵢ)² = Σ(yᵢ – (θ₀ + θ₁xᵢ))², for i = 1 to n

The estimates can be computed as:

θ₁ = Σ((xᵢ – x̄)(yᵢ – ȳ)) / Σ(xᵢ – x̄)²

and

θ₀ = ȳ – θ₁x̄ = (Σyᵢ / n) – θ₁(Σxᵢ / n)
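
Applying these two formulas to the age and blood pressure table gives a concrete fit. The sketch below is one straightforward translation into Python. The function name `ols_fit` is a hypothetical choice.

```python
def ols_fit(xs, ys):
    """Closed-form least squares estimates (theta0, theta1) for one predictor."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical, so the slope is undefined")
    theta1 = sxy / sxx
    theta0 = y_bar - theta1 * x_bar
    return theta0, theta1


ages = [25, 30, 33, 42]
pressures = [120, 127, 132, 145]

# By hand: x_bar = 32.5, y_bar = 131, sxy = 226, sxx = 153.
theta0, theta1 = ols_fit(ages, pressures)
sse = sum((y - (theta0 + theta1 * x)) ** 2 for x, y in zip(ages, pressures))
print(round(theta0, 3), round(theta1, 3), round(sse, 3))
```

For this data the slope is 226 / 153, roughly 1.477 mm Hg per year of age, and the intercept is roughly 82.99 mm Hg.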

The slope of a line represents the change along the vertical axis (y-axis) per unit change along the horizontal axis (x-axis). For any two points (x₁, y₁) and (x₂, y₂) on the line, it is expressed as:

slope = (y₂ – y₁) / (x₂ – x₁)

Apart from Ordinary Least Squares (OLS) and Gradient Descent, there are several other methods to solve univariate linear regression problems. Some of these methods are:

- Weighted Least Squares (WLS): WLS is an OLS variant that accounts for heteroscedasticity, meaning the error variance varies across observations. WLS allocates weight to each observation based on the error variance’s inverse, leading to more precise model parameter estimations.

- Ridge Regression (L2 regularization): Ridge regression is a regularization technique that aims to prevent overfitting by adding a penalty term to the cost function. The penalty term is proportional to the coefficients’ squared magnitude, promoting smaller coefficients and smoother models.

- Lasso Regression (L1 regularization): Lasso regression is another regularization technique that introduces an L1 penalty term to the cost function. This term is proportional to the coefficients’ absolute value, encouraging models with fewer non-zero coefficients.

- Elastic Net Regression: This approach combines L1 and L2 regularization by integrating both penalty terms into the cost function. It balances the advantages of Ridge and Lasso regression, making it applicable to a broader range of challenges.

These methods can be chosen based on the specific requirements and characteristics of the problem, such as the presence of multicollinearity, overfitting, heteroscedasticity, or the need for sparse models. Each method has its strengths and limitations, and selecting the right one depends on the context and the dataset.
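
To make the effect of a penalty term concrete, here is a small sketch of ridge regression in the univariate case. It assumes the common convention that only the slope θ₁ is penalized and the intercept is left free, so the cost is SSE + λθ₁². Under that assumption the slope has the closed form Σ((xᵢ – x̄)(yᵢ – ȳ)) / (Σ(xᵢ – x̄)² + λ). The function name `ridge_fit` and the value λ = 10 are illustrative.

```python
def ridge_fit(xs, ys, lam):
    """Univariate ridge fit minimizing SSE + lam * theta1^2 (intercept unpenalized)."""
    if lam < 0:
        raise ValueError("the penalty strength lam must be non-negative")
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    theta1 = sxy / (sxx + lam)       # the penalty shrinks the slope toward zero
    theta0 = y_bar - theta1 * x_bar
    return theta0, theta1


ages = [25, 30, 33, 42]
pressures = [120, 127, 132, 145]

_, ols_slope = ridge_fit(ages, pressures, lam=0.0)     # lam = 0 recovers OLS
_, ridge_slope = ridge_fit(ages, pressures, lam=10.0)  # 226 / (153 + 10)
print(ols_slope, ridge_slope)
```

Setting λ to zero recovers the OLS estimate, while larger values pull the slope closer to zero.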


Conclusion

Univariate linear regression is a fundamental machine learning algorithm that models the linear relationship between a single input variable and an output variable. It forms the foundation for more advanced machine learning tasks, including deep learning models and reinforcement learning. The core concepts discussed in this article, such as the hypothesis function, cost function, and gradient descent, provide an understanding of the linear regression algorithm’s inner workings.

In the context of AI, univariate linear regression has numerous applications, from predicting numerical response variables to analyzing the relationship between an explanatory variable and a response variable. By leveraging this supervised learning technique, AI systems can make informed decisions, optimize processes, and offer valuable insights to various industries.

As AI continues to evolve, univariate linear regression remains a crucial building block for more complex learning algorithms. With the rise of deep learning and reinforcement learning, the importance of understanding and applying linear regression in AI cannot be overstated. Whether you are a beginner or an expert in the field, a strong foundation in linear regression will undoubtedly benefit your AI journey.

References

Chuah, WeiQin. “Univariate Linear Regression: Explained with Examples.” Becoming Human: Artificial Intelligence Magazine, 9 Apr. 2021, https://becominghuman.ai/univariate-linear-regression-clearly-explained-with-example-4164e83ca2ee. Accessed 4 Apr. 2023.

Mittal, Aayush. “Interactive 3D Gradient Descent Visualization.” Plotly, https://chart-studio.plotly.com/~aayushmittalaayush/4/#/. Accessed 4 Apr. 2023.

Mittal, Aayush. “Scatter Plot with Linear Regression Line.” Plotly, https://chart-studio.plotly.com/~aayushmittalaayush/2/#/. Accessed 4 Apr. 2023.

“Univariate Linear Regression Tutorials & Notes.” HackerEarth, https://www.hackerearth.com/practice/machine-learning/linear-regression/univariate-linear-regression/tutorial/. Accessed 4 Apr. 2023.