Introduction to Machine Learning Models

Machine learning models have revolutionized the way we understand and work with data. These computational tools facilitate nuanced tasks, such as prediction, classification, and clustering. Relying on robust algorithms, they digest data and extract pertinent patterns. Over time, they refine their operations autonomously, thus optimizing performance. Their adaptive nature distinguishes them from traditional software models.

The rise of big data and advancements in computational power have accelerated the development and deployment of such models, marking a paradigm shift in how we approach problem-solving across disciplines. Incorporating these models into existing systems augments efficiency, thereby transforming operations. Consequently, understanding the intricacies of machine learning models is vital for academics and industry professionals alike.

Also Read: How to Use Linear Regression in Machine Learning

Table Of Contents

- Introduction to Machine Learning Models

- What Are Machine Learning Models?

- Historical Context of Machine Learning

- What is a Machine Learning Algorithm?

- What is Model Training in Machine Learning?

- Categories of Machine Learning Algorithms

- Supervised Learning Models

- Unsupervised Learning Models

- Reinforcement Learning Models

- Evaluation Metrics

- Hyperparameter Optimization

- Challenges in Machine Learning Models

- Ethical Considerations

- Machine Learning Models in Industry

- Future Trends in Machine Learning Models

- Case Studies

- Case Study 1: Self-driving Cars Using Reinforcement Learning Algorithms

- Case Study 2: Healthcare Diagnosis Using Logistic Regression Model

- Case Study 3: Customer Segmentation Using K-Means Clustering

- Case Study 4: Natural Language Processing in Chatbots

- Case Study 5: Fraud Detection in Financial Transactions

- Case Study 6: Image Recognition in Social Media

- Case Study 7: Predictive Maintenance in Manufacturing

- Conclusion and Outlook

- FAQ’S

- What is Regression in Machine Learning?

- What is a Classifier in Machine Learning?

- How many ML models are there?

- What is the best model for machine learning?

- What is model deployment in Machine Learning (ML)?

- What are Deep Learning Models?

- What is Time Series Machine Learning?

- Where can I learn more about machine learning?

- References

What Are Machine Learning Models?

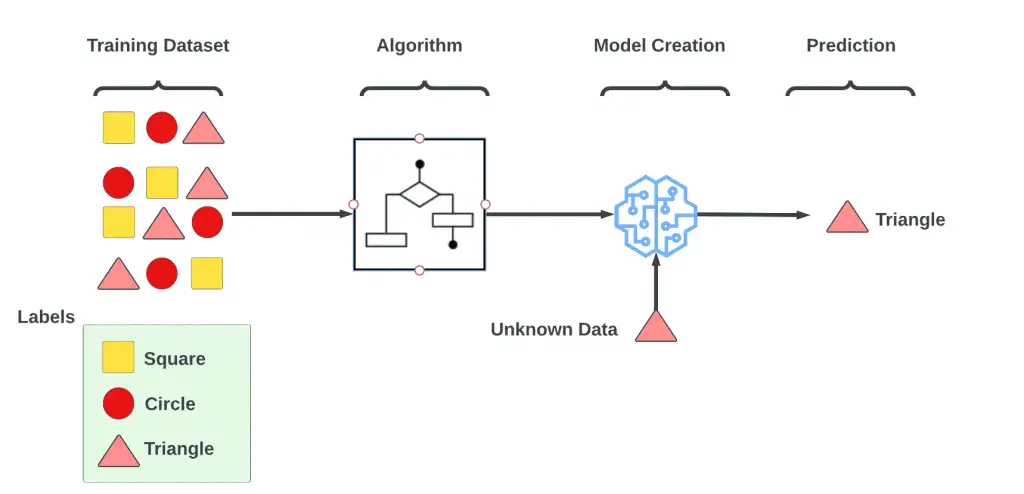

Machine learning models are computational frameworks that learn patterns from data. Unlike traditional algorithms, these models adapt their behavior based on the information they process, making them capable of performing tasks without explicit programming. They operate by training on a set of data, learning to make predictions or decisions without human intervention. The “learning” occurs through the adjustment of internal parameters, which are optimized to enable the model to generalize well to new, unseen data.

Types of machine learning models span supervised, unsupervised, and reinforcement learning, each serving different kinds of problems. The choice of model and its associated parameters often depends on the nature of the problem, the type of data available, and the performance metrics deemed important for the task at hand. From simple linear regressions to complex neural networks, the variety and capabilities of machine learning models have expanded dramatically, offering solutions for a myriad of applications including natural language processing, medical diagnosis, and financial forecasting.

Historical Context of Machine Learning

Machine learning is like teaching computers to learn from experience, combining elements of computer science—the study of how computers work—and statistics—the science of data and numbers. Imagine it as a smart robot that gets smarter the more you interact with it.

In the early days, this technology was mostly about helping computers recognize patterns or sort things into categories. Think of it like teaching a computer to differentiate between cats and dogs based on photos.

As computers became more powerful, the techniques used in machine learning grew more complex. By the 1990s, these smarter methods let computers do more useful stuff, like helping to filter spam emails or improve how search engines work.

As the 21st century emerged, machine learning experienced a transformative shift. Transitioning from basic tasks, it embraced advanced paradigms like deep learning, empowering computers with self-learning capabilities. Picture a machine not merely distinguishing between a cat and a dog, but also identifying specific breeds.

These days, machine learning has so many uses that it’s everywhere around us. It helps doctors diagnose diseases, helps banks detect fraudulent activities, and even powers the recommendation systems that suggest what movie you should watch next.

Understanding how machine learning has grown over time helps us see how far it’s come and how much more it might be able to do in the future. It’s not just a tool for tech companies; it’s a transformative technology that’s changing the world as we know it.

What is a Machine Learning Algorithm?

A machine learning algorithm is essentially the recipe that guides the making of a learning model. Just as a cooking recipe lists the ingredients and steps to make a dish, the algorithm outlines the rules and procedures for a computer to learn from data. These algorithms are what make it possible for the machine to adapt and improve its performance over time.

Some algorithms are straightforward, perfect for simple jobs. Take linear regression, for example. It’s like basic arithmetic for computers and is used to identify trends in data—much like plotting a line of best fit on a graph. This could be used for things like predicting house prices based on location and size.

Other algorithms are more complex and suited for intricate tasks. Imagine a convolutional neural network as a high-level, advanced recipe for making a gourmet dish. It’s capable of handling challenging problems like recognizing what’s in a photo. You could use this to develop a phone app that identifies plant species from pictures.

Choosing the right algorithm is crucial and depends on what you need the model to do, what kind of data you have, and what you want to achieve in the end. It’s like choosing the right tool for the job. If you’re hanging a picture, a hammer is ideal; if you’re assembling a bookshelf, you’ll want a screwdriver. Similarly, understanding the pros and cons of each algorithm helps you build a more effective machine learning model that’s tailored for your specific needs.

Also Read: Introduction to Machine Learning Algorithms

What is Model Training in Machine Learning?

In the world of machine learning, think of model training as the “practice sessions” for the computer. During this phase, the model feeds on a dataset, learning and adjusting its inner settings to make accurate predictions. Just like a musician practices scales to get better, the machine iterates over data multiple times, fine-tuning its capabilities.

The process is guided by something called a ‘cost’ or ‘loss function,’ which essentially serves as a scorekeeper. This function measures how far off the model’s guesses are from the actual answers in the dataset. The goal is to get this score as low as possible, much like a golfer aims for a low score.

To make these adjustments, an optimization technique, often gradient descent, is applied. Imagine trying to find the lowest point in a valley by taking steps downward; that’s what gradient descent does mathematically.

Throughout time, experts have developed sophisticated training methods to enhance model reliability. Specifically, techniques such as ‘regularization’ and ‘batch normalization’ act like training wheels, stabilizing the model. These methods ward off over-specialization on training data, thereby ensuring more reliable performance on unfamiliar data.

Proper training is vital for a model’s effectiveness in real-world tasks. As the model undergoes superior training, it consequently gains the ability to make more accurate predictions on unseen data. This critical skill of generalizing to new scenarios not only solidifies the model’s lab performance, but also guarantees its effectiveness in real-world applications.

Categories of Machine Learning Algorithms

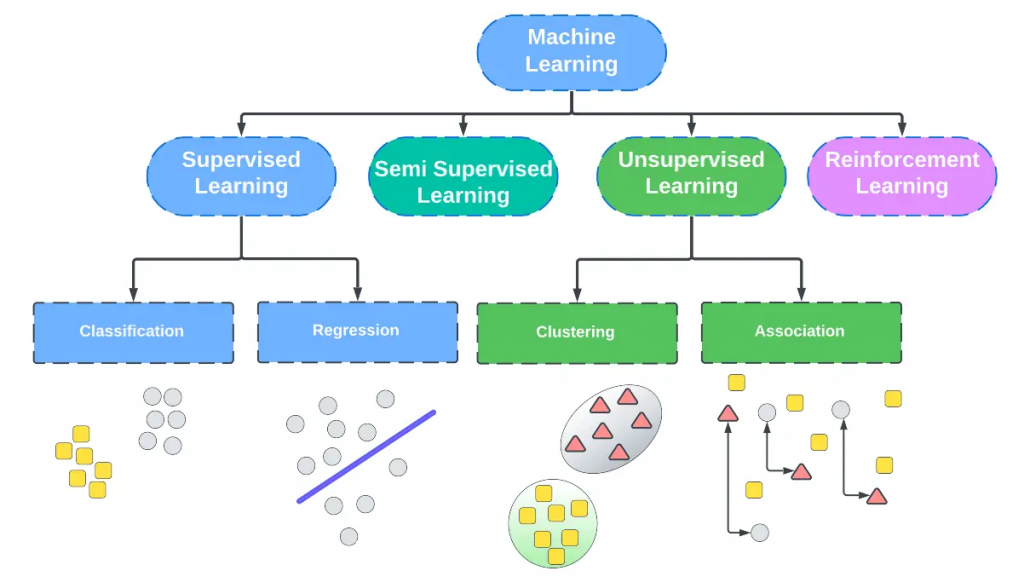

Machine learning algorithms fall under distinct categories based on their learning mechanisms. These categories include supervised learning, unsupervised learning, and reinforcement learning. Each category has its own set of algorithms, complexities, and applications, making it crucial to choose wisely for optimal performance.

Supervised Learning Models

Supervised learning is a common type of machine learning where the model learns from examples that have known outcomes. Think of it like a student learning from a textbook with the answers in the back. Popular techniques in supervised learning include linear regression and decision trees.

In this approach, the model uses a dataset with known answers (labeled data) to learn how to predict outcomes for new data. This is really good for tasks where we want to make future predictions based on past data.

Linear Regression

Linear regression is a basic but powerful tool in supervised learning. Its goal is to find a straight-line formula that best predicts an outcome based on input data. It uses a method called least squares to find this best-fitting line.

The model then uses this line to make future predictions. One of its strengths is that it’s easy to understand and doesn’t require a lot of computing power. This makes it a go-to option for initial analysis of data. It’s commonly used in different areas like economics to forecast demand, and in finance to estimate asset values. Despite its simplicity, it can be highly effective, particularly for tasks that need quick and reliable answers.

Decision Trees

Decision trees provide a straightforward way to make decisions using a set of rules. Imagine a flowchart where each step is a question that helps you make a decision; that’s essentially what a decision tree does. It breaks down a larger question into smaller, easier-to-answer questions, organizing them in a tree-like structure.

In this structure, each “node” is like a fork in the road, representing a feature or attribute that the model considers. Each “leaf” on the tree is a possible outcome or decision. Decision trees are popular because they are easy to understand and use.

They are versatile, used for different types of tasks like classifying objects or predicting numerical values. For example, in healthcare, they can help pinpoint risk factors for certain diseases. In finance, they can streamline the process of approving or denying loans.

Support Vector Machines

Support Vector Machines, or SVMs, work by finding the best dividing line—or in more complex cases, a plane or hyperplane—that separates different groups in the data. The goal is to put as much space as possible between different categories. SVMs can also be adapted to deal with more complex, non-linear data using something called “kernel methods.”

This technique shines when dealing with data that has many features or dimensions, like text analysis or image recognition. One of its main advantages is its robustness, particularly when navigating spaces with many dimensions.

That said, SVMs can require a lot of computational power, making them less ideal for very large datasets. Their high level of accuracy in specific tasks makes them valuable for specialized applications.

Logistic Regression

Contrary to what its name implies, logistic regression is commonly used for classifying data into different groups. It uses a special function, the logistic function, to predict the odds of a particular event occurring. The outcome is a probability, which is then translated into a class label.

In practical terms, logistic regression is frequently used in medicine to help diagnose illnesses, and in marketing to predict customer behavior, like whether a customer will leave a service. One of its key strengths is that it provides probabilities, which helps in understanding the reasoning behind a classification.

Although it’s simpler compared to some other machine learning algorithms, its ability to deliver quick, understandable results makes it a go-to method in many applications.

Naive Bayes

Naive Bayes models use Bayes’ theorem and probability theory to classify data. While they make the simple assumption that all features are independent of each other, they are often surprisingly good at their jobs. These models excel in tasks like sorting text into categories, gauging public sentiment, and identifying spam.

They calculate the likelihood of each category given the data and choose the most probable one. These models are computationally efficient, allowing for speedy training and real-time analysis. Despite their simplicity, their ability to handle many variables makes them useful in a range of fields.

kNN

The k-Nearest Neighbors algorithm classifies data points based on their proximity to k nearest data points in the feature space. It is a lazy learning algorithm, meaning it doesn’t learn a discriminative function from the training data but memorizes it instead.

kNN can be employed for both classification and regression tasks. Due to its simplicity, it often serves as a baseline in more complex machine learning pipelines. Its performance can suffer in high-dimensional spaces, necessitating dimensionality reduction techniques for optimal functionality.

Random Forest

Random Forest is an ensemble learning method that constructs multiple decision trees during training. It merges the output of these individual trees for more accurate and stable predictions. By averaging results or selecting the most frequent class, Random Forest effectively mitigates the overfitting issue common in single decision trees.

It has a wide range of applications, including recommendation systems, image classification, and financial risk assessment. The algorithm’s robustness to noise and ability to handle imbalanced datasets make it a versatile tool in machine learning.

Boosting algorithms

Boosting aggregates weak learners to form a strong learner, focusing on training instances that are hard to classify. Algorithms like AdaBoost, Gradient Boosting, and XGBoost belong to this category. Boosting methods are renowned for their high accuracy and are often used in Kaggle competitions and industrial applications.

They find utility in complex tasks like ranking and object detection, often outperforming other machine learning methods. However, they can be sensitive to noisy data and outliers, which necessitates careful pre-processing.

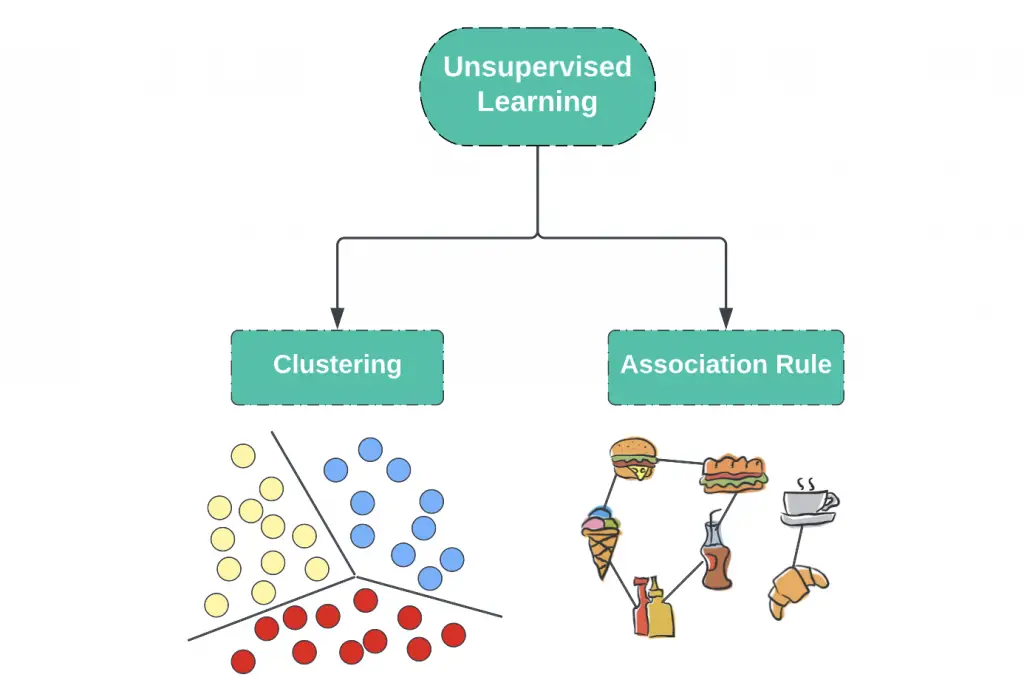

Unsupervised Learning Models

Unsupervised learning models analyze data without the guidance of a labeled outcome variable. These algorithms discover inherent structures within datasets, enabling tasks like clustering, dimensionality reduction, and anomaly detection. k-means, hierarchical clustering, and Principal Component Analysis are notable examples.

Applications of unsupervised models range from customer segmentation in marketing to fraud detection in financial services. The major challenge lies in model evaluation, as traditional metrics like accuracy are not directly applicable.

Also Read: What is Unsupervised Learning?

Clustering Algorithms

Clustering algorithms partition data into distinct groups based on feature similarity. Algorithms like k-means, hierarchical clustering, and DBSCAN are common choices. In bioinformatics, clustering helps identify genes with similar expression patterns. In marketing, it’s used for customer segmentation. These algorithms often serve as preliminary steps in larger data analysis pipelines, providing valuable insights into data structure.

Principal Component Analysis

Principal Component Analysis (PCA) is a dimensionality reduction technique. It transforms the original variables into a new set of uncorrelated variables known as principal components. These components capture most of the data’s variance, enabling simpler, faster processing without significant loss of information. PCA finds use in image compression, financial risk models, and gene expression analysis. Its power lies in its ability to simplify complex data sets, thus making subsequent analyses more manageable.

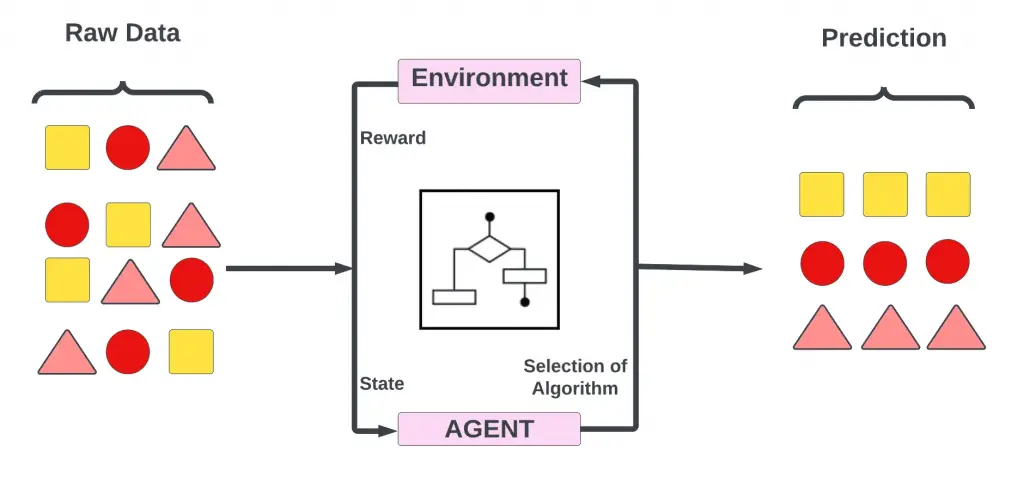

Reinforcement Learning Models

Reinforcement learning models are a subset of machine learning focused on decision-making. In this approach, an agent learns to interact with an environment to achieve a specific goal. The agent receives rewards or penalties based on the actions it takes, guiding it to optimize its behavior over time.

This learning paradigm is particularly suited for problems where the optimal solution involves a sequence of decisions, such as game playing, robotics, and autonomous vehicles. The agent uses a policy, essentially a set of rules, to decide its actions at each state of the environment.

Various algorithms can be used in reinforcement learning, such as Q-Learning and Deep Reinforcement Learning. These models are often computationally intensive, but their ability to adapt and learn from complex environments makes them increasingly important in today’s data-driven world.

Also Read: Is deep learning supervised or unsupervised?

Q-Learning

The Q-Learning algorithm, a subset of reinforcement learning, aims to identify the best action for each state to reach a goal. The algorithm calculates and stores state-action pair values in a Q-table, which the agent uses for decision-making.

The essence of Q-Learning is to learn a policy that will result in maximum total reward. The algorithm iteratively updates the Q-values based on the rewards received for actions taken, eventually converging on optimal action-selection behavior.

One of the major advantages of Q-Learning is its ability to compare the expected utility of the available actions without requiring a model of the environment. This makes it highly effective in situations where the model of the environment is either not available or too complex to use for optimization.

Deep Reinforcement Learning

Deep Reinforcement Learning (DRL) combines neural networks with reinforcement learning, creating systems capable of learning complex behavior. In traditional reinforcement learning, the Q-table for storing state-action values becomes impractical for large or continuous state spaces. Deep learning helps solve this bottleneck by approximating Q-values with neural networks, thus allowing the model to generalize to unseen states.

DRL has been successful in tackling a broad array of complex tasks, from beating human champions in games like Go and Poker to controlling robotic limbs and autonomous vehicles. The key advantage of using neural networks in reinforcement learning is the capability to handle high-dimensional inputs, making them ideal for tasks such as image and speech recognition in complex, real-world environments.

Though computationally demanding, DRL’s ability to manage complexity and adapt in highly variable settings positions it at the forefront of emerging AI technologies.

Evaluation Metrics

Evaluation metrics in machine learning offer quantitative ways to assess a model’s performance. These metrics vary based on the type of problem at hand—classification, regression, clustering, or others. For classification problems, metrics such as accuracy, precision, and recall are often used. Accuracy measures the fraction of correctly classified instances, while precision and recall focus on the performance related to specific classes. F1 Score is the harmonic mean of precision and recall, offering a balance between the two.

In regression problems, metrics like Mean Absolute Error (MAE) and Root Mean Square Error (RMSE) are commonly used. They quantify the average deviation of the model’s predictions from the actual values.

To evaluate the quality of clusters in clustering problems, one may employ metrics like silhouette score or Davies–Bouldin index.

These metrics serve as a foundation for tuning model parameters, selecting appropriate algorithms, and ultimately, validating the utility of a machine learning model.

Accuracy, Precision, Recall

Accuracy measures the fraction of correct predictions among the total instances. Precision assesses the number of true positives among the predicted positives, while recall measures the true positives among actual positives. These metrics are especially vital in imbalanced datasets and offer a nuanced view of model performance.

F1 Score

The F1 Score is the harmonic mean of precision and recall, providing a single metric that balances the trade-off between both. It is particularly useful when classes are imbalanced or when false positives and false negatives have different costs. Often used in text classification and medical diagnosis, the F1 Score offers a more comprehensive performance measure compared to accuracy alone.

ROC Curve

The Receiver Operating Characteristic (ROC) Curve plots the true positive rate against the false positive rate. The area under the ROC curve, often abbreviated as AUC-ROC, serves as an effective measure of the model’s classification performance. It is widely used in various fields, including machine learning, medicine, and radiology, to compare different models.

Hyperparameter Optimization

Hyperparameter optimization involves tuning the configurable parameters of a machine learning model to improve its performance. Techniques like grid search, random search, and Bayesian optimization are commonly employed. This step is crucial as poorly chosen hyperparameters can drastically reduce a model’s effectiveness. Yet, it’s a computationally expensive process that can significantly increase the time needed for model training.

Challenges in Machine Learning Models

Despite advancements, machine learning models still face challenges like overfitting, underfitting, data imbalance, and computational cost. These issues require careful consideration during the model-building process to ensure robust, reliable outcomes. Techniques like regularization, data augmentation, and ensemble methods often help mitigate these challenges.

Overfitting and Underfitting

Underfitting and overfitting are issues related to the performance of machine learning models. Underfitting occurs when a model is too simplistic to capture the underlying patterns in the data. As a result, it performs poorly on both the training set and unseen data, failing to provide accurate predictions. In essence, underfitting is a sign that the model has not learned sufficiently from the training data.

Overfitting, on the other hand, is the result of a model learning the training data too well, including its noise and outliers. While such a model performs excellently on the training set, it generalizes poorly to new, unseen data. The model becomes too tailored to the training set, losing its ability to generalize to other data.

Both underfitting and overfitting are detrimental to the predictive performance of machine learning models. They are typically addressed through techniques like regularization, cross-validation, and ensemble methods, aiming to create a model that balances complexity and generalizability.

Data Imbalance

Data imbalance refers to an unequal distribution of classes within a dataset. In classification tasks, this manifests as a significant skew in the number of instances for each class. For example, in a binary classification problem, you might have 90% of samples in one class and only 10% in the other. This imbalance poses a challenge for machine learning models, as they tend to be biased towards the majority class, often overlooking the minority class.

Data imbalance can lead to misleadingly high accuracy scores, as the model simply learns to predict the majority class for all inputs. In practical terms, this means the model is not effectively learning the characteristics of the minority class, which is often of high interest.

Various techniques can address this issue, such as resampling methods that either oversample the minority class or undersample the majority class. Advanced algorithms like Synthetic Minority Over-sampling Technique (SMOTE) can also be employed. Alternatively, cost-sensitive learning and ensemble methods can adjust the algorithm to be more sensitive to the minority class.

Computational Cost

Computational cost refers to the resources required for running a machine learning algorithm. These resources can include time, memory, and processing power. A high computational cost implies that an algorithm is resource-intensive, often requiring advanced hardware or prolonged runtime to perform its tasks. In machine learning, complex models like deep neural networks often come with high computational costs due to their numerous parameters and layers.

In scenarios requiring real-time processing or limited resources, like mobile devices, computational cost becomes a critical consideration. It also impacts the scalability of machine learning applications, affecting how well a system can handle increased data volume or complexity.

Optimization techniques, including algorithmic improvements and hardware acceleration, aim to mitigate computational costs. These enhancements enable faster training and prediction times, making machine learning models more feasible for a variety of applications.

Ethical Considerations

Ethical concerns in machine learning encompass issues of bias, fairness, and data privacy. Models can inadvertently learn societal biases present in the training data, leading to discriminatory outcomes. Researchers and practitioners increasingly regard ethical frameworks and fairness-aware algorithms as essential for responsible machine learning.

Bias and Fairness In Machine Learning Models

Bias refers to systematic errors that favor one group over another, often perpetuating existing societal inequalities. For instance, a facial recognition system trained mostly on images of people from one ethnicity may perform poorly on individuals from other ethnic groups.

Fairness, on the other hand, aims for equitable treatment across diverse groups. Ensuring fairness in machine learning models involves addressing both the overt and subtle biases that can infiltrate algorithms. These biases may arise from imbalanced or prejudiced training data, or from flawed feature selection that inadvertently captures discriminatory patterns.

Addressing bias and fairness usually involves multiple stages, from data collection to model evaluation. Techniques like re-sampling, re-weighting, and algorithmic adjustments can help mitigate bias. Fairness metrics, such as demographic parity or equalized odds, quantify how well a model performs across different groups.

Failure to address bias and fairness can have severe ethical and legal implications, especially in sensitive applications like healthcare, criminal justice, and financial services. Thus, it’s imperative to scrutinize machine learning models for bias and take corrective action to ensure fairness.

Bias and fairness are critical concerns in the development and deployment of machine learning models.

Data Privacy In Machine Learning Models

Data privacy in machine learning models is a pressing concern, especially given the increasing volume of sensitive data used for training. The issue revolves around how data is collected, stored, and utilized without compromising the confidentiality and anonymity of individuals. Unregulated or careless use of data can lead to serious ethical and legal repercussions, including violation of privacy laws like the General Data Protection Regulation (GDPR) in the European Union.

Various techniques aim to preserve data privacy in machine learning. Differential privacy provides a mathematical framework for quantifying data disclosure risks, allowing algorithms to learn from data without revealing individual entries. Homomorphic encryption enables computations on encrypted data, providing results that, when decrypted, match what would have been obtained with unencrypted data.

Another approach is federated learning, which allows a model to be trained across multiple decentralized devices holding local data samples, without exchanging them. This ensures that all the training data remains on the original device, enhancing privacy.

Securing data privacy is not merely a technical challenge but also a governance issue. Robust data management policies, consent mechanisms, and transparency in data usage are equally critical in maintaining public trust and ensuring ethical machine learning practices.

Machine Learning Models in Industry

Machine learning models have gained immense traction across various industrial sectors due to their ability to derive insights from data and automate complex tasks. In healthcare, algorithms assist in diagnostic imaging, personalized treatment plans, and drug discovery. Predictive models in this sector help forecast patient outcomes, thereby enabling preemptive medical interventions.

In finance, machine learning contributes to risk assessment, fraud detection, and algorithmic trading. Credit scoring models, for instance, evaluate a range of variables to determine loan eligibility, while fraud detection systems flag suspicious activities in real-time.

Retail and e-commerce utilize recommendation systems to offer personalized shopping experiences. These algorithms analyze customer behavior, preferences, and past purchases to suggest relevant products, thereby increasing sales and customer engagement.

In manufacturing, machine learning models optimize supply chain logistics and improve quality control. Predictive maintenance algorithms anticipate equipment failures, allowing for timely repairs and reducing downtime.

Energy companies deploy machine learning for demand forecasting and optimizing grid distribution, ensuring efficient energy use. In the automotive industry, machine learning is pivotal in the development of autonomous vehicles, providing the algorithms that enable cars to ‘learn’ from their environment.

Across these domains, machine learning not only enhances operational efficiency but also fosters innovation, opening new avenues for data-driven decision-making and value creation.

Future Trends in Machine Learning Models

The landscape of machine learning is ever-evolving, marked by several emerging trends that signal transformative shifts in technology and application.

Transfer Learning

One notable trend is transfer learning, which allows a pre-trained model to adapt to a different but related task, reducing training time and data requirements. This technique is especially valuable in fields where data is scarce or expensive to obtain.

Federated Learning

Federated learning also promises to reshape the future of machine learning. It enables models to learn from decentralized data residing on local devices, thereby enhancing data privacy and reducing data transmission costs. This approach is particularly advantageous for Internet of Things (IoT) applications.

Explainable AI

Another trend is the advancement of explainable AI, which aims to make machine learning models more transparent and interpretable. This is crucial for sensitive applications like healthcare and criminal justice, where accountability and understanding of model decisions are paramount.

AutoML

AutoML, or Automated Machine Learning, is gaining popularity for automating the end-to-end process of applying machine learning to real-world problems. It aims to simplify the complex process of model selection, tuning, and deployment.

NLP

Advancements in natural language processing (NLP) and computer vision are also notable, bolstered by increasingly complex architectures and larger datasets. This could revolutionize industries like healthcare, where models can analyze medical literature for research, or retail, where computer vision can automate inventory management.

Edge AI

Edge AI is gaining attention as it enables machine learning algorithms to run locally on a hardware device, reducing the need for data to travel over a network. This is key for applications requiring real-time decision-making and low latency.

Together, these trends indicate a future where machine learning models will become more efficient, accessible, and integrated into our daily lives, transforming the way we interact with technology and the world.

Case Studies

Case Study 1: Self-driving Cars Using Reinforcement Learning Algorithms

Self-driving cars employ sophisticated machine learning systems to navigate real-world environments. The reinforcement learning algorithms use sensor data as input variables to inform a myriad of decisions like acceleration, braking, and turning. A crucial aspect of this application is the use of effective training cycles, incorporating both positive and negative examples, to refine the model’s behavior. Techniques like Q-learning are often used to guide the optimization process, making autonomous vehicles safer and more efficient.

Case Study 2: Healthcare Diagnosis Using Logistic Regression Model

Healthcare has become a significant beneficiary of machine learning technologies, especially in diagnostics. Logistic regression models often serve as the core engine for predictive analytics in healthcare. Variables such as patient age, medical history, and biochemical markers are processed as input values. These variables undergo statistical classification to produce probabilities related to various health outcomes. Effective training on diverse clinical datasets enables these models to provide highly accurate diagnostic assistance, influencing treatment plans and ultimately saving lives.

Case Study 3: Customer Segmentation Using K-Means Clustering

Customer segmentation is crucial for businesses looking to deliver personalized experiences. K-Means Clustering is commonly used for this purpose. It operates on an input matrix consisting of customer data like purchase history, activity metrics, and demographics. The output examples from the algorithm provide clearly defined clusters, allowing businesses to tailor marketing strategies and promotional activities to different customer segments. Pattern recognition further refines these clusters to optimize business operations.

Case Study 4: Natural Language Processing in Chatbots

Chatbots use complex algorithms to engage with users in a human-like manner. Inputs from text-based interactions feed into a belief network, which uses Graphical models to predict possible user intents. Techniques like Semi-supervised learning allow the model to continuously learn from new data. This ensures a dynamic and more engaging user experience. Computational methods, particularly linear algebra, play a vital role in the hidden layers of the chatbot algorithms.

Case Study 5: Fraud Detection in Financial Transactions

Fraud detection is of paramount importance in financial systems. Data like transaction amounts, user behavior, and historical fraud patterns serve as input variables. The model relies on statistical methods, often Gaussian processes and kernel regression, to analyze this high-dimensional space. Negative examples from fraudulent transactions and positive examples from legitimate ones train the binary classification model. Effective training results in models capable of real-time fraud identification, thereby minimizing financial risks.

Case Study 6: Image Recognition in Social Media

Image recognition has become a staple feature in social media platforms. Convolutional neural networks analyze the input layer consisting of pixel values. Hidden layers process these values through linear algebra computations to extract features. These features are then classified in the output layer to identify people, places, or objects within the images. The training examples used to train these models come from vast repositories of tagged or categorized images, ensuring a broad spectrum of recognition capabilities.

Case Study 7: Predictive Maintenance in Manufacturing

In manufacturing, machine learning plays an integral role in predictive maintenance. Data generated from machine sensors are processed as continuous values in real-time. Algorithms like regression trees and Polynomial Regression analyze this data to predict future machine failures. Effective training using both historical data and real-time inputs ensures that the machine learning models can preemptively signal maintenance needs, thereby reducing unexpected downtime and increasing overall operational efficiency.

Each of these case studies illustrates the difference between machine learning techniques and traditional methods. They highlight how machine learning can be customized to specific applications using a variety of algorithms and training processes.

Conclusion and Outlook

The transformative impact of machine learning models on diverse sectors cannot be overstated. From autonomous vehicles to healthcare diagnostics, these models are significantly altering how we interact with technology and the world. Their influence extends beyond mere automation or predictive capabilities; they redefine problem-solving across disciplines. Utilizing techniques ranging from logistic regression to complex reinforcement learning algorithms, machine learning offers unprecedented effectiveness and precision in decision-making processes.

However, challenges persist. Issues surrounding data privacy, model interpretability, and algorithmic bias continue to draw scrutiny. As we continue to integrate machine learning more deeply into societal structures, resolving these ethical and technical dilemmas becomes increasingly critical.

Looking ahead, the trajectory for machine learning models appears steeply upward. Advances in computational power will likely facilitate even more complex algorithms and applications. Moreover, emerging paradigms such as quantum machine learning and edge AI promise to unlock new capabilities, potentially revolutionizing how we comprehend machine learning today.

In essence, machine learning models are not just a technological trend but a foundational pillar for future innovations. Their evolving sophistication promises to offer solutions to some of humanity’s most pressing issues, from climate change to medical research. Thus, understanding and participating in this dynamic field is more than an academic or industrial pursuit; it’s a venture of societal significance.

Also Read: Top 20 Machine Learning Algorithms Explained

FAQ’S

What is Regression in Machine Learning?

In machine learning, regression refers to a set of statistical methods aimed at predicting a continuous outcome variable, often called the target or dependent variable, based on one or more predictor variables. Unlike classification tasks, which predict discrete labels, regression seeks to model and analyze the relationships between variables to predict a numerical value. Techniques such as linear regression, polynomial regression, and ridge regression are commonly used in regression tasks.

Linear regression, for instance, assumes a linear relationship between the input variables and the target. It aims to fit a linear equation to observed data. The equation’s coefficients are derived through optimization techniques like gradient descent, aiming to minimize a loss function that measures prediction errors.

Regression models find extensive use across disciplines, including economics, epidemiology, environmental science, and more. For example, they can be used to predict stock prices, assess medical outcomes, or estimate energy consumption. The power of regression lies in its simplicity, interpretability, and broad applicability across different domains.

What is a Classifier in Machine Learning?

A classifier in machine learning is an algorithm that assigns a label to an input data point. Classifiers like logistic regression, decision trees, and neural networks are instrumental in tasks ranging from spam detection to medical diagnosis.

How many ML models are there?

The types of machine learning models are vast and ever-growing, including supervised, unsupervised, and reinforcement learning models. Within these categories, various algorithms exist, such as decision trees, neural networks, and support vector machines.

What is the best model for machine learning?

There is no one-size-fits-all “best” model in machine learning. Model selection depends on the specific problem, data type, and performance requirements. Ensemble methods that combine multiple algorithms often yield robust results.

What is model deployment in Machine Learning (ML)?

Model deployment refers to integrating a trained machine learning model into a production environment where it can take new data, perform inference, and deliver predictions or decisions in real-time or batch mode.

What are Deep Learning Models?

Deep learning models are neural networks with three or more layers. They automatically learn to represent data by training on large datasets and are extremely effective in tasks like image and speech recognition.

What is Time Series Machine Learning?

Time series machine learning involves algorithms specifically designed to handle data points ordered in time. Tasks often include forecasting stock prices, weather patterns, and energy consumption.

Where can I learn more about machine learning?

If you’re looking to deepen your understanding of machine learning, aiplusinfo.com is an excellent starting point. The platform offers tutorials that cover everything from basic concepts to advanced algorithms. Its comprehensive guides and how-tos cater to both novices and experts. The site stands out for its focus on the ethical and societal implications of AI, ensuring a holistic understanding of the subject matter. Whether you’re interested in technical mastery or grasping broader impacts, this resource is highly recommended.

References

Bonaccorso, Giuseppe. Machine Learning Algorithms. Packt Publishing Ltd, 2017.

Molnar, Christoph. Interpretable Machine Learning. Lulu.com, 2020.

Suthaharan, Shan. Machine Learning Models and Algorithms for Big Data Classification: Thinking with Examples for Effective Learning. Springer, 2015.